PingPIng

Content Count: 12

Days Won: 2

Posts posted by PingPIng

16 hours ago, iqrf said:

Hello,

Is it possible to somehow create a submodule? Something like this:

    MainModule := TPythonModule.Create(nil);
    MainModule.ModuleName := 'spam';
    SubModule := TPythonModule.Create(nil);
    SubModule.ModuleName := 'spam.test';

and call it in Python:

    import spam
    import spam.test
    spam.function1()
    spam.test.function2()

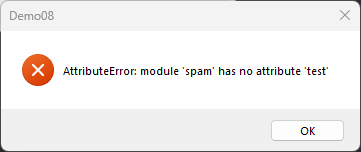

It reports an error.

If I do it like this, it's fine:

    import spam
    import spam.test as T
    spam.function1()
    T.function2()

Can it be solved somehow?

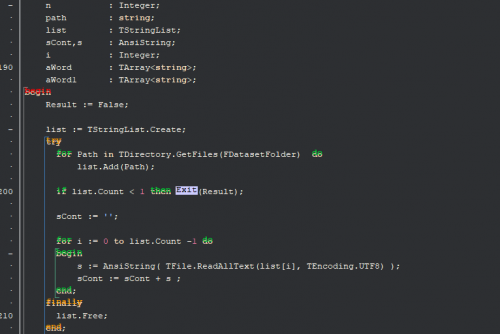

Thanks for the ideas.

    PythonModule1 := TPythonModule.Create(nil);
    PythonModule1.Engine := GetPythonEngine ;
    PythonModule1.ModuleName := 'call';
    PythonModule1.AddDelphiMethod('preprocess',preprocess,'This function pre-processes data.');
    PythonModule1.MakeModule;

    function Disassembler.preprocess(pself, args: PPyObject): PPyObject; cdecl;
    var
      op_data, Dis_data: PPyObject;
      opcode_sequences, disasm_sequences, opcode_input_data, disasm_input_data: Variant;
    begin
      with GetPythonEngine do
      begin
        if PyArg_ParseTuple(args, 'OO', @op_data, @Dis_data) <> 0 then
        begin
          var bm := BuiltinModule();
          var p_op_data := VarPythonCreate(op_data);
          var p_Dis_data:= VarPythonCreate(Dis_data);
          var b :=bm.type(opcode_tokenizer);

          // Preprocess data here as shown above
          opcode_sequences := opcode_tokenizer.texts_to_sequences(p_op_data);
          disasm_sequences := disasm_tokenizer.texts_to_sequences(p_Dis_data);

          opcode_input_data := t_tf.tf.keras.preprocessing.sequence.pad_sequences(opcode_sequences, maxlen:=Fopcode_seq_len) ;
          disasm_input_data := t_tf.tf.keras.preprocessing.sequence.pad_sequences(disasm_sequences, maxlen:=Fdisasm_seq_len) ;

          opcode_input_data := t_tf.tf.keras.utils.to_categorical(opcode_input_data, num_classes:=Finput_dim);
          disasm_input_data := t_tf.tf.keras.utils.to_categorical(disasm_input_data, num_classes:=Foutput_dim);

          Result := VariantAsPyObject( TPyEx.Tuple([opcode_input_data, disasm_input_data]) );
        end
        else
          Result := nil;
      end;
    end;
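On the submodule question quoted above: the actual error text is only visible in the screenshot, so the following is a guess rather than a diagnosis. One plausible cause is that both modules are registered in `sys.modules`, but the parent module never receives the submodule as an attribute. The sketch below reproduces that situation in pure Python, with `types.ModuleType` objects standing in for the two Delphi-created modules (an assumption; it does not use Python4Delphi at all). It assumes Python 3.7 or later, where `import a.b as c` falls back to `sys.modules`.

```python
import sys
import types

# Stand-ins for the two modules the host application registers.
# (Hypothetical: in the real case these would come from TPythonModule.)
parent = types.ModuleType("spam")
parent.function1 = lambda: "function1"

child = types.ModuleType("spam.test")
child.function2 = lambda: "function2"

sys.modules["spam"] = parent
sys.modules["spam.test"] = child

# Works: "import a.b as c" falls back to sys.modules["spam.test"].
import spam.test as T
assert T.function2() == "function2"

# The import itself succeeds and binds the name `spam` ...
import spam.test

# ... but attribute access fails, because `spam` has no attribute `test`.
try:
    spam.test.function2()
    failed = False
except AttributeError:
    failed = True

# Possible fix: expose the submodule as an attribute of its parent.
setattr(sys.modules["spam"], "test", sys.modules["spam.test"])
result = spam.test.function2()
```

If this is indeed the cause, running the single `setattr` line once from Python after both modules have been created should make `spam.test.function2()` work in the embedded interpreter too.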

The main problem is that there is no community of programmers who do this as a hobby, and many projects on GitHub are never carried forward because there is no collaboration (unlike in other languages).

I'm an old Delphi user and I don't want to change programming languages, but I notice little interest in developing in this language. Interesting projects are started on GitHub, but then there is no community to carry them forward and develop them, as there is for other languages.

Then there are some limitations of Delphi (operator overloading for classes, memory management, etc.) that should be resolved.

Lately I have been considering switching to another language for my projects (with regret).

    procedure LayersTest.TensorFlowOpLayer;
    var
      mean : TTensor;
      adv : TTensor;
      value : TFTensor;
      inputs : TFTensors;
      x : TFTensors;
    begin
      var l_layers := tf.keras.layers;

      inputs := l_layers.Input( TFShape.Create([24]) );
      x := l_layers.Dense(128, 'relu').Apply(inputs);
      value := l_layers.Dense(24).Apply(x).first;
      adv := l_layers.Dense(1).Apply(x).First;

      var aAxis : TAxis := 1;
      mean := adv - tf.reduce_mean(adv, @aAxis, true);
      adv := l_layers.Subtract.Apply(TFTensors.Create([adv, mean])).first;

      var outputs := l_layers.Add.Apply(TFTensors.Create([value, adv]));
      var model := tf.keras.Model(inputs, outputs);

      model.OnEpochBegin := On_Epoch_Begin;
      model.OnTrainBatchBegin := On_Train_Batch_Begin;
      model.OnEndSummary := On_End_Summary;

      model.compile(tf.keras.optimizers.RMSprop(Single(0.001)), tf.keras.losses.MeanSquaredError, [ 'acc' ]);
      model.summary;

      Assert.AreEqual(model.Layers.Count, 8);

      var res := model.predict(TFTensors.Create( tf.constant(np.arange(24).astype(np.np_float32)[ [np.newaxis, Slice.All] ]) ));
      Assert.Istrue(res.shape= TFShape.Create([1, 24]));

      model.fit(np.arange(24).astype(np.np_float32)[[np.newaxis, Slice.All]],
                np.arange(24).astype(np.np_float32)[[np.newaxis, Slice.All]],
                {Batch_Size} -1, {Epochs} 1, {Verbose} 0);
    end;

    procedure TestXor;
    var
      mModel : Sequential;
    begin
      var x := np.np_array<Single>([ [ 0, 0 ], [ 0, 1 ], [ 1, 0 ], [ 1, 1 ] ],np.np_float32);
      var y := np.np_array<Single>([ [ 0 ], [ 1 ], [ 1 ], [ 0 ] ],np.np_float32);

      mModel := TKerasApi.keras.Sequential;
      try
        mModel.OnEpochBegin := On_Epoch_Begin;
        mModel.OnTrainBatchBegin := On_Train_Batch_Begin;
        mModel.OnEndSummary := On_End_Summary;
        mModel.OnTestBatchEnd := On_Epoch_Begin;

        mModel.add(tf.keras.Input(2));
        mModel.add(tf.keras.layers.Dense(32, tf.keras.activations.Relu));
        mModel.add(tf.keras.layers.Dense(64, tf.keras.activations.Relu));
        mModel.add(tf.keras.layers.Dense(1, tf.keras.activations.Sigmoid));

        mModel.compile(tf.keras.optimizers.Adam, tf.keras.losses.MeanSquaredError, ['accuracy']);
        mModel.fit(x, y, {batch_size}-1, {epochs}50, {verbose}1);

        var s := mModel.predict(TFTensors.Create(x), 4).tostring;
        frmMain.mmo1.Lines.Add(s);
      finally
        mModel.free;
      end;
    end;

    procedure TUnitTest_Basic.TFRandomSeedTest;
    begin
      var initValue := np.arange(6).reshape(TFShape.create([3, 2]));

      tf.set_random_seed(1234);
      var a1 := tf.random_uniform(1);
      var b1 := tf.random_shuffle(tf.constant(initValue));

      // This part we consider to be a refresh
      tf.set_random_seed(10);
      tf.random_uniform(1);
      tf.random_shuffle(tf.constant(initValue));

      tf.set_random_seed(1234);
      var a2 := tf.random_uniform(1);
      var b2 := tf.random_shuffle(tf.constant(initValue));

      Assert.IsTrue(a1.numpy.Equals(a2.numpy));
      Assert.IsTrue(b1.numpy.Equals(b2.numpy));
    end;

And others...

In progress.....

    function TMnistGAN.Make_Generator_model: Model;
    begin
      var mModel := TKerasApi.keras.Sequential(nil,'GENERATOR');

      mModel.OnEpochBegin := On_Epoch_Begin;
      mModel.OnTrainBatchBegin := On_Train_Batch_Begin;
      mModel.OnEndSummary := On_End_Summary;
      mModel.OnTestBatchBegin := On_Train_Batch_Begin;

      mModel.Add( layers.Input(TFShape.Create([noise_dim])).first );
      mModel.Add( layers.Dense(7*7*256, {activation}nil ,{kernel_initializer}nil, {use_bias}False) );
      mModel.Add( layers.BatchNormalization);
      mModel.Add( layers.LeakyReLU);

      mModel.Add( layers.Reshape(TFShape.Create([7, 7, 256]))) ;
      Assert(mModel.OutputShape = TFShape.Create([-1, 7, 7, 256]));

      mModel.Add( layers.Conv2DTranspose(128, TFShape.Create([5, 5]), TFShape.Create([1, 1]), 'same', {data_format}'', {dilation_rate}nil, {activation}'relu', False));
      Assert(mModel.OutputShape = TFShape.Create([-1, 7, 7, 128]));
      mModel.Add( layers.BatchNormalization);
      mModel.Add( layers.LeakyReLU);

      mModel.Add( layers.Conv2DTranspose(64, TFShape.Create([5, 5]), TFShape.Create([2, 2]), 'same', {data_format}'', {dilation_rate}nil, {activation}'relu', False));
      Assert(mModel.OutputShape = TFShape.Create([-1, 14, 14, 64]));
      mModel.Add( layers.BatchNormalization);
      mModel.Add( layers.LeakyReLU);

      mModel.Add( layers.Conv2DTranspose(1, TFShape.Create([5, 5]), TFShape.Create([2, 2]), 'same', {data_format}'', {dilation_rate}nil, {activation}'tanh', False));
      Assert(mModel.OutputShape = TFShape.Create([-1, 28, 28, 1]));

      mModel.summary;
      Result := mModel;
    end;

    function TMnistGAN.Make_Discriminator_model: Model;
    begin
      var model := TKerasApi.keras.Sequential(nil,'DISCRIMINATOR');

      model.OnEpochBegin := On_Epoch_Begin;
      model.OnTrainBatchBegin := On_Train_Batch_Begin;
      model.OnEndSummary := On_End_Summary;
      model.OnTestBatchBegin := On_Train_Batch_Begin;

      model.Add( layers.Input(img_shape).first );

      model.add(layers.Conv2D(64, TFShape.Create([5, 5]), TFShape.Create([2, 2]), 'same'));
      model.add(layers.LeakyReLU);
      model.add(layers.Dropout(0.3));

      model.add(layers.Conv2D(128, TFShape.Create([5, 5]), TFShape.Create([2, 2]), 'same'));
      model.add(layers.LeakyReLU);
      model.add(layers.Dropout(0.3)) ;

      model.add(layers.Flatten) ;
      model.add(layers.Dense(1));

      model.summary;
      Result := model;
    end;

https://github.com/Pigrecos/TensorFlow.Delphi

TensorFlow.Delphi provides a Delphi (Pascal) Standard binding for TensorFlow. It aims to implement the complete TensorFlow API in Delphi, allowing Pascal developers to develop, train and deploy machine learning models in Delphi (a port to Free Pascal is planned for the future).

Note: This is a work in progress; please treat it as such. Pull requests are welcome.

https://github.com/Pigrecos/Keras4Delphi/tree/master/src/NumPy

My partial conversion includes something for this.

Hello everybody,

These are some projects I have created.

I have very little time to manage them all continuously, so if anyone wants to collaborate or make pull requests, they are welcome.

Keras4Delphi is a high-level neural networks API, written in Pascal (Delphi Rio 10.3) with Python bindings and capable of running on top of TensorFlow, CNTK, or Theano. It is based on Keras.NET and Keras.

https://github.com/Pigrecos/Keras4Delphi

Binary code generator written in Pascal. It can generate native code for the x86 and x64 architectures and supports the whole x86/x64 instruction set. The assembly code generator can compile a single file or a single asm command.

https://github.com/Pigrecos/D_CodeGen

Symbolic execution with Delphi: an experimental Delphi binding for the Triton Dynamic Binary Analysis (DBA) framework by JonathanSalwan.

https://github.com/Pigrecos/Triton4Delphi

Code Deobfuscator x86_32/64

Dead code removal

Peephole optimization

Remove multibranch protection

...and more

https://github.com/Pigrecos/CodeDeobfuscator

And others...

Thanks a lot 😉

Library for modifying windows PE files?

in General Help

Posted · Edited by PingPIng

original source:

https://github.com/vdisasm/pe-image-for-delphi

license

https://github.com/vdisasm/pe-image-for-delphi/blob/master/LICENSE

........!!!!......!!!!