Emanuel Silva


Hello guys!

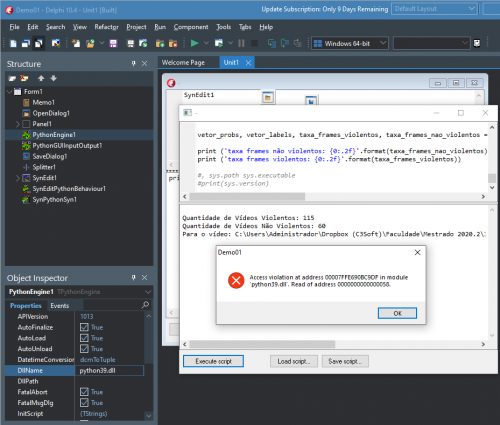

I'm using Delphi 10.4.2 and Python4Delphi with Python 3.9.2 (Win64).

I get the error "Access Violation at address in module python39.dll".

The error persists whether I set

PythonEngine.DllName = python39.dll

or

PythonEngine.DllName = C:\Users\Administrator\AppData\Local\Programs\Python\Python39
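A side note on the two settings above, offered as a hedged sketch rather than a confirmed fix: in Python4Delphi, DllName is meant to hold only the file name (python39.dll), while the folder goes into the separate DllPath property. As far as I know, when UseLastKnownVersion is True the engine tries its own list of known Python versions and may ignore DllName. The FormCreate handler below is hypothetical (it is not part of the form in this post) and assumes PythonEngine1.AutoLoad has been set to False in the Object Inspector, because with AutoLoad = True the DLL is already loaded before OnCreate fires.

```delphi
// Hypothetical OnCreate handler for the TForm1 in this post; it must also be
// declared in the form class and assigned to Form1.OnCreate.
// Assumption: PythonEngine1.AutoLoad = False (set in the Object Inspector).
procedure TForm1.FormCreate(Sender: TObject);
begin
  PythonEngine1.UseLastKnownVersion := False; // use the DllName set below
  // Folder only, no file name
  PythonEngine1.DllPath :=
    'C:\Users\Administrator\AppData\Local\Programs\Python\Python39';
  PythonEngine1.DllName := 'python39.dll';    // file name only, no folder
  PythonEngine1.LoadDll;                      // load python39.dll from DllPath
end;
```

This only controls which DLL gets loaded; it does not by itself change how that DLL behaves once loaded.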

Curiously, the same Python code runs fine in VS Studio.

Can anybody help me? Thanks!

My Delphi code:

```delphi
unit Unit1;

interface

uses
  Classes, SysUtils, Windows, Messages, Graphics, Controls, Forms, Dialogs,
  StdCtrls, ComCtrls, ExtCtrls, PythonEngine, Vcl.PythonGUIInputOutput,
  SynEditHighlighter, SynEditCodeFolding, SynHighlighterPython,
  SynEditPythonBehaviour, SynEdit;

type
  TForm1 = class(TForm)
    Memo1: TMemo;
    Panel1: TPanel;
    Button1: TButton;
    Splitter1: TSplitter;
    Button2: TButton;
    Button3: TButton;
    OpenDialog1: TOpenDialog;
    SaveDialog1: TSaveDialog;
    PythonGUIInputOutput1: TPythonGUIInputOutput;
    SynEdit1: TSynEdit;
    SynEditPythonBehaviour1: TSynEditPythonBehaviour;
    SynPythonSyn1: TSynPythonSyn;
    PythonEngine1: TPythonEngine;
    procedure Button1Click(Sender: TObject);
    procedure Button2Click(Sender: TObject);
    procedure Button3Click(Sender: TObject);
  private
    { Private declarations }
  public
    { Public declarations }
  end;

var
  Form1: TForm1;

implementation

{$R *.DFM}

procedure TForm1.Button1Click(Sender: TObject);
begin
  // Run the Python script typed into the SynEdit editor
  GetPythonEngine.ExecString(UTF8Encode(SynEdit1.Text));
end;

procedure TForm1.Button2Click(Sender: TObject);
begin
  // Let the user pick a file and load it into Memo1
  if OpenDialog1.Execute then
    Memo1.Lines.LoadFromFile(OpenDialog1.FileName);
end;
```

My Python code:

```python
import sysconfig
import sys
import cv2
import pathlib
import os
import numpy as np
import matplotlib.pyplot as plt
from PIL import Image, ImageDraw
import random
import shutil
import zipfile
from tqdm import tqdm
import torch
import torchvision
from torch.utils.data import DataLoader, Dataset
from torchvision.datasets import ImageFolder
from torchvision import transforms
from time import time
from torch.utils.data import random_split

# Load the trained model (saved as a whole model) onto the CPU
caminho_do_modelo_no_google_drive = r'C:\Users\300.pt'
model = torch.load(caminho_do_modelo_no_google_drive, map_location='cpu')

# Video lists: cam2 = violent, cam1 = non-violent
lista_violento = list(pathlib.Path(r'C:\cam2').glob('*'))
lista_nao_violento = list(pathlib.Path(r'C:\cam1').glob('*'))
print('Number of violent videos:', len(lista_violento))
print('Number of non-violent videos:', len(lista_nao_violento))

# Resize, convert to tensor and apply ImageNet mean/std normalization
transformer = transforms.Compose([
    transforms.Resize(150),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
])

vetor_classes = ['non-violent', 'violent']


def retirar_frame_a_frame_e_fazer_predicoes_e_plotar(path_video, y_true):
    """Classify every frame of a video and plot 9 random frames."""
    violent_count = 0
    non_violent_count = 0
    contador = 0  # number of frames read
    vetor_probs = []
    vetor_labels = []
    vetor_imagens = []
    cap = cv2.VideoCapture(path_video)
    while cap.isOpened():
        ret, frame = cap.read()
        if not ret:
            break
        img = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)  # OpenCV frames are BGR
        img_pil = Image.fromarray(img)
        vetor_imagens.append(img_pil)
        img_tensor = transformer(img_pil)
        img_tensor.unsqueeze_(0)  # add the batch dimension
        with torch.no_grad():
            resultado = model(img_tensor)
        probs = torch.exp(resultado)  # assumes the model outputs log-probabilities
        probs = probs.max(1)  # (highest probability, predicted class index)
        probabilidade = probs[0].detach().numpy()[0]
        vetor_probs.append(probabilidade)
        label_predita = probs[1].detach().numpy()[0]
        vetor_labels.append(label_predita)
        contador += 1
        if label_predita == 1:
            violent_count += 1
        else:
            non_violent_count += 1
    cap.release()
    if contador == 0:
        raise ValueError('No frames could be read from ' + path_video)
    taxa_frames_violentos = 100 * violent_count / contador
    taxa_frames_nao_violentos = 100 * non_violent_count / contador
    plt.figure(figsize=(15, 10))
    for k in range(9):
        plt.subplot(3, 3, k + 1)
        n = random.randint(0, len(vetor_probs) - 1)
        img = vetor_imagens[n]
        label = vetor_labels[n]
        plt.imshow(img)
        plt.xticks([])
        plt.yticks([])
        plt.title('True: {0}\nPredicted: {1}'.format(vetor_classes[y_true], vetor_classes[label]))
    plt.show()
    return np.array(vetor_probs), np.array(vetor_labels), taxa_frames_violentos, taxa_frames_nao_violentos


model.to('cpu')
model.eval()

video = str(random.choice(lista_nao_violento))
print('For video: ' + video)
# y_true = 0 ('non-violent'), because the video comes from lista_nao_violento
vetor_probs, vetor_labels, taxa_frames_violentos, taxa_frames_nao_violentos = \
    retirar_frame_a_frame_e_fazer_predicoes_e_plotar(video, 0)
print('Non-violent frames (%): {0:.2f}'.format(taxa_frames_nao_violentos))
print('Violent frames (%): {0:.2f}'.format(taxa_frames_violentos))
# print(sys.path, sys.executable)
# print(sys.version)
```


Access Violation python39.dll

in Python4Delphi


Thank you very much for your attention!

It worked after applying MaskFPUExceptions, as described here:

https://github.com/pyscripter/python4delphi/wiki/MaskFPUExceptions
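For anyone hitting the same error: the wiki page linked above recommends calling MaskFPUExceptions(True), declared in the PythonEngine unit, before the Python DLL is loaded. Delphi leaves some floating-point exceptions (invalid operation, division by zero, overflow) unmasked, while C extensions such as numpy and torch typically expect them masked. Below is a minimal sketch of a project file for the Unit1/TForm1 form in the question; the project name Project1 is an assumption, and it also assumes PythonEngine1.AutoLoad is left at its default True, so python39.dll is loaded while the form is being created.

```delphi
program Project1;

uses
  Vcl.Forms,
  PythonEngine, // Python4Delphi unit that declares MaskFPUExceptions
  Unit1 in 'Unit1.pas' {Form1};

{$R *.res}

begin
  // Mask floating-point exceptions before any Python code runs.
  // This must come before CreateForm: PythonEngine1 (AutoLoad = True)
  // loads python39.dll while TForm1 is streamed from its .dfm.
  MaskFPUExceptions(True);

  Application.Initialize;
  Application.MainFormOnTaskbar := True;
  Application.CreateForm(TForm1, Form1);
  Application.Run;
end.
```

Putting the same call in an initialization section of Unit1 should work as well, since unit initialization code runs before the program body reaches CreateForm.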