Posts posted by Anders Melander

-

3 hours ago, David Schwartz said: I think in pictures, and I've never seen any good illustrations about what goes on when you're working in git.

First of all: Get a GUI Git client. I rarely use the command line during my daily work.

I came from Svn and found the transition to Git very confusing because the terminology is different and because you're basically working with two repositories (the local and the one on the server) at once.

What Git server do you use?

4 hours ago, David Schwartz said: I don't understand what's meant by "use pull requests to merge into Master".

A pull request is just a request to merge a branch into another. The "pull" is from the perspective of the target so it's a request that the target pull the supplied changes.

I'm guessing the way you work now is something like this:

- Create a local branch.

- Work on the files in the branch.

- Commit changes to the branch.

- Merge the branch into Master.

- Push Master to the server.

With a pull request you would work like this:

- Create a branch (either locally or on the server, doesn't matter).

- Work on the files in the branch.

- Commit changes to the branch.

- Push the branch to the server.

- Create a pull request on the server.

- Review the pull request on the server (should be done by someone else). Accept or Reject the pull request.

- Apply the pull request on the server thereby merging the branch into Master.
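On the command line, the pull request workflow could look roughly like the sketch below. It is only an illustration: a throwaway bare repository stands in for the server, and the branch name `feature/example`, the file `app.txt` and the identity values are made up. Creating, reviewing and merging the pull request happens in the server's web UI, so those steps appear only as comments.

```shell
#!/bin/sh
set -eu

# Hypothetical identity so the demo commits work on any machine.
export GIT_AUTHOR_NAME="Example Dev" GIT_AUTHOR_EMAIL="dev@example.invalid"
export GIT_COMMITTER_NAME="$GIT_AUTHOR_NAME" GIT_COMMITTER_EMAIL="$GIT_AUTHOR_EMAIL"

# Sandbox: a bare repository plays the role of the Git server.
sandbox=$(mktemp -d)
git init --quiet --bare "$sandbox/server.git"
git -C "$sandbox/server.git" symbolic-ref HEAD refs/heads/master

# A local working copy with an initial commit on master.
git init --quiet "$sandbox/work"
cd "$sandbox/work"
git symbolic-ref HEAD refs/heads/master
git remote add origin "$sandbox/server.git"
echo "base" > app.txt
git add app.txt
git commit --quiet -m "Initial commit"
git push --quiet -u origin master

# 1. Create a branch.
git checkout --quiet -b feature/example

# 2+3. Work on the files and commit the changes to the branch.
echo "change" >> app.txt
git commit --quiet -am "Describe the change"

# 4. Push the branch to the server.
git push --quiet -u origin feature/example

# 5-7. Create, review and apply the pull request in the server's web UI.
#      Nothing is merged into master locally.

# Afterwards, bring the local master up to date with the server.
git checkout --quiet master
git pull --quiet --ff-only origin master
```

The point to notice is that master is never merged into or pushed from the local machine; it only changes on the server when the pull request is applied.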

4 hours ago, David Schwartz said: Nobody likes that we do work in one folder and git is constantly shuffling around files in other folders, and throwing up conflicts because someone else is in the middle of doing some work that has nothing to do with what you're working on

I'm still not sure I understand the problem but I'll try with a solution anyway.

Let's say your main project is in the Projects repository and that you have this mapped locally to C:\Project.

Your client data is in the Clients repository and you have this mapped to I:\.

Within the Projects repository you have a branch for each client and the same for the Clients repository.

The Master branch of each repository contains the changes that are common to all clients, and the individual client branches contain the client-specific stuff.

When you make changes to Master you will have to merge that back into each of the client branches to keep them up to date. You can create a script that automates this task.
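Such a script could be as small as the sketch below. It assumes the client branches are named `client/<name>` (an invented naming scheme, adjust the pattern to whatever you use) and that the working copy is clean when it runs. On a conflict it aborts that merge and stops so the conflict can be sorted out by hand.

```shell
#!/bin/sh
# Merge master into every local branch named client/* (hypothetical naming).
# Usage: merge_master_into_clients /path/to/working/copy
merge_master_into_clients() {
    repo=$1
    # List the short names of all local branches below client/.
    branches=$(git -C "$repo" for-each-ref --format='%(refname:short)' 'refs/heads/client/*')
    for branch in $branches; do
        echo "Merging master into $branch"
        git -C "$repo" checkout --quiet "$branch" || return 1
        if ! git -C "$repo" merge --quiet --no-edit master; then
            echo "Conflict in $branch: aborting this merge, resolve it manually" >&2
            git -C "$repo" merge --abort
            return 1
        fi
    done
    # Leave the working copy on master again.
    git -C "$repo" checkout --quiet master
}
```

Pushing the updated client branches to the server is left out on purpose; whether that should happen automatically depends on who is allowed to push what.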

Now if you make Clients a submodule of Projects then you can make the individual project branches track the corresponding branch in Clients. This means that when you check out a Projects branch then the corresponding Clients branch will automatically be checked out as well so your I:\ data will contain the correct files.
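A rough sketch of that setup, using throwaway local repositories in place of the real ones. The branch name `client-a`, the file names and the identity are invented for the example. Two caveats: a submodule lives in a subfolder of the Projects working copy (here `Clients`), so getting it to show up as I:\ is a separate matter, and Git only updates the submodule on a branch switch when `submodule.recurse` is enabled (or `--recurse-submodules` is passed). The `protocol.file.allow=always` override is only there because the demo clones from a local path.

```shell
#!/bin/sh
set -eu

# Hypothetical identity so the demo commits work on any machine.
export GIT_AUTHOR_NAME="Example Dev" GIT_AUTHOR_EMAIL="dev@example.invalid"
export GIT_COMMITTER_NAME="$GIT_AUTHOR_NAME" GIT_COMMITTER_EMAIL="$GIT_AUTHOR_EMAIL"
sandbox=$(mktemp -d)

# Clients repository: master holds the common data, client-a the client data.
git init --quiet "$sandbox/Clients"
cd "$sandbox/Clients"
git symbolic-ref HEAD refs/heads/master
echo "common data" > data.txt
git add data.txt
git commit --quiet -m "Common client data"
git checkout --quiet -b client-a
echo "client A data" > data.txt
git commit --quiet -am "Client A data"
git checkout --quiet master

# Projects repository: add Clients as a submodule tracking its master branch.
git init --quiet "$sandbox/Projects"
cd "$sandbox/Projects"
git symbolic-ref HEAD refs/heads/master
echo "program Demo;" > Demo.dpr
git add Demo.dpr
git commit --quiet -m "Initial commit"
git -c protocol.file.allow=always submodule --quiet add -b master "$sandbox/Clients" Clients
git commit --quiet -m "Add Clients as a submodule"

# On the client-a branch of Projects, track the client-a branch of Clients.
git checkout --quiet -b client-a
git config -f .gitmodules submodule.Clients.branch client-a
git -c protocol.file.allow=always submodule --quiet update --remote Clients
git commit --quiet -am "Track the client-a branch of Clients"

# Make checkout update the submodule working tree as well.
git config submodule.recurse true
git checkout --quiet master     # Clients/data.txt should hold the common data
git checkout --quiet client-a   # Clients/data.txt should hold the client A data
```

Note that the submodule ends up on a detached HEAD at the commit recorded by the Projects branch, so after new commits in Clients the Projects branch has to be updated (`git submodule update --remote` followed by a commit) to pick them up.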

-

6 hours ago, pyscripter said: However I got an error message saying:

When did you get that message? On package install?

I don't have 10.4.1 installed at the moment (tested the uninstaller yesterday and haven't had time to install again) so I can't try myself.

-

I can't see anything in what you describe that is out of the ordinary. Looks like a pretty standard workflow to me.

One thing I would do is make sure that very few people have rights to push to Master or whatever you call your primary branch. Instead use pull requests to merge into Master. This avoids the situation where someone forces a push and rewrites history and then later claims that "git must have messed something up".

I'm not sure what to do about your I: drive if you really want to have all the files there at all times, regardless of the branch you're working on. If you're okay with pulling the files for a given branch to I: when you work on that branch, then you could reference your I: repository as a submodule in your main Git project. Then each customer could get their own branch in the I: repository.

HTH

-

1 hour ago, ntavendale said: Strangely though, they left Classic Undocked as an option in the drop down used to select the layout, both in the Min IDE form and in the options

I believe this is mentioned in the release notes:

Quote: Desktop layouts which cannot be applied (i.e. are floating layouts, no longer supported, see above) will be listed in gray in the Desktop Layout combo box in the title bar. When a floating layout is applied, the IDE will instead show a dialog.

-

1 minute ago, Sherlock said: Yes. It has been this way for some time now. I just trusted them to stick to that plan.

Well that's what I get for actually reading the installation instructions.

-

1 minute ago, Sherlock said: It's on the very first dialogue.

I'm positive I didn't get that one.

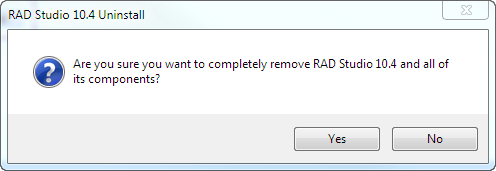

I just now tried running the 10.4.1 uninstaller to see if that dialog would appear. First I get an UAC warning because the uninstaller is unsigned. Then I get this one:

And clicking on Yes starts the uninstall so now I have to install again. Damned!

Was I meant to just run the 10.4.1 installer and have that perform the uninstall?

Here's what the 10.4.1 release notes say:

Quote: If you have already installed 10.4 Sydney (May 26th, 2020), installing 10.4 Sydney - Release 1 requires a full uninstall and reinstall. As part of the uninstall process, you will see an option for preserving your settings. This is enabled by default.

Strictly speaking, the first sentence states that I should uninstall 10.4 and then install 10.4 again, but I'm assuming that wasn't what they meant.

-

21 minutes ago, dummzeuch said: EDIT: Apparently the option to install to a custom path is gone.

No. It's just very easy to miss. Bad installer UI.

23 minutes ago, dummzeuch said: The Web-Installer installed to c:\program files (x86) even though my 10.4 installation was somewhere else.

The 10.4.1 release notes stated that there would be an option in the 10.4 uninstaller to keep the settings, but I didn't see any.

-

59 minutes ago, Sonjli said: I DO use COM events... so, should I change COINIT_MULTITHREADED in COINIT_APARTMENTTHREADED?

It depends. If you are sure that your event handlers are thread safe then COINIT_MULTITHREADED is fine. Otherwise change it to COINIT_APARTMENTTHREADED.

1 hour ago, Sonjli said: Can this make server unstable?

Well, if your code isn't thread safe then anything can happen, but I think it's more likely that the missing CoUninitialize is the cause.

-

2 hours ago, David Heffernan said: Lightweight MREW sounds useful. One does wonder if it works

I just checked the source: On Windows it's just a wrapper around the Windows SRW lock.

https://docs.microsoft.com/en-us/windows/win32/sync/slim-reader-writer--srw--locks

-

There's no need to post the issues that weren't fixed when we have a list of those that (allegedly) were.

-

6 minutes ago, Lars Fosdal said: I wonder what kind of effect that has on f.x. FireDAC?

CoInitialize etc. only affects the calling thread. If you access FireDAC from a thread, and you have called CoInitialize on that thread, then any inbound COM calls will be executed on that thread (i.e. single-threaded apartment, a.k.a. apartment threaded).

I'm guessing you are only doing outbound COM so the threading model shouldn't matter.

-

38 minutes ago, Mike Torrettinni said: I was able to run demo exe.

And now demo.exe is running you

-

6 minutes ago, Lars Fosdal said: I checked our sources. We normally never use CoInitializeEx, explicitly, just CoInitialize, but I guess that depends on the requirements of the OLE control.

https://docs.microsoft.com/en-us/windows/win32/api/objbase/nf-objbase-coinitialize

Quote: CoInitialize ... Initializes the COM library on the current thread and identifies the concurrency model as single-thread apartment (STA).

New applications should call CoInitializeEx instead of CoInitialize.

And the documentation for CoInitializeEx states:

Quote: Because OLE technologies are not thread-safe, the OleInitialize function calls CoInitializeEx with the COINIT_APARTMENTTHREADED flag. As a result, an apartment that is initialized for multithreaded object concurrency cannot use the features enabled by OleInitialize.

-

36 minutes ago, Lars Fosdal said: Seriously...

Why is that man trying to swallow an invisible shoe?

-

8 minutes ago, FPiette said: CoInitializeEx should be called only once. Better place is at program startup.

It's a service application so the COM stuff is presumably done in a thread. The CoInitializeEx/CoUninitialize should be done in the thread.

Apart from it being inefficient there's nothing wrong with looping and wrapping the server interaction with local calls to CoInitializeEx/CoUninitialize inside the loop. Outside the loop would be much more efficient of course but it might be a good idea to start again with a fresh, clean COM environment after the server has disconnected the client.

-

34 minutes ago, Sonjli said: How does the server disconnect the client?

- Mystery. It's a third party server. Poor documentation

It's probably using CoDisconnectObject

-

Okay. Let's assume that the problem lies with the client.

Apartment threading just means that incoming COM calls are executed, one at a time, on the thread that initialized the apartment. This is like using TThread.Synchronize to ensure that code that isn't thread safe is executed in the context of the main thread. If your code is thread safe or if you are sure that you're not using callbacks (e.g. COM events) then COINIT_MULTITHREADED is probably fine.

The missing CoUninitialize will affect the client but assuming that you clear all references to server interfaces on disconnect then I can't see how that would affect the server.

So your code logic should go something like this:

    CoInitializeEx(...);
    try
      Server := ConnectToServer;
      try
        Server.DoStuffWithServer;
      finally
        Server := nil;
      end;
    finally
      CoUninitialize;
    end;

-

14 minutes ago, Lars Fosdal said: CoInit/CoUnInit should run only once per thread.

https://docs.microsoft.com/en-us/windows/win32/api/combaseapi/nf-combaseapi-coinitializeex

Quote: CoInitializeEx must be called at least once, and is usually called only once, for each thread that uses the COM library. Multiple calls to CoInitializeEx by the same thread are allowed as long as they pass the same concurrency flag, but subsequent valid calls return S_FALSE. To close the COM library gracefully on a thread, each successful call to CoInitialize or CoInitializeEx, including any call that returns S_FALSE, must be balanced by a corresponding call to CoUninitialize.

Anyway, that's on the client and it shouldn't affect the server.

-

2 minutes ago, Dalija Prasnikar said: Not really. This is mostly opinion based question unless there is exact, very narrow problem you need to solve.

Maybe. Since the OP hasn't really stated what his problem is I can't tell for sure. It sounds like he's asking about adapting Git to his workflow or vice versa, which I think is a topic suitable for SO. It depends on how you phrase your question.

Regardless there's a good chance he would be told to ask a proper question if he tried SO - or maybe just downvoted but that's also kinda an answer.

-

I think you need to post some more code and some more details.

- Does the OleCheck ever raise an exception? If so how do you handle this exception?

- Are you sure that you shouldn't be using COINIT_APARTMENTTHREADED instead?

- Are you sure there's a CoUninitialize for every CoInitializeEx?

- How does the server disconnect the client?

- Have you tried debugging the server to determine what it's doing when it "hangs"?

-

10 minutes ago, David Schwartz said: The stuff tends to fail early within 90 days; or it starts dying at around it's projected MTBF. But fully half of it will last 3-5x it's rated MTBF. That's statistics for you.

Are you aware what the M in MTBF stands for?

And it's not the statisticians that are making these claims we are talking about. It's the companies producing the devices, based on their tests, and it's the people that have the experience to back the claims. And yes, they're "experts". Because they know what they're talking about. Like Wozniak. Unlike Jobs.

-

4 minutes ago, aehimself said: physically remove and transport disks between houses on a regular basis for years

I'm doing incremental backup and I rotate media every one or two weeks so it's not that bad. The "other house" is right next to the main building and the cabinet is in my workshop, right next to where I keep the beer, so it's nice to have an excuse to "go file the backup".

-

8 minutes ago, Bill Meyer said: Thermal wear, yes, but not because of power cycles, which are indeed another stress.

What I meant was wear from thermal expansion and contraction.

There are lots of other factors to consider but it's kinda pointless to explain in detail when you're up against SDs and Raspberry Pis.

-

15 minutes ago, aehimself said: That is not possible. How are you writing data on a media, which is not physically in a drive?

Again, I am strictly talking about home-use "hacky" but safe-enough solutions.

I of course meant that the two should be separate after the backup has been made. Not during.

At home I'm doing rotating backups to disk. The disks are stored in a non-fireproof cabinet in another building. I'm betting that both buildings don't burn down (or are towed away by "burglars") at the same time. I live in a thatched house so it's not like I'm fireproof in any way.


10.4.1 Update (in General Help)

I would suggest you do all these tests without 3rd party add-ons involved. It's hard to know where to place the blame otherwise.