Posts by Anders Melander

48 minutes ago, David Schwartz said: Well, I guess it's a matter of perspective.

Sure.

If you want reliable backups, use media that has been designed for that purpose. Floppies, writable CDs and DVDs, cheap HDDs, SSDs and SD cards aren't reliable.

I have TK-50 DLT tapes from the late eighties that can still be read (each tape holds a whopping 94 MB). The tape format is standard and the drive is SCSI, so it's no problem finding a way to read them, and the data is still there. Try that with any other storage type after thirty years (stone tablets and punch cards excepted).

53 minutes ago, David Schwartz said: Whatever you might think of SDs, I guarantee they won't decompose the way old magnetic media does over time.

I think it's pretty irresponsible to make a statement like that. You can't guarantee anything like that, and I'm pretty sure you won't take financial responsibility when your guarantee turns out to be false. The companies that make these devices don't even make such claims. It is known that SSDs and SD cards degrade over time; their good MTBF figures only apply while they are new.

-

14 minutes ago, Mr. Daniel said: I manage to install SynEdit as 32 bit build (thank you all for that), but I don't get the Install option when I build with 64 bit target platform.

Design-time packages are always 32-bit, since the Delphi IDE is a 32-bit application.

You don't need the packages installed to build. You only need them installed for design-time support, e.g. to drop SynEdit controls and components on forms, set their properties at design time, etc.

-

9 hours ago, David Schwartz said: Spinning HDDs are <5 yrs, esp. if they're in a NAS that runs continuously.

Oh, and another reason why this is wrong is that it's better for HDDs to run continuously. It's the power cycles that kill them (thermal wear).

-

On 8/29/2020 at 5:44 PM, pyscripter said: If you care about speed forget about TXMLDocument

If you care about speed, use a SAX parser instead. The DOM model has different priorities.

-

21 hours ago, FPiette said: Are you using RAID? Most today's mainboards have a basic RAID controller and if yours don't have one, buy one

It's a misconception that RAID is a means to better data security. RAID is a means to better performance or higher availability.

8 hours ago, David Schwartz said: Spinning HDDs are <5 yrs, esp. if they're in a NAS that runs continuously.

If you buy cheap drives or cheap NAS with cheap drives in them then you get what you pay for. No surprise there really.

The HDDs in my system are 16 years old and some of them have been running almost continuously. They're Western Digital WD5000YS drives with an MTBF of 137 years. I have two drives mounted internally and five drives in a hot-swap rack on the front for use as backup storage. Of course I also have a couple of SSDs for the performance-critical stuff.

HDDs are more reliable than SSDs and better suited for long-term archival. A brand-new SSD might have a better MTBF than an HDD, but this changes dramatically as the drive is used. After only a few years the SSD will have deteriorated by an order of magnitude, leaving it with a much worse MTBF than the HDD.
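To put the 137-year figure in perspective, here is a rough conversion. It assumes the datasheet value is about 1.2 million hours. It also assumes a constant failure rate, which is the exponential model usually behind MTBF figures. On those assumptions, the MTBF translates into an annualized failure rate (AFR) for a single drive in service:

$$\text{MTBF} \approx 137\ \text{years} \times 8760\ \tfrac{\text{h}}{\text{year}} \approx 1.2 \times 10^{6}\ \text{h}$$

$$\text{AFR} = 1 - e^{-8760/\text{MTBF}} \approx 1 - e^{-0.0073} \approx 0.73\%$$

In other words, MTBF is a population statistic. It says that roughly 0.7% of such drives would be expected to fail per year within their rated service life. It does not predict that an individual drive will last 137 years.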

8 hours ago, David Schwartz said: Honestly, the price of SD cards is getting so low that you could make rotating backups just from a handful of them. I see Walmart is advertising 64GB Class 10 microSDs for $5. I see an outfit named Wish.com that's selling 1TB Class 10 UHS-1 TF microSDs for $7.64. SD cards aren't nearly the speed of an SSD like Samsung T5's but for backing up source files on a daily basis I don't think the speed differences would even be noticeable.

So you're good with relying on the cheapest available devices to save you when your primary storage fails? Interesting.

Personally I would prefer a reliable backup medium so that I could afford to use faster, but less reliable, primary storage devices.

-

1 hour ago, Mike Torrettinni said: This seems to be the winner, so far.

Good choice. Keep it simple.

-

It's trivial to implement and then you get it just like you want it.

-

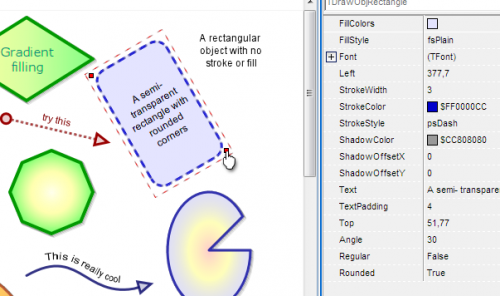

Instead of reinventing the wheel, why not try one of the many existing solutions?

For example Graphics32 or Image32. Here's a shot of an old demo of the GR32_Objects unit:

and here's one of a similar Image32 demo:

-

2 hours ago, aehimself said:

```pascal
zip.Read(0, zipstream, header);
Try
  zip.Close;
  SetLength(Result, zipstream.Size);
  zipstream.Read(Result, zipstream.Size);
Finally
  FreeAndNil(zipstream);
End;
```

...or the shorter version (yes, I know you said it):

```pascal
zip.Read(0, Result);
```

-

55 minutes ago, John Terwiske said: It's about 6% slower vs. the third party solution I'm currently using, and this matters with these files that are in the 2Gbyte range.

One thing you can do to make it a bit faster is to read directly from the decompression stream (SourceStream in my example) instead of copying it to a memory stream. AFAIR the decompression stream buffers internally and is bidirectional so you should be able to treat it as a memory stream.
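Below is a minimal sketch of that idea. It assumes the data only needs a single forward pass. Instead of `CopyFrom` into a `TMemoryStream`, it reads the stream returned by `TZipFile.Read` in fixed-size chunks. `ProcessMember`, the 64 KB buffer size and the console wrapper are hypothetical. The sketch does not rely on the decompression stream being seekable.

```pascal
program ReadMember;

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.Classes, System.Zip;

// Hypothetical helper: streams one archive member straight from the
// decompression stream in 64 KB chunks, with no intermediate TMemoryStream.
// Returns the number of decompressed bytes seen.
function ProcessMember(const ArchiveName, MemberName: string): Int64;
var
  ZipFile: TZipFile;
  SourceStream: TStream;
  LocalHeader: TZipHeader;
  Buffer: TBytes;
  BytesRead: Integer;
begin
  Result := 0;
  SetLength(Buffer, 64 * 1024);
  ZipFile := TZipFile.Create;
  try
    ZipFile.Open(ArchiveName, zmRead);
    // Read returns a new decompression stream that the caller must free
    ZipFile.Read(MemberName, SourceStream, LocalHeader);
    try
      repeat
        BytesRead := SourceStream.Read(Buffer[0], Length(Buffer));
        // ...process Buffer[0..BytesRead-1] here...
        Inc(Result, BytesRead);
      until BytesRead = 0;
    finally
      SourceStream.Free;
    end;
  finally
    ZipFile.Free;
  end;
end;

begin
  if ParamCount < 2 then
  begin
    Writeln('Usage: ReadMember <archive.zip> <member>');
    Halt(1);
  end;
  Writeln(ProcessMember(ParamStr(1), ParamStr(2)), ' bytes decompressed');
end.
```

The chunked loop keeps memory use constant regardless of member size, which matters for 2 GB files. Whether it is measurably faster than `CopyFrom` depends on the Delphi version's `TZDecompressionStream` buffering.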

-

35 minutes ago, Javier Tarí said: The easiest way to check if a package can be loaded, is just loading it

That only resolves inter-package dependencies. Not application dependencies.

-

15 minutes ago, aehimself said: FreeAndNil

Tsk, tsk.

-

12 minutes ago, John Terwiske said: I don't see how this extracts a single file to a stream of some sort

Something like this:

```pascal
// Assumes System.Classes and System.Zip are in the uses clause
var
  ZipFile: TZipFile;
  SourceStream: TStream;
  TargetStream: TStream;
  LocalHeader: TZipHeader;
begin
  // ...
  TargetStream := TMemoryStream.Create;
  try
    // Read returns a new decompression stream that the caller owns
    ZipFile.Read('foobar.dat', SourceStream, LocalHeader);
    try
      TargetStream.CopyFrom(SourceStream, 0);
    finally
      SourceStream.Free;
    end;
    // ...do something with TargetStream...
  finally
    TargetStream.Free;
  end;
end;
```

19 minutes ago, John Terwiske said: at the time as I recall there was a problem with unicode characters in the items within the zip file

There used to be a problem with unicode in comments but I believe that has been fixed.

-

4 minutes ago, aehimself said: and replacing one file inside the ZIP archive.

Yeah, that one is really annoying. I used to have a class helper that added TZipFile.Delete and Remove methods but one of the Delphi versions after XE2 broke that one as the required TZipFile internal data structures are no longer accessible to class helpers.

-

1 hour ago, Lars Fosdal said: Who knows.

Who knows what?

-

21 minutes ago, John Terwiske said: What if there are multiple files within the Zip archive? I need to extract a specific item within a Zip file.

Have you read the help?

- Extract to stream: http://docwiki.embarcadero.com/Libraries/Rio/en/System.Zip.TZipFile.Read

- Extract to file: http://docwiki.embarcadero.com/Libraries/Rio/en/System.Zip.TZipFile.Extract

-

34 minutes ago, Lars Fosdal said: I see that the Rich Edit control has "moved" - not sure if the old wrapper supports 4.1?

I can't see how that is relevant. Just because new control versions are added doesn't mean the old ones stop working.

TRichEdit is a wrapper around the RICHEDIT20W window class (i.e. RICHED20.DLL). Override CreateParams to use another version if that's important.
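Below is a minimal sketch of such a `CreateParams` override. It assumes you want Rich Edit 4.1, which is the `RICHEDIT50W` class in `MSFTEDIT.DLL`. `TRichEdit41` and the demo program around it are hypothetical. The approach relies on the protected `TWinControl.CreateSubClass` method re-pointing the window class after the inherited `TCustomRichEdit.CreateParams` has set up `RICHEDIT20W`. It has not been verified against every VCL version, and newer Delphi releases may already use `MSFTEDIT.DLL`, so check `Vcl.ComCtrls` in your version first.

```pascal
program RichEdit41Demo;

uses
  Winapi.Windows, System.Classes, Vcl.Controls, Vcl.Forms, Vcl.ComCtrls;

type
  // Hypothetical TRichEdit descendant that asks for the Rich Edit 4.1 class
  TRichEdit41 = class(TRichEdit)
  protected
    procedure CreateParams(var Params: TCreateParams); override;
  end;

var
  MsftEditModule: HMODULE = 0;

procedure TRichEdit41.CreateParams(var Params: TCreateParams);
begin
  // TCustomRichEdit.CreateParams loads RICHED20.DLL and subclasses RICHEDIT20W
  inherited CreateParams(Params);
  // Re-point the subclass at RICHEDIT50W when MSFTEDIT.DLL could be loaded
  if MsftEditModule <> 0 then
    CreateSubClass(Params, 'RICHEDIT50W');
end;

var
  Form: TForm;
  Editor: TRichEdit41;
begin
  // Registers the RICHEDIT50W class; deliberately kept loaded for the
  // lifetime of the process so no window outlives its window procedure
  MsftEditModule := LoadLibrary('MSFTEDIT.DLL');
  Application.Initialize;
  Application.CreateForm(TForm, Form);
  Editor := TRichEdit41.Create(Form);
  Editor.Parent := Form;
  Editor.Align := alClient;
  Editor.Lines.Text := 'Hello from RICHEDIT50W';
  Application.Run;
end.
```

In an existing project, the same override is often placed in an interposer class named `TRichEdit`, declared in a unit listed after `Vcl.ComCtrls`. That way forms pick it up without any change to their DFM files.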

-

3 hours ago, David Schwartz said: I searched in Google for an example of something built with a TRichEdit in Delphi, but didn't have much luck.

If you need an example of what TRichEdit can do just run Wordpad.

If you need examples of how to do it read the help or Google it. There are hundreds of examples. If you have an older version of Delphi installed there's even a richedit example project.

I don't see the problem.

-

1 hour ago, Larry Hengen said: Is there anyway to detect interface breaking changes automatically

I would think that the compiler would do that for you - i.e. give you a compile or linker error.

I must admit that my experience with run-time packages is a couple of decades old, but AFAIR the dcu files of the packages are stored in a dcp file. If the linker uses the dcp file instead of the dcu files, then an interface change should result in a linker error. I might of course be remembering this completely wrong.

1 hour ago, Larry Hengen said: Is there anyway to detect interface breaking changes automatically (that it could be put on a build server)?

Since run-time packages are just statically linked DLLs, I believe you just need to determine whether all DLL dependencies can be resolved. It would be trivial to create a small utility that loaded your application with LoadLibraryEx and had that do the check, but unfortunately LoadLibraryEx only resolves dependencies when you load DLLs, not executables.

Try Dependency Walker instead. It has a command line mode that you can probably use. I don't know what the output looks like though.

1 hour ago, Larry Hengen said: Any advice on maintaining apps using run-time packages?

Get rid of them (the packages, not the apps).

-

2 minutes ago, TurboMagic said: Would that be correct?

Move isn't the problem in this particular case. The problem is that once you logically remove an entry from the array then you need to finalize that entry in the array to clear the reference. You can do that by assigning Default(T) to the entry.
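Here is a minimal sketch of where the `Default(T)` assignment belongs. It assumes a simple array-backed container. `TSimpleStack<T>` is hypothetical and is not the collection under discussion. When an entry is logically removed, the vacated slot is overwritten with `Default(T)` so the array no longer keeps a string, interface or other managed value alive.

```pascal
program StackClearDemo;

{$APPTYPE CONSOLE}

uses
  System.SysUtils;

type
  // Hypothetical minimal array-backed stack, used only to show where the
  // vacated slot has to be finalized.
  TSimpleStack<T> = class
  private
    FItems: TArray<T>;
    FCount: Integer;
  public
    procedure Push(const Value: T);
    function Pop: T;
    property Count: Integer read FCount;
  end;

procedure TSimpleStack<T>.Push(const Value: T);
begin
  // Grow the backing array when it is full
  if FCount = Length(FItems) then
    SetLength(FItems, FCount * 2 + 4);
  FItems[FCount] := Value;
  Inc(FCount);
end;

function TSimpleStack<T>.Pop: T;
begin
  if FCount = 0 then
    raise EInvalidOpException.Create('Stack is empty');
  Dec(FCount);
  Result := FItems[FCount];
  // The slot is logically free now but still holds a reference (string,
  // interface, dynamic array, ...). Assigning Default(T) releases it; for
  // unmanaged types it is just a harmless reset.
  FItems[FCount] := Default(T);
end;

var
  Stack: TSimpleStack<string>;
begin
  Stack := TSimpleStack<string>.Create;
  try
    Stack.Push('first');
    Stack.Push('second');
    Writeln(Stack.Pop); // prints "second"
    Writeln(Stack.Pop); // prints "first"
  finally
    Stack.Free;
  end;
end.
```

Without the `Default(T)` line, a `TSimpleStack<IInterface>` would keep every popped object alive until its slot happened to be overwritten or the stack was destroyed.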

-

2 minutes ago, TurboMagic said: Why would those have to be cleared?

Because otherwise the buffer will still hold a reference to the T instance. For any managed type this will be a problem.

For example what happens if T is an interface?

Maybe some unit testing is in order...

-

3 hours ago, Stefan Glienke said: Leave implementing generic collections to people experienced with it

...and how did these people get their experience?

-

Can someone explain why this fails to compile with E2029 Expression expected but 'ARRAY' found:

```pascal
begin
  var Stuff: array of integer;
end;
```

while this works:

```pascal
type
  TStuff = array of integer;
begin
  var Stuff: TStuff;
  var MoreStuff: TArray<integer>;
end;
```

-

Securing your data over time

in General Help

And if everything else fails there's always the NSA to fall back on.

https://www.cnet.com/news/guess-what-happened-when-backblaze-tried-using-the-nsa-for-data-backup/

https://o.canada.com/technology/internet/irony-alert-google-labels-nsa-data-centre-backup-service