A.M. Hoornweg

Posts posted by A.M. Hoornweg

-

24 minutes ago, Lajos Juhász said: As a result it cannot be assigned to an event.

Exactly.

-

1 hour ago, dummzeuch said: Does that compile?

Just declare

```pascal
class procedure MyFormShow(Sender: TObject); static;
```

That way the method does not have a hidden "Self" parameter. Having no "Self" parameter turns the class method into a regular procedure inside the namespace tTest (it is no longer a "procedure of object").

Static class methods are unable to access any members or methods that require a "self" parameter.
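A minimal console sketch of the difference (the names TPlainProc, TTest.Plain and TTest.WithSelf are hypothetical). A non-static class method receives the class reference as its hidden Self, so it can be stored in a "procedure of object" type such as TNotifyEvent. The static one has no Self, so it fits a plain procedural type instead and cannot be assigned to an event.

```pascal
program StaticClassMethodDemo;

{$APPTYPE CONSOLE}

uses
  System.Classes; // TNotifyEvent

type
  // Plain procedural type: no hidden Self parameter
  TPlainProc = procedure(Sender: TObject);

  TTest = class
    // static: no hidden Self, acts like a regular procedure in TTest's namespace
    class procedure Plain(Sender: TObject); static;
    // non-static class method: hidden Self is the class reference (TTest)
    class procedure WithSelf(Sender: TObject);
  end;

class procedure TTest.Plain(Sender: TObject);
begin
  Writeln('static class method called');
end;

class procedure TTest.WithSelf(Sender: TObject);
begin
  Writeln('class method called, Self = ', Self.ClassName);
end;

var
  P: TPlainProc;
  E: TNotifyEvent;
begin
  P := TTest.Plain;      // OK: a static class method is a plain procedure
  P(nil);

  E := TTest.WithSelf;   // OK: a non-static class method can act as a method pointer
  E(nil);

  // E := TTest.Plain;   // does not compile: no Self, so it is not a "procedure of object"
end.
```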

-

11 hours ago, Stefan Glienke said: First, it's wrong to assume that all local variables reside on the stack; depending on optimization they might be in registers. Second, rep stosd is terribly slow for small sizes (see https://stackoverflow.com/a/33485055/587106 and also the discussions on this issue https://github.com/dotnet/runtime/issues/10744)

Also, the Delphi compiler arranges managed local variables into one block that it zeroes (in many different and mostly terribly inefficient ways).

I, for one, would consider it an advantage if local variables such as pointers defaulted to NIL; it would make the language just a little bit more memory safe.

-

2 hours ago, Stefan Glienke said: Why should the CPU waste time zeroing stuff that will be set shortly after anyway? Compilers are there to warn/error when any code path leads to a situation where a variable is not initialized.

As far as I know, when an object is created, all its fields are filled with zeros. And for stack variables, all managed types are zeroed on entry to a method.

However, wouldn't it actually save CPU time if Delphi simply wiped the local variable area of the stack on entry to a method (a known, fixed number of bytes; a simple REP STOSD would do) instead of determining which local variables are managed types and then wiping those individually?
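A small sketch of which locals are zeroed today and one manual way to get "wipe everything" semantics. TSample and TLocals are hypothetical; grouping locals in a record and clearing it with the Default() intrinsic is just one option, not something the compiler does for you.

```pascal
program ZeroInitDemo;

{$APPTYPE CONSOLE}

type
  // Instance fields are zero-filled when the object is created
  TSample = class
    Count: Integer;
    Data: Pointer;
    Name: string;
  end;

  // Hypothetical record grouping a method's unmanaged locals
  TLocals = record
    Buffer: Pointer;
    Index: Integer;
    Total: Double;
  end;

function ObjectFieldsAreZero: Boolean;
var
  Obj: TSample;
begin
  Obj := TSample.Create;
  try
    Result := (Obj.Count = 0) and (Obj.Data = nil) and (Obj.Name = '');
  finally
    Obj.Free;
  end;
end;

function ManagedLocalIsEmpty: Boolean;
var
  S: string; // managed type: the compiler initializes it to '' on entry
begin
  Result := S = '';
end;

function LocalsCleared: Boolean;
var
  L: TLocals; // unmanaged fields: contents are undefined until assigned
begin
  L := Default(TLocals); // zero every field at once, so Buffer becomes nil
  Result := (L.Buffer = nil) and (L.Index = 0) and (L.Total = 0);
end;

begin
  Writeln('object fields zeroed: ', ObjectFieldsAreZero);
  Writeln('managed local empty: ', ManagedLocalIsEmpty);
  Writeln('record of locals cleared: ', LocalsCleared);
end.
```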

-

On 10/29/2024 at 11:04 AM, Dalija Prasnikar said: It compiles for me... but after adding missing quotes in the interface GUID declaration. TParallelArray is not the problem here.

This is a truly weird experience. On Tuesday my compiler kept saying "There is no overloaded version of tParallelArray.For ... that can be called with these arguments" and I searched in vain for the cause.

Then something came up and I had to abandon the project for a few days. Today I resumed working on it and it compiled just fine, although I had changed nothing!

I think that something got corrupted in the memory of the IDE process on Tuesday and the reboot just made it go away... I have been getting regular exceptions in the IDE since installing the inline update for 12.2 (build 29.0.53982.0329). Anyway, I'm thrilled that I can continue now.

-

Hello all,

just a short question: is System.Threading.tParallelArray not usable with interfaces? The following won't compile:

```pascal
type
  iDownloadJob = interface
    ['{3d24b7a2-6111-46e7-a281-7fdc318be5c4}']
    procedure Download;
  end;

procedure test;
var
  x: array of iDownloadJob;
  temp: Integer;
begin
  // ..... fill the array here ....
  temp := tParallelArray.ForThreshold;
  tParallelArray.ForThreshold := 1;
  try
    tParallelArray.&For<iDownloadJob>(x,
      procedure(const AValues: array of iDownloadJob; AFrom, ATo: NativeInt)
      var
        i: NativeInt;
      begin
        for i := AFrom to ATo do
          AValues[i].Download;
      end);
  finally
    tParallelArray.ForThreshold := temp;
  end;
end;
```

It would be just my luck if such a nice new feature doesn't work for me 😞 .
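If tParallelArray.&For keeps rejecting the interface array, a possible fallback (a sketch, not a diagnosis of the error) is the older TParallel.&For from the same unit, which simply hands out indexes. TCountingJob, RunAll and Calls are hypothetical; the job only counts calls so the result can be checked.

```pascal
program ParallelForDemo;

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.Threading, System.SyncObjs;

type
  IDownloadJob = interface
    ['{3D24B7A2-6111-46E7-A281-7FDC318BE5C4}']
    procedure Download;
  end;

  // Hypothetical job that only records that Download was called
  TCountingJob = class(TInterfacedObject, IDownloadJob)
    procedure Download;
  end;

var
  Calls: Integer = 0;

procedure TCountingJob.Download;
begin
  TInterlocked.Increment(Calls); // thread-safe counter
end;

procedure RunAll(const Jobs: TArray<IDownloadJob>);
var
  Local: TArray<IDownloadJob>;
begin
  Local := Jobs; // capture a local copy in the anonymous method
  // TParallel.&For blocks until every index in 0..High(Local) has been processed
  TParallel.&For(0, High(Local),
    procedure(I: Integer)
    begin
      Local[I].Download;
    end);
end;

var
  Jobs: TArray<IDownloadJob>;
  I: Integer;
begin
  SetLength(Jobs, 100);
  for I := 0 to High(Jobs) do
    Jobs[I] := TCountingJob.Create;
  RunAll(Jobs);
  Writeln('Download called ', Calls, ' times');
end.
```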

-

Am I right that the internal source code formatter is no longer there?

It is still documented as a feature on Embarcadero's site, though: https://docwiki.embarcadero.com/RADStudio/Athens/en/Source_Code_Formatter

Is there a third-party formatter that handles generics correctly?

-

On 9/30/2024 at 3:27 PM, Anders Melander said: ...once they have signed in with a USB token.

I don't think you can get around the requirement for the signer to have some kind of physical identification device.

That's not what I read:

"DigiCert KeyLocker is a cloud-based service that helps you generate and store the private key without a physical HSM (Hardware Security Module). It was developed to reduce certificate administrators’ efforts and strengthen private key security."

(https://signmycode.com/blog/what-is-digicert-keylocker-everything-to-know-about)

-

6 hours ago, Vincent Parrett said: No, that would be terribly wasteful - we calculate the digest on the client and send that to the server to be signed.

I'm very interested!

-

1 minute ago, Anders Melander said: Hmm. Okay.

I obviously don't know how your build server setup was, or what build system you used, but it should have been possible to completely isolate the different projects.

Independent projects, with different developers, tools, etc. on the same build server is nothing out of the ordinary.

Anyway, if you are working on different projects, and don't want a centralized solution, then why not just use different certificates?

The certificate is issued to the company name; having multiple ones would multiply the costs.

I'm just reading up on DigiCert KeyLocker, which appears to be a cloud-based solution. If I understand correctly, DigiCert keeps the USB device with the certificate and users can access it remotely.

https://signmycode.com/blog/what-is-digicert-keylocker-everything-to-know-about

https://www.digicert.com/blog/announcing-certcentrals-new-keylocker

-

2 minutes ago, Anders Melander said: There's your problem.

You should use a single central build server instead of delegating the build task to individual developers. If you don't have a central server which can function as a build server, at least designate one of the developers as the "build master".

We moved away from a build server several years ago; we work on several independent projects and kept getting in each other's way.

-

I have updated my Delphi 12.1 Enterprise to version 12.2, selecting the Windows, Android and Linux platforms in the installer.

But the Linux platform seems to be missing in the IDE and fmxLinux is unavailable in GetIt.

Am I missing something?

-

Hello all,

We're a small company; our Delphi developer team works largely remotely, and I'm one of the members who actually lives in a different country from the rest of the team.

We frequently have to release updates of our various software products. Each team member uses FinalBuilder and SignTool to automate compiling, code signing and generating setups. Some of our products consist of dozens of executables and DLLs, so automating the build-and-sign process is a must-have.

Our DigiCert EV code signing certificate expires in February 2025.

We're now faced with the problem that certificate providers seem to expect you to keep the certificate on a USB device, which is rather impractical if developers work remotely from different countries. We need shared access to the certificate and we need to be able to automate the signing process.

I'd very much like to hear how other developer teams in the same boat tackle this problem.

-

Same here. Works in 32-bit, does not work in 64-bit.

-

1 hour ago, David Schwartz said: What you want is a circular buffer that points to buffers in a memory pool if they're all the same size, or pools of different sizes if they vary. The pool pre-allocates (static) buffers that are re-used, so no fragmentation occurs.

Memory fragmentation is not your biggest issue; it's atomic reads and writes, or test-and-sets to avoid corruption and gridlock from race conditions.

Access to the queue is only done by a single thread. A circular buffer is not handy because this queue keeps growing until a certain condition is met. Then a number of elements are removed from the beginning of the queue until the condition is no longer met.
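For a single-threaded queue that keeps growing, one option is TQueue<T> from System.Generics.Collections; as far as I know it uses its backing array circularly and enlarges it when full, so Dequeue does not shift the remaining elements. The 17-byte TElement layout and the "keep at most Limit elements" rule in TrimQueue are hypothetical stand-ins for the real record and condition.

```pascal
program QueueDemo;

{$APPTYPE CONSOLE}

uses
  System.Generics.Collections;

type
  // Hypothetical 17-byte record without managed fields
  TElement = packed record
    Stamp: Int64;
    Value: Int64;
    Flag: Byte;
  end;

// Hypothetical trimming rule: drop the oldest elements while more than Limit remain
procedure TrimQueue(Q: TQueue<TElement>; Limit: Integer);
begin
  while Q.Count > Limit do
    Q.Dequeue; // removes the element at the head (the oldest one)
end;

var
  Q: TQueue<TElement>;
  E: TElement;
  I: Integer;
begin
  Q := TQueue<TElement>.Create;
  try
    for I := 1 to 10 do
    begin
      E := Default(TElement);
      E.Stamp := I;
      Q.Enqueue(E); // appends at the tail
    end;
    TrimQueue(Q, 4);
    Writeln('remaining: ', Q.Count, ', oldest stamp: ', Q.Peek.Stamp);
  finally
    Q.Free;
  end;
end.
```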

-

1 hour ago, Kas Ob. said: Don't worry there; as long as you are using FastMM (or the default memory manager, they are the same), there is no memory fragmentation. In fact it is impossible to fragment the memory when using FastMM with one allocation. These lists/arrays... are one allocation from the point of view of the MM, so however you operate on them, like delete and append, it is always one contiguous allocation.

My worry is: whenever I delete an element at the beginning, does the RTL just allocate a new block and copy the old array minus the first element to the new location?
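One way to check this empirically for a given Delphi version is to compare the array's base address before and after Delete. Note that either way, removing element 0 of a contiguous array means the remaining elements must be shifted or copied, so each such Delete costs time proportional to the array length. TElement mirrors the 17-byte record from the question, with hypothetical field names.

```pascal
program DeleteProbe;

{$APPTYPE CONSOLE}

type
  // Hypothetical 17-byte element without managed fields
  TElement = packed record
    Stamp: Int64;
    Value: Int64;
    Flag: Byte;
  end;

var
  A: TArray<TElement>;
  Before, After: Pointer;
begin
  SetLength(A, 5000);
  Before := Pointer(A);   // address of element 0
  Delete(A, 0, 1);        // drop the oldest element
  After := Pointer(A);
  Writeln('SizeOf(TElement) = ', SizeOf(TElement));
  Writeln('length after Delete = ', Length(A));
  Writeln('block address changed: ', Before <> After);
end.
```

An unchanged address does not prove that no copy happened (a reallocation can be done in place), but a changed one shows that a new block was used.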

-

Hello all,

I have the situation where I have a dynamic array containing small fixed-size records (17 bytes in size, no managed types).

The array behaves like a queue, new elements get appended at the end, old elements get removed from the start. This happens 24/7 and the application is mission critical. There may be anything from 0-5000 elements in the array. My logic for appending is simply "MyArray:=MyArray+[element]" and my logic for removing the oldest element is simply "Delete(MyArray,0, 1)".

I'm worried that there might be a risk of excessive memory fragmentation and I'd like to hear your opinions on this. Thanks in advance!
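A sketch of an alternative to "Delete(MyArray, 0, 1)" that keeps a single dynamic array but avoids shifting it on every removal: a head index marks the oldest live element, and the consumed prefix is reclaimed with one Move only when at least half the slots are dead. TElementQueue and its methods are hypothetical; Move is only safe here because TElement has no managed fields.

```pascal
program HeadIndexQueue;

{$APPTYPE CONSOLE}

type
  TElement = packed record // hypothetical 17-byte record, no managed fields
    Stamp: Int64;
    Value: Int64;
    Flag: Byte;
  end;

  TElementQueue = record
  private
    FItems: TArray<TElement>;
    FHead: Integer;  // index of the oldest live element
    FCount: Integer; // slots in use, including consumed ones before FHead
  public
    procedure Init;
    procedure Push(const E: TElement);
    function Pop: TElement;
    function Count: Integer;
  end;

procedure TElementQueue.Init;
begin
  FItems := nil;
  FHead := 0;
  FCount := 0;
end;

procedure TElementQueue.Push(const E: TElement);
var
  Live: Integer;
begin
  if FCount = Length(FItems) then
  begin
    Live := FCount - FHead;
    if (FHead > 0) and (FHead * 2 >= FCount) then
    begin
      // At least half of the slots are consumed: slide the live part to the front
      if Live > 0 then
        Move(FItems[FHead], FItems[0], Live * SizeOf(TElement));
      FHead := 0;
      FCount := Live;
    end
    else
      // Mostly live: grow geometrically so appends stay amortized O(1)
      SetLength(FItems, Length(FItems) * 2 + 16);
  end;
  FItems[FCount] := E;
  Inc(FCount);
end;

function TElementQueue.Pop: TElement;
begin
  Assert(FHead < FCount, 'queue is empty');
  Result := FItems[FHead];
  Inc(FHead); // O(1): nothing is moved
  if FHead = FCount then
  begin
    // Drained: rewind instead of moving anything
    FHead := 0;
    FCount := 0;
  end;
end;

function TElementQueue.Count: Integer;
begin
  Result := FCount - FHead;
end;

var
  Q: TElementQueue;
  E: TElement;
  I: Integer;
begin
  Q.Init;
  for I := 1 to 10 do
  begin
    E := Default(TElement);
    E.Stamp := I;
    Q.Push(E);
  end;
  for I := 1 to 3 do
    Q.Pop;
  Writeln('live elements: ', Q.Count); // 7
  E := Q.Pop;
  Writeln('next stamp: ', E.Stamp);    // 4
end.
```

The backing array never shrinks in this sketch, which is usually acceptable for a queue bounded at a few thousand small records.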

-

Hello all,

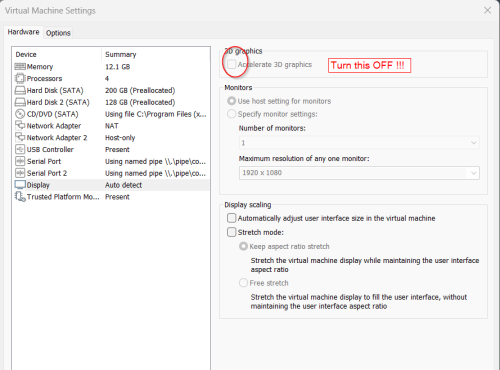

I am using Delphi 11 & 12 inside a VMware Workstation 17 Pro VM which currently runs Windows 11. This VM has VMware Tools installed. This setup used to work extremely well, but for about six weeks I have noticed that VCL drawing is abnormally slow, both inside the Delphi IDE itself and inside my own programs. This slowness only happens in a VM running Windows 11; it does not happen in other VMs running Windows 10.

I attach a GIF file here. It shows what happens when I switch the Delphi 11 IDE between code view and form view. The form is just a simple tFrame with a groupbox and some checkboxes, nothing fancy.

You can literally see the checkboxes being drawn. I have already tried a "repair installation" of the operating system but that fixes nothing.

I'd be grateful if anyone has an idea what might be the cause of this.

-

On 6/27/2024 at 11:49 AM, #ifdef said: Just out of interest, what tool do you use to create GIFs like this?

-

15 hours ago, balabuev said: Did you know that the following will automatically work with TDictionary:

```pascal
type
  TMyKey = record
  public
    class operator Equal(const A, B: TMyKey): Boolean;
    function GetHashCode: Integer;
  end;
```

Without the need to write a custom equality comparer. At least in Delphi 12 it works. And the System.TypInfo unit contains the GetRecCompareAddrs function, which is used in the default equality comparer implementation. I realized it only recently, and see no docs/info on the internet.
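A sketch to test the claim above, assuming Delphi 12 behaves as described (I have not checked other versions). TMyKey gets a Tag field that Equal and GetHashCode deliberately ignore, so a purely byte-wise comparer would treat K1 and K2 as different keys, while the record's own operator treats them as equal. The lookup result therefore shows which comparison the dictionary actually used.

```pascal
program RecordKeyDemo;

{$APPTYPE CONSOLE}

uses
  System.Generics.Collections;

type
  TMyKey = record
    X, Y: Integer;
    Tag: Integer; // deliberately ignored by Equal and GetHashCode
  public
    class operator Equal(const A, B: TMyKey): Boolean;
    function GetHashCode: Integer;
  end;

class operator TMyKey.Equal(const A, B: TMyKey): Boolean;
begin
  Result := (A.X = B.X) and (A.Y = B.Y);
end;

function TMyKey.GetHashCode: Integer;
begin
  // Simple mixing; xor and shifts are never overflow-checked
  Result := X xor (Y shl 16);
end;

var
  Dict: TDictionary<TMyKey, string>;
  K1, K2: TMyKey;
  Found: string;
begin
  K1.X := 1; K1.Y := 2; K1.Tag := 0;
  K2.X := 1; K2.Y := 2; K2.Tag := 99; // "equal" to K1, but not byte for byte

  Dict := TDictionary<TMyKey, string>.Create;
  try
    Dict.Add(K1, 'first');
    // True only if the dictionary uses TMyKey's own Equal/GetHashCode
    Writeln('lookup with K2 succeeded: ', Dict.TryGetValue(K2, Found));
  finally
    Dict.Free;
  end;
end.
```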

Hey, that's actually really cool!

-

You could put a tScrollbox on your form and put the rest of your components on top of that. The scrollbox can be larger than the form itself and you can simply use the scrollbars to scroll the components into view.
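At design time this is just a matter of dropping the scroll box first and placing the other controls on it. The same idea at runtime, as a minimal sketch: the form is built with CreateNew (no .dfm), and the twenty TEdit controls are hypothetical content that is taller than the form.

```pascal
program ScrollBoxDemo;

uses
  System.SysUtils, Vcl.Forms, Vcl.Controls, Vcl.StdCtrls;

var
  Form: TForm;
  Box: TScrollBox;
  Edit: TEdit;
  I: Integer;
begin
  Application.Initialize;
  // CreateNew builds a form without loading a .dfm resource
  Form := TForm.CreateNew(Application);
  try
    Form.Caption := 'TScrollBox demo';
    Form.ClientWidth := 260;
    Form.ClientHeight := 200; // deliberately smaller than the content

    // The scroll box fills the form; all other controls go on top of it
    Box := TScrollBox.Create(Form);
    Box.Parent := Form;
    Box.Align := alClient;

    // Hypothetical content: 20 edits stacked vertically, roughly 600 px tall
    for I := 0 to 19 do
    begin
      Edit := TEdit.Create(Form);
      Edit.Parent := Box; // parent is the scroll box, not the form
      Edit.SetBounds(8, 8 + I * 30, 200, 24);
      Edit.Text := 'Field ' + IntToStr(I + 1);
    end;

    // With AutoScroll (on by default) a vertical scroll bar appears
    Form.ShowModal;
  finally
    Form.Free;
  end;
end.
```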

-

2 hours ago, Cristian Peța said:

```pascal
procedure Foo; stdcall;
begin
  SetFPCR;
  ...
  RestoreFPCR;
end;
```

Do you think it is so cumbersome to do this for every exposed function?

You need to write SetFPCR and RestoreFPCR yourself, but only once.

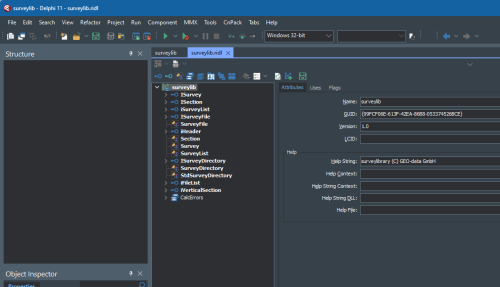

It would be a can of worms for me, I'm afraid. I am thinking of all my Delphi COM DLLs that expose interfaces and class factories (see image). Each interface is basically an object that can have dozens of methods, so we're not talking about just a few functions; it's more like hundreds of exposed methods. And many of these methods call each other, which complicates matters further because SetFPCR/RestoreFPCR would have to support nesting. And multi-threading would make matters even more complicated.

-

11 minutes ago, David Heffernan said: One very obvious problem is that you need to write code like this everywhere. Not just at the top level. I stand by my advice before. Either:

1. Make FPCR part of the contract, or

2. Take control on entry, and restore on exit.

"Take control on entry and restore on exit" would be very cumbersome in the case of DLLs written in Delphi. It would need to be done in every exposed function / method.

(Edit:) or at least in every method that performs floating-point calculations.
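A sketch of what "take control on entry, restore on exit" could look like per exposed routine. Instead of the hypothetical SetFPCR/RestoreFPCR pair from the quote, it uses SetExceptionMask from System.Math, which returns the previous mask. Because each routine restores exactly what its own caller had, nested calls unwind correctly without any extra bookkeeping. The FPU/SSE control state is per thread, but this sketch does not settle the wider threading concerns mentioned above. SafeDivide and Outer are hypothetical.

```pascal
program FPUMaskDemo;

{$APPTYPE CONSOLE}

uses
  System.Math;

// Hypothetical exported routine: takes control on entry, restores on exit
function SafeDivide(A, B: Double): Double; stdcall;
var
  SavedMask: TArithmeticExceptionMask;
begin
  SavedMask := SetExceptionMask(exAllArithmeticExceptions); // returns the old mask
  try
    Result := A / B; // with everything masked, 1/0 yields +INF instead of raising
  finally
    SetExceptionMask(SavedMask); // give the caller back its own state
  end;
end;

// Hypothetical outer routine calling SafeDivide: the pattern nests naturally
function Outer(A, B: Double): Double; stdcall;
var
  SavedMask: TArithmeticExceptionMask;
begin
  SavedMask := SetExceptionMask(exAllArithmeticExceptions);
  try
    Result := SafeDivide(A, B) * 2; // SafeDivide restores Outer's mask on return
  finally
    SetExceptionMask(SavedMask);
  end;
end;

var
  Before: TArithmeticExceptionMask;
begin
  Before := GetExceptionMask;
  Writeln('1/0 is infinite: ', IsInfinite(SafeDivide(1, 0)));
  Writeln('nested result: ', Outer(6, 3):0:1);
  Writeln('caller mask restored: ', GetExceptionMask = Before);
end.
```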

-

Strict type checking for tObject.

Hello all,

I saw how FreeAndNil is implemented in the RTL using a "CONST [ref]" parameter, after which a little "tweak" is used to NIL the referenced variable. I wondered why the heck the object wasn't simply passed as a VAR parameter in the first place, until I tried that out and found that it wouldn't compile. Delphi insists that you either pass the exact object type or use a typecast.

What I don't understand is why Delphi is so strict here. If a class derives from tObject, it still has all the original stuff from its ancestor in place, so you can't really "break" anything by treating it as a tObject. It feels counter-intuitive.
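A small experiment that hints at the reason: a VAR parameter can be written to, not just read. A routine taking "var Obj: TObject" may legally store any TObject in it, which would leave a TStringList variable pointing at something that is not a TStringList. ReplaceWithPlainObject is hypothetical; the typecast forces the call through and shows the effect. Presumably this is why FreeAndNil uses const [ref]: it only ever writes nil, which is valid for every class type.

```pascal
program VarParamDemo;

{$APPTYPE CONSOLE}

uses
  System.Classes;

// Hypothetical routine: legal on its own, because Obj is declared as TObject
procedure ReplaceWithPlainObject(var Obj: TObject);
begin
  Obj.Free;
  Obj := TObject.Create; // any TObject may be stored in a TObject variable
end;

var
  List: TStringList;
begin
  List := TStringList.Create;

  // ReplaceWithPlainObject(List);
  // ^ rejected with E2033 (types of actual and formal var parameters must be identical)

  // The variable typecast forces it through and exposes the problem:
  ReplaceWithPlainObject(TObject(List));
  Writeln('List now refers to a ', List.ClassName); // a TStringList variable holding a TObject
  // Calling List.Add at this point would be undefined behaviour.

  List.Free; // frees the plain TObject that now sits in List
end.
```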