Renate Schaaf

Content Count: 136
Days Won: 5

Posts posted by Renate Schaaf

14 hours ago, chmichael said: HEIF files containing HEVC-encoded images are also known as HEIC files. Such files require less storage space than the equivalent quality JPEG.

HEIF is a container format like .mp4. As far as I can see, Windows handles this file format only via WIC (TWICImage). MFPack contains headers for this, but all that goes a bit over my head.

If you want to test HEVC compression, you can do this with BitmapsToVideoWMF by creating an .mp4 file with just one frame, using the procedures below.

This is anything but fast, because initializing and finalizing Media Foundation takes a long time.

A quick test compressed a 2.5 MB .jpg taken with my digital camera to an .mp4 of 430 KB, with no quality loss visible at first glance.

    uses
      System.SysUtils, VCL.Graphics, uTools, uTransformer, uBitmaps2VideoWMF;

    procedure EncodeImageToHEVC(const InputFilename, OutputFileName: string);
    var
      wic: TWicImage;
      bm: TBitmap;
      bme: TBitmapEncoderWMF;
    begin
      Assert(SameText(ExtractFileExt(OutputFileName), '.mp4'));
      wic := TWicImage.Create;
      try
        bm := TBitmap.Create;
        try
          wic.LoadFromFile(InputFilename);
          WicToBmp(wic, bm);
          bme := TBitmapEncoderWMF.Create;
          try
            // Make an .mp4 with one frame.
            // A frame rate of 1/50 would display it for 50 seconds.
            bme.Initialize(OutputFileName, bm.Width, bm.Height, 100, 1 / 50, ciH265);
            bme.AddFrame(bm, false);
          finally
            bme.Free;
          end;
        finally
          bm.Free;
        end;
      finally
        wic.Free;
      end;
    end;

    procedure DecodeHEVCToBmp(const mp4File: string; const Bmp: TBitmap);
    var
      vi: TVideoInfo;
    begin
      vi := uTransformer.GetVideoInfo(mp4File);
      GetFrameBitmap(mp4File, Bmp, vi.VideoHeight, 1);
    end;

-


1 hour ago, chmichael said: I was hoping I could use the H264/5 hardware encoder/decoder for a single image. It should be faster and smaller than turbo-jpeg.

This is why that makes no sense for a single image (quoted from https://cloudinary.com/guides/video-formats/h-264-video-encoding-how-it-works-benefits-and-9-best-practices):

"H.264 uses inter-frame compression, which compares information between multiple frames to find similarities, reducing the amount of data needed to be stored or transmitted. Predictive coding uses information from previous frames to predict the content of future frames, further reducing the amount of data required. These and other advanced techniques enable H.264 to deliver high-quality video at low bit rates."

-

15 hours ago, chmichael said: Hardware encode only 1 image?

Do you mean encoding to Jpeg or Png? A video format would not make any sense for a single image.

NVIDIA chips can apparently do that, but I don't know of any Windows API that supports it.

If you want to encode to Png or Jpeg a bit faster than with TPngImage or TJpegImage, use a TWicImage.

Look at this nice post on Stack Overflow for a way to set the Jpeg compression quality using TWicImage:

https://stackoverflow.com/questions/42225924/twicimage-how-to-set-jpeg-compression-quality

-


I'll see what I can do. Stream support might be tricky; I would need to learn more about it.

-


New version at https://github.com/rmesch/Parallel-Bitmap-Resampler:

It has more efficient code for the unsharp mask, and I added more comments in the code to explain what I'm doing.

Procedures with explanatory comments:

uScaleCommon.Gauss

uScaleCommon.MakeGaussContributors

uScaleCommon.ProcessRowUnsharp

See also the type TUnsharpParameters in uScale.pas.

Would it be a good idea to overload the UnsharpMask procedure to take sigma instead of radius as a parameter? That might make comparison with other implementations easier.
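A minimal sketch of what such an overload pair could look like, assuming the 10^-2 cutoff described in another post here. The names `MakeGaussWeights` and `CutoffRatio` are hypothetical and not taken from uScale.pas or uScaleCommon.pas. The sigma version derives the radius from the cutoff, and the radius version solves the same relation for sigma, so both end up in one weight routine.

```pascal
program GaussOverloads;
{$APPTYPE CONSOLE}
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

uses
  SysUtils, Math;

type
  TWeights = array of Double;

const
  // Assumption: the kernel is cut off where it falls to 1/100 of its peak.
  CutoffRatio = 0.01;

// Normalized weights for the offsets -Radius..Radius (index 0 = offset -Radius).
// Requires Sigma > 0 and Radius >= 0.
function MakeGaussWeights(Sigma: Double; Radius: Integer): TWeights; overload;
var
  i: Integer;
  Sum: Double;
begin
  SetLength(Result, 2 * Radius + 1);
  Sum := 0;
  for i := -Radius to Radius do
  begin
    Result[i + Radius] := Exp(-Sqr(i) / (2 * Sqr(Sigma)));
    Sum := Sum + Result[i + Radius];
  end;
  for i := 0 to High(Result) do
    Result[i] := Result[i] / Sum; // the weights now add up to 1
end;

// Sigma-based overload: the radius follows from the cutoff.
function MakeGaussWeights(Sigma: Double): TWeights; overload;
begin
  Result := MakeGaussWeights(Sigma, Ceil(Sigma * Sqrt(2 * Ln(1 / CutoffRatio))));
end;

// Radius-based overload: the same relation solved for sigma (Radius >= 1).
function MakeGaussWeights(Radius: Integer): TWeights; overload;
begin
  Result := MakeGaussWeights(Radius / Sqrt(2 * Ln(1 / CutoffRatio)), Radius);
end;

var
  BySigma, ByRadius: TWeights;

begin
  BySigma := MakeGaussWeights(1.0); // radius Ceil(3.03) = 4, so 9 taps
  ByRadius := MakeGaussWeights(3);  // 7 taps, sigma about 0.99
  Writeln('sigma 1.0: ', Length(BySigma), ' taps');
  Writeln('radius 3:  ', Length(ByRadius), ' taps');
end.
```

The overloads are distinguished only by parameter type (Double vs. Integer), so a call with an integer literal picks the radius version.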

-


9 hours ago, Anders Melander said: I have a benchmark suite that compares the performance and fidelity of 8 different implementations. I'll try to find time to integrate your implementation into it.

Hi Anders,

It's great that you're thinking of it, but hold off on that for a bit. I noticed that I compute the weights in a horrendously stupid way. The weights are mostly identical; it's not like resampling, dumb me. Taking care of that reduces memory usage by a lot, and the subsequent application of the weights becomes much faster.
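To illustrate why identical weights help: for a blur (unlike resampling) one kernel serves every pixel of a row, so it can be computed once and reused. This is a minimal sketch assuming 8-bit single-channel rows and clamped edges; `ConvolveRow` is a hypothetical name, not code from the repository.

```pascal
program ApplyWeights;
{$APPTYPE CONSOLE}
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

uses
  SysUtils;

type
  TWeights = array of Double;
  TRow = array of Byte;

// Convolves one row with a symmetric kernel that was computed once beforehand.
// Every pixel uses the same weights, so nothing has to be stored per pixel.
// Indices outside the row are clamped to the first/last pixel (assumed edge mode).
function ConvolveRow(const Src: TRow; const Weights: TWeights): TRow;
var
  x, k, j, Radius: Integer;
  Acc: Double;
begin
  Radius := Length(Weights) div 2;
  SetLength(Result, Length(Src));
  for x := 0 to High(Src) do
  begin
    Acc := 0;
    for k := -Radius to Radius do
    begin
      j := x + k;
      if j < 0 then
        j := 0
      else if j > High(Src) then
        j := High(Src);
      Acc := Acc + Weights[k + Radius] * Src[j];
    end;
    Result[x] := Round(Acc); // stays within 0..255 for non-negative weights summing to 1
  end;
end;

var
  Weights: TWeights;
  Row, Blurred: TRow;

begin
  // A tiny normalized kernel, built once and reused for every pixel.
  SetLength(Weights, 3);
  Weights[0] := 0.25;
  Weights[1] := 0.5;
  Weights[2] := 0.25;

  SetLength(Row, 5); // dynamic arrays start out zeroed
  Row[2] := 100;

  Blurred := ConvolveRow(Row, Weights);
  Writeln(Blurred[0], ' ', Blurred[1], ' ', Blurred[2], ' ', Blurred[3], ' ', Blurred[4]);
end.
```

This prints `0 25 50 25 0`: the single bright pixel is spread over its neighbours with the same three weights everywhere.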

I've also changed the sigma-to-radius ratio a bit according to your suggestion. I find it hard to make results look nice with the cutoff at half the max value, so I changed it to 10^-2 times the max value. This still allows for smaller radii, and it becomes a bit faster again.
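The cutoff rule can be turned into a radius as follows. This is a short derivation assuming the unnormalized kernel $g(x) = e^{-x^2/(2\sigma^2)}$ with peak value $g(0) = 1$ and cutoff fraction $\varepsilon$:

```latex
\begin{aligned}
g(r) = \varepsilon\, g(0)
  &\iff e^{-r^2/(2\sigma^2)} = \varepsilon
  \iff r = \sigma\sqrt{2\ln(1/\varepsilon)} \\
\varepsilon = \tfrac{1}{2} &:\quad r = \sigma\sqrt{2\ln 2} \approx 1.18\,\sigma \\
\varepsilon = 10^{-2} &:\quad r = \sigma\sqrt{2\ln 100} \approx 3.03\,\sigma
\end{aligned}
```

Presumably this is where the smaller radii come from: at the same sigma, the $10^{-2}$ cutoff stops at about $3\sigma$, whereas sigma = 0.2*Radius corresponds to a radius of $5\sigma$.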

So, before you do anything, I would like to finish these changes and also comment the code a bit more. (Forces me to really understand what I'm doing 🙂)

-

43 minutes ago, Anders Melander said: But you have a ratio of 0.5

I took sigma = 0.2*Radius, but it's easy to change that to something more common. I just took a value for which the integral is very close to 1. As for other implementations, I'm ready to learn. I implemented it as accurately as I could think of without it being overly slow. Performance is quite satisfying to me, but I bet with your input it'll get faster 🙂
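Why sigma = 0.2*Radius gives an integral so close to 1: with $R = 5\sigma$, the share of the normalized Gaussian that lies inside $[-R, R]$ is an error-function value (a standard result, written out here for reference):

```latex
\int_{-R}^{R} \frac{1}{\sigma\sqrt{2\pi}}\, e^{-x^2/(2\sigma^2)}\,dx
  = \operatorname{erf}\!\left(\frac{R}{\sigma\sqrt{2}}\right)
  = \operatorname{erf}\!\left(\frac{5}{\sqrt{2}}\right)
  \approx 1 - 5.7\cdot 10^{-7}
```

At the edge $x = R$, the kernel has dropped to $e^{-12.5} \approx 3.7\cdot 10^{-6}$ of its peak.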

-

I just uploaded a new version to https://github.com/rmesch/Parallel-Bitmap-Resampler

Newest addition: a parallel unsharp mask using Gaussian blur. It can be used to sharpen or blur images.
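The usual unsharp-mask formula, shown for a single row as a minimal sketch (not the parallel implementation in the repository): the result is the original plus Amount times the difference between the original and the blurred row. Under this convention a positive Amount sharpens and a negative Amount moves pixels toward the blurred version, which is one way a single routine can do both; whether the resampler uses exactly this parameterization is an assumption. `UnsharpRow` is a hypothetical name.

```pascal
program UnsharpRowDemo;
{$APPTYPE CONSOLE}
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

uses
  SysUtils;

type
  TRow = array of Byte;

// Unsharp mask for one row: Dst = Src + Amount * (Src - Blurred), rounded and clamped to 0..255.
// Amount > 0 sharpens, Amount = -1 gives the blurred row, -1 < Amount < 0 blends toward it.
function UnsharpRow(const Src, Blurred: TRow; Amount: Double): TRow;
var
  x: Integer;
  v: Int64;
begin
  Assert(Length(Src) = Length(Blurred));
  SetLength(Result, Length(Src));
  for x := 0 to High(Src) do
  begin
    v := Round(Src[x] + Amount * (Src[x] - Blurred[x]));
    if v < 0 then
      v := 0
    else if v > 255 then
      v := 255;
    Result[x] := v;
  end;
end;

var
  Src, Blurred, Sharp, Soft, Strong: TRow;

begin
  SetLength(Src, 2);
  Src[0] := 100;
  Src[1] := 200;
  SetLength(Blurred, 2);
  Blurred[0] := 150; // stand-in for a Gaussian-blurred copy of Src
  Blurred[1] := 150;

  Sharp := UnsharpRow(Src, Blurred, 1.0);  // 50, 250
  Soft := UnsharpRow(Src, Blurred, -1.0);  // 150, 150
  Strong := UnsharpRow(Src, Blurred, 2.0); // 0, 300 clamped to 255
  Writeln(Sharp[0], ' ', Sharp[1], ' / ', Soft[0], ' ', Soft[1], ' / ', Strong[0], ' ', Strong[1]);
end.
```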

A dedicated VCL demo, "Sharpen.dproj", is included. For FMX, the effect can be seen in the thumbnail-viewer demo (ThreadsInThreadsFMX.dproj).

This is for the "modern" version, 10.4 and up.

I haven't ported the unsharp mask to the legacy version (Delphi 2006 and up) yet; that requires more work, but I plan on doing so.

Renate

-

22 hours ago, chmichael said: Just curious, anyone tried Skia for resampling?

I did a quick test with the demo of the FMX version of my resampler, just doing "Enable Skia" on the project.

In the demo I compare my results to TCanvas.DrawBitmap with HighSpeed set to false.

I see that the Skia canvas is being used, and that HighSpeed=False results in Skia resampling being set to

    SkSamplingOptionsHigh: TSkSamplingOptions = (UseCubic: True; Cubic: (B: 1 / 3; C: 1 / 3); Filter: TSkFilterMode.Nearest; Mipmap: TSkMipmapMode.None);

So, some form of cubic resampling, if I read that right.
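For reference, B and C are the parameters of the Mitchell-Netravali family of cubic filters, and B = C = 1/3 is the combination Mitchell and Netravali recommended. The sketch below evaluates that kernel (the function name is hypothetical; this is not Skia's code). Its weights at integer offsets are 8/9 for the center and 1/18 for each neighbour, so unlike Catmull-Rom (B = 0, C = 1/2) it does not reproduce the original sample values and softens the image a little. That might partly explain why the result looks no sharper than bilinear, but this is a guess.

```pascal
program CubicKernel;
{$APPTYPE CONSOLE}
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

uses
  SysUtils;

// Mitchell-Netravali cubic filter with parameters B and C, support [-2, 2].
function MitchellNetravali(x, B, C: Double): Double;
begin
  x := Abs(x);
  if x < 1 then
    Result := ((12 - 9 * B - 6 * C) * x * x * x
      + (-18 + 12 * B + 6 * C) * x * x
      + (6 - 2 * B)) / 6
  else if x < 2 then
    Result := ((-B - 6 * C) * x * x * x
      + (6 * B + 30 * C) * x * x
      + (-12 * B - 48 * C) * x
      + (8 * B + 24 * C)) / 6
  else
    Result := 0;
end;

var
  W0, W1, W2: Double;

begin
  // The values used by SkSamplingOptionsHigh: B = C = 1/3.
  W0 := MitchellNetravali(0, 1 / 3, 1 / 3);
  W1 := MitchellNetravali(1, 1 / 3, 1 / 3);
  W2 := MitchellNetravali(2, 1 / 3, 1 / 3);
  Writeln(Format('w(0) = %.4f  w(1) = %.4f  w(2) = %.4f', [W0, W1, W2]));
end.
```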

Result:

Timing is slightly slower than native FMX drawing, but still a lot faster than my parallel resampling.

I see no improvement in quality over plain FMX, which supposedly uses bilinear resampling with this setting.

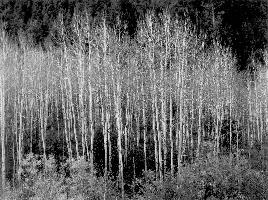

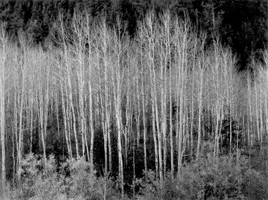

Here are two results. (How do you make this browser use the original pixel size? This is scaled!)

This doesn't look very cubic to me. As a comparison, here are the results of my resampler using the bicubic filter:

I might not have used Skia to its most favorable advantage.

Renate

-


9 minutes ago, Anders Melander said: I'm guessing they used "cooked" jpegs because there's really not much magic that can be done here.

OK, I'll stop thinking about it. Time to get some sleep :)

-

Hi Anders,

Thanks for explaining. I had a feeling that the compression is too "global" to parallelize. But...

From what I have read in the meantime, it seems that parts of the decompression could be done in parallel.

This link is about compression, but couldn't it apply to decompression too? (Not that I know anything about it 🙂)

Anyway, there are research papers that claim a speedup from doing parts of the decoding in parallel.

-

6 hours ago, FreeDelphiPascal said: Hi. Do you have something similar but for parallel jpeg decoding?

Sorry, no, but it sounds like a good idea, naively speaking. I have no idea how parallelizable JPEG decoding is 🙂

-

38 minutes ago, David Schwartz said: I don't think you can load any components into the CE versions

Sure you can.

-

The use of TVirtualImageList is not essential at all, just cosmetic. I have replaced it with the old TImageList now. I hope it works, and thanks!

-

4 hours ago, Kas Ob. said: The problem in such calculation with that your are not using audio sample rate and the ability to divide the audio sample right, so my suggestion is to to round that fSampleDuration to what audio sample allow you and will be acceptable to the encoder and of course to the decoder in later stage.

I think I know what you mean; I'll play around with it some. The problem is that I really need a well-defined video frame rate, otherwise the user can't time the input. In the meantime I have figured out the audio-sample duration for a given sample rate, and I use it for the number of audio samples to be read ahead. Audio sync is now acceptable to me. The problem now is that some decoders in Windows, like the one for .mpg, are sloppily implemented.
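A sketch of the arithmetic involved, assuming AAC-LC audio (1024 samples per channel per frame) and Media Foundation's 100-ns time unit; the function names are hypothetical. It computes the duration of one AAC frame and checks whether a given video frame rate contains a whole number of audio samples per frame, which is the kind of rounding Kas Ob. suggests.

```pascal
program AudioTiming;
{$APPTYPE CONSOLE}
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

uses
  SysUtils;

const
  HnsPerSecond = Int64(10000000); // Media Foundation time unit: 100 ns
  AacFrameSamples = 1024;         // samples per channel in one AAC-LC frame

// Duration of Samples audio samples at SampleRate, in 100-ns units (rounded down).
function SamplesToHns(Samples: Int64; SampleRate: Integer): Int64;
begin
  Result := Samples * HnsPerSecond div SampleRate;
end;

// Audio samples per video frame at FpsNum/FpsDen frames per second (rounded down).
// Returns False if the exact value is not a whole number.
function SamplesPerVideoFrame(SampleRate, FpsNum, FpsDen: Integer;
  out Samples: Int64): Boolean;
begin
  Samples := Int64(SampleRate) * FpsDen div FpsNum;
  Result := Int64(SampleRate) * FpsDen mod FpsNum = 0;
end;

var
  AacHns44k, Samples30, SamplesNtsc: Int64;
  Whole30, WholeNtsc: Boolean;

begin
  AacHns44k := SamplesToHns(AacFrameSamples, 44100);                  // 232199
  Whole30 := SamplesPerVideoFrame(44100, 30, 1, Samples30);           // 1470, exact
  WholeNtsc := SamplesPerVideoFrame(48000, 30000, 1001, SamplesNtsc); // 1601.6, not exact
  Writeln('AAC frame at 44.1 kHz: ', AacHns44k, ' x 100 ns');
  Writeln('30 fps at 44.1 kHz: ', Samples30, ' samples/frame, whole: ', Whole30);
  Writeln('29.97 fps at 48 kHz: ', SamplesNtsc, ' samples/frame, whole: ', WholeNtsc);
end.
```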

4 hours ago, Kas Ob. said: the audio sample per frame should always be even number.

I'm not sure I even have control over that. Isn't the encoder just using everything that is in the leaky bucket to write the segments in what it thinks is the right way?

Why can't you compile the code? I think your feedback would be very valuable to me. Did you try the latest version on GitHub?

https://github.com/rmesch/Bitmaps2Video-for-Media-Foundation

(there's one silly bit of code still in there that I need to take out, but it usually doesn't hurt anything)

I was hoping to have eliminated some code that prevented it from running on versions earlier than 11.3. Or have you developed an aversion to the MF headers in the meantime? 🙂

-


A first version of TBitmapEncoderWMF is now available at

https://github.com/rmesch/Bitmaps2Video-for-Media-Foundation

It only supports .mp4 and the codecs H264 and HEVC (H265) at the moment. See the readme for more details.

I had some problems getting the video stream to use the correct timing. Apparently the encoder needs to have the video and audio samples fed to it in just the right way; otherwise it drops frames or changes timestamps at its own unfathomable judgement.

I think I solved it by artificially slowing down the procedure that encodes the same frame repeatedly, and by reading ahead in the audio file by "just the right amount".

I would be interested in how it fares on systems other than mine.
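One timing detail that is easy to get wrong, shown as a minimal sketch (not necessarily the cause of the dropped frames): adding a rounded per-frame duration again and again, in 100-ns units, drifts away from the exact time, while computing each frame's start time from its index does not. `FrameTime` is a hypothetical helper.

```pascal
program FrameTimes;
{$APPTYPE CONSOLE}
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

uses
  SysUtils;

const
  HnsPerSecond = Int64(10000000); // 100-ns units per second

// Start time of frame N at FpsNum/FpsDen frames per second, in 100-ns units.
// Computed from N directly, so the rounding error stays below one unit.
function FrameTime(N: Int64; FpsNum, FpsDen: Integer): Int64;
begin
  Result := N * HnsPerSecond * FpsDen div FpsNum;
end;

var
  Duration, Accumulated, Exact: Int64;
  i: Integer;

begin
  // 29.97 fps: one frame lasts 333666.67 units, truncated to 333666.
  Duration := HnsPerSecond * 1001 div 30000;
  Accumulated := 0;
  for i := 1 to 30000 do
    Inc(Accumulated, Duration);          // adding the same rounded duration each frame
  Exact := FrameTime(30000, 30000, 1001); // 1001 seconds
  Writeln('accumulated: ', Accumulated, ', exact: ', Exact, ', drift: ', Exact - Accumulated);
end.
```

After 30000 frames (about 16.7 minutes) the accumulated time lags by 20000 units, i.e. 2 ms; the drift keeps growing with the length of the video.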

-

1 hour ago, Attila Kovacs said: I have SO account but as far as I'm concerned, you can strike for a lifetime.

What makes you so negative about this? I certainly have my problems with participating on Stack Overflow. After receiving some unfair downvotes, I stopped asking questions, and I only answer if I'm dead sure of what I say, which is maybe a good thing.

But Stack Overflow has been a valuable and mostly reliable source of information, and I would have to spend much more time finding good info if I couldn't rely on the content being mostly accurate anymore, or if the moderators stopped doing their job. I upvote every answer that has been useful, and I downvote every blatantly wrong answer, just to keep the quality up. I would hate to see ChatGPT flooding the answers.

-


10 hours ago, maXcomX said: However an av will never happen unless the API is wrong translated or when you use sloppy code.

Well, I must have written lots of sloppy code yesterday, but I can now encode audio to AAC together with video to H264 or H265. I'm using the CheckFail approach wherever possible. I wish I could keep all these attribute names in my head. I wonder whether it would be possible to collect them into records, so you could consult code completion about them.

-

1 hour ago, maXcomX said: Learn C++ I would say.

No thanks 🙂. But I've already translated parts of C++ code to Delphi. They get to use stuff like Break_On_Fail(hr) and more, which made me a bit jealous.

You wouldn't per chance know why I can't mux any audio into an HEVC-encoded video? The video stream is all there, but it seems to be missing the correct stream header. So only the audio is being played.

-

You are right. But then I have to think about whether or not a call is likely to wreak havoc, and declare several new variables.

BTW: WriteSample pushes the sample to the sinkwriter and does not just set an index.

What do you think about the following construct instead:

    procedure TBitmapEncoderWMF.WriteOneFrame;
    var
      pSample: IMFSample;
      Count: integer;
    const
      ProcName = 'TBitmapEncoderWMF.WriteOneFrame';

      procedure CheckFail(hr: HResult);
      begin
        inc(Count);
        if not succeeded(hr) then
          raise Exception.Create('Fail in call nr. ' + IntToStr(Count) + ' of ' +
            ProcName + ' with result ' + IntToStr(hr));
      end;

    begin
      Count := 0;
      // Create a media sample and add the buffer to the sample.
      CheckFail(MFCreateSample(pSample));
      CheckFail(pSample.AddBuffer(pSampleBuffer));
      CheckFail(pSample.SetSampleTime(fWriteStart));
      CheckFail(pSample.SetSampleDuration(fSampleDuration));
      // Send the sample to the Sink Writer.
      CheckFail(pSinkWriter.WriteSample(fstreamIndex, pSample));
      inc(fWriteStart, fSampleDuration);
      fVideoTime := fWriteStart div 10000;
      inc(fFrameCount);
    end;

-

What if hrSampleBuffer is not S_Ok, causing pSinkWriter.WriteSample to fail with an AV or such? You would get an exception the source of which would be harder to trace.

Or am I just too dense to understand? :) (It's late.)
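To illustrate the point with a self-contained sketch: `FakeCall` is a hypothetical stand-in for the Media Foundation calls, and `CheckFail` is modeled on the construct above but prints the HRESULT in hex. The first failing call raises immediately, so a later call such as WriteSample is never reached with an invalid sample, and the message names the call that failed.

```pascal
program CheckFailDemo;
{$APPTYPE CONSOLE}
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

uses
  SysUtils;

const
  DemoOk = HRESULT(0);
  DemoFail = HRESULT($80004005); // same value as E_FAIL

// Hypothetical stand-in for a Media Foundation call.
function FakeCall(Fails: Boolean): HRESULT;
begin
  if Fails then
    Result := DemoFail
  else
    Result := DemoOk;
end;

// Three calls in a row; FailAt (1..3) selects the one that returns an error, 0 = none.
procedure WriteOneFrameDemo(FailAt: Integer);
var
  Count: Integer;

  procedure CheckFail(hr: HRESULT);
  begin
    Inc(Count);
    if hr < 0 then // a negative HRESULT means failure, like FAILED(hr)
      raise Exception.Create('Fail in call nr. ' + IntToStr(Count) +
        ' with result $' + IntToHex(Int64(Cardinal(hr)), 8));
  end;

begin
  Count := 0;
  CheckFail(FakeCall(FailAt = 1)); // e.g. MFCreateSample
  CheckFail(FakeCall(FailAt = 2)); // e.g. AddBuffer
  CheckFail(FakeCall(FailAt = 3)); // e.g. WriteSample, never reached if 1 or 2 failed
end;

var
  LastMessage: string;

begin
  LastMessage := '';
  try
    WriteOneFrameDemo(2);
  except
    on E: Exception do
      LastMessage := E.Message;
  end;
  Writeln(LastMessage); // Fail in call nr. 2 with result $80004005
end.
```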

-

1 hour ago, Anders Melander said: I would suggest you add it as a Git submodule.

Thanks, that sounds like just the thing I need. Now I just need to figure out how.

Error: uToolsWMF uses a unit Z_prof. That entry can safely be deleted; it comes from having too much stuff in the path.

In the meantime, I think I figured out how to improve the encoding quality.

Around line 270 in uBitmaps2VideoWMF.pas make the following changes:

    if succeeded(hr) then
      hr := MFCreateAttributes(attribs, 4); //<--------- change to 4 here
    // this enables hardware encoding, if the GPU supports it
    if succeeded(hr) then
      hr := attribs.SetUINT32(MF_READWRITE_ENABLE_HARDWARE_TRANSFORMS, UInt32(True));
    // this seems to improve the quality of H264 and H265 encodings:
    {*************** add this *********************************}
    // this enables the encoder to use quality-based settings
    // (3 = eAVEncCommonRateControlMode_Quality)
    if succeeded(hr) then
      hr := attribs.SetUINT32(CODECAPI_AVEncCommonRateControlMode, 3);
    {**************** /add this *******************************}
    if succeeded(hr) then
      hr := attribs.SetUINT32(CODECAPI_AVEncCommonQuality, 100);
    if succeeded(hr) then
      hr := attribs.SetUINT32(CODECAPI_AVEncCommonQualityVsSpeed, 100);

Besides, I'm getting sick and tired of all those "if succeeded"...

-

I have been working on a port of my Bitmaps2Video encoder to Windows Media Foundation instead of ffmpeg, since I wanted to get rid of having to use all those DLLs.

Now, before posting it on GitHub, I'd like to run it by the community because of my limited testing possibilities. I also hope that there are people out there with more experience with MF who could give some suggestions on the remaining problems (see below).

Learning how to use Media Foundation almost drove me nuts several times, because of the poor quality of the documentation and the lack of examples.

What it does:

Encodes a series of bitmaps to video, with the user interface only requiring basic knowledge about videos.

Can do 2 kinds of transitions between bitmaps, as an example of how to add more.

Supports the file formats .mp4 with the encoders H264 or H265, or .wmv with the encoder WMV3.

Does hardware encoding if your GPU supports it, and falls back to software encoding otherwise.

Uses parallel routines wherever that makes sense.

Experimental routine to mux in mp3 audio. It only works for H264 and WMV3 right now.

Requirements:

VCL-based.

Needs the excellent MF headers available at https://github.com/FactoryXCode/MfPack.

Add the src folder of MFPack to the library path; there is no need to install a package.

Needs to run on Windows 10 or higher to make use of all features.

I'm not sure which Delphi version is required; I guess XE3 or later at the very least.

Problems remaining:

I'm not too thrilled about the encoding quality. Might be a problem with my NVIDIA card.

The audio muxer should work for H265, because it works when I use ffmpeg. But with my present routine, the result just plays the audio and shows no video.

I haven't yet figured out how to insert video clips. The major problem I see is adjusting the frame rate.

Renate
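One simple way to adapt a clip's frame rate, sketched under the assumption that frames are just repeated or dropped (no blending): output frame N starts at N/OutFps seconds, and the source frame visible at that moment has index floor(N * InFps / OutFps). Frame rates are passed as numerator/denominator pairs so that rates like 30000/1001 stay exact. `SourceFrameIndex` is a hypothetical name, not part of the encoder.

```pascal
program FrameRateMap;
{$APPTYPE CONSOLE}
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

uses
  SysUtils;

// Index of the source frame that is on screen when output frame N starts.
// Output frame N starts at N * OutDen / OutNum seconds; the source frame visible then is
// floor(time * InNum / InDen). Frames are repeated when the source rate is lower and
// skipped when it is higher. Assumes N >= 0 and positive rates.
function SourceFrameIndex(N, InNum, InDen, OutNum, OutDen: Int64): Int64;
begin
  Result := N * InNum * OutDen div (InDen * OutNum);
end;

var
  Up: array[0..6] of Int64;   // 25 fps clip in a 30 fps video
  Down: array[0..5] of Int64; // 30 fps clip in a 25 fps video
  i: Integer;

begin
  for i := 0 to High(Up) do
    Up[i] := SourceFrameIndex(i, 25, 1, 30, 1);   // 0 0 1 2 3 4 5
  for i := 0 to High(Down) do
    Down[i] := SourceFrameIndex(i, 30, 1, 25, 1); // 0 1 2 3 4 6
  for i := 0 to High(Up) do
    Write(Up[i], ' ');
  Writeln;
  for i := 0 to High(Down) do
    Write(Down[i], ' ');
  Writeln;
end.
```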

-


14 hours ago, Remy Lebeau said: You don't need to scan the full 2nd level right away, though.

Good idea. Going back to FindFirst/FindNext might have been the better design right from the beginning, but right now I'm satisfied with the speed. I just had to fix the VCL tree, because I forgot that the darn thing recreates its window handle on any DPI change. Arghh!
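A minimal sketch of the lazy approach: read only the immediate subdirectories of a folder with FindFirst/FindNext, and call it again when a tree node is expanded. `AddSubdirs` is a hypothetical name; the demo creates a small folder structure in the current directory and removes it again.

```pascal
program ListSubdirs;
{$APPTYPE CONSOLE}
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

uses
  SysUtils, Classes;

// Adds the names of the immediate subdirectories of Dir to List (one level only),
// so it can be called again whenever a tree node gets expanded.
procedure AddSubdirs(const Dir: string; List: TStrings);
var
  SR: TSearchRec;
begin
  if FindFirst(IncludeTrailingPathDelimiter(Dir) + '*', faDirectory, SR) = 0 then
  try
    repeat
      // Normal files are returned as well, so check the attribute explicitly.
      if ((SR.Attr and faDirectory) <> 0) and (SR.Name <> '.') and (SR.Name <> '..') then
        List.Add(SR.Name);
    until FindNext(SR) <> 0;
  finally
    FindClose(SR);
  end;
end;

var
  Root, Found: string;
  Names: TStringList;

begin
  Root := 'subdir_demo';
  ForceDirectories(Root + PathDelim + 'a' + PathDelim + 'deep');
  ForceDirectories(Root + PathDelim + 'b');
  Names := TStringList.Create;
  try
    AddSubdirs(Root, Names);
    Names.Sort;
    Found := Names.CommaText; // 'a,b': 'deep' is on the second level and not read
  finally
    Names.Free;
  end;
  Writeln(Found);
  // Clean up the demo folders again.
  RemoveDir(Root + PathDelim + 'a' + PathDelim + 'deep');
  RemoveDir(Root + PathDelim + 'a');
  RemoveDir(Root + PathDelim + 'b');
  RemoveDir(Root);
end.
```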

-

Bitmaps2Video for Windows Media Foundation (in I made this)

But you would have to tell the encoder that every frame is a key frame; otherwise it only stores the differences. But it can be done...

Also, access to the frames for decoding would need to be sped up. But this way you could create a stream of custom-compressed images.

Of course, no other app would be able to use this format.