Jud

Posts posted by Jud

-

That is what Delphi Basics says, and it appears to be right. OTOH, if in the Delphi IDE you do F1 for help on BlockWrite (or BlockRead), it says that it is an integer function, and the source code for the system unit shows it as an integer function.

-

1 hour ago, David Heffernan said: Depending on what you do when you query these bits you may find that the memory is the bottleneck and threading won't help. Did you do any benchmarking yet?

Also if performance matters then Delphi is invariably not the answer.

Actually I did benchmarking. The first version of this program read a 30GB boolean array from a drive and used that. Naturally I wrote a single-threaded version before multithreading. I forget the exact ratio, but 20 threads on a 12th generation i7 was something like 8-9x faster than the single-threaded version. Now I'm working on replacing the 30GB boolean array with a much larger bit vector.

-

49 minutes ago, David Heffernan said: Depending on what you do when you query these bits you may find that the memory is the bottleneck and threading won't help. Did you do any benchmarking yet?

Also if performance matters then Delphi is invariably not the answer.

No benchmarking with the bit vector yet, but I've definitely encountered the memory bottleneck. In old tests with 8 threads (hyperthreaded 4-core i7), I would get about 5.5x over a single thread on CPU-intensive tasks. But on tests that were memory-intensive, I would get around 3x on 16 threads.

-

9 hours ago, David Heffernan said:

    unit Bitset;

    interface

    uses
      SysUtils, Math;

    type
      TBitSet = record
      private
        FBitCount: Int64;
        FSets: array of set of 0..255;
        class function SetCount(BitCount: Int64): Int64; static;
        procedure MakeUnique;
        procedure GetSetIndexAndBitIndex(Bit: Int64; out SetIndex: Int64; out BitIndex: Integer);
        function GetIsEmpty: Boolean;
        procedure SetBitCount(Value: Int64);
        function GetSize: Int64;
      public
        class operator In(const Bit: Int64; const BitSet: TBitSet): Boolean;
        class operator Equal(const bs1, bs2: TBitSet): Boolean;
        class operator NotEqual(const bs1, bs2: TBitSet): Boolean;
        property BitCount: Int64 read FBitCount write SetBitCount;
        property Size: Int64 read GetSize;
        property IsEmpty: Boolean read GetIsEmpty;
        procedure Clear;
        procedure IncludeAll;
        procedure Include(const Bit: Int64);
        procedure Exclude(const Bit: Int64);
      end;

    implementation

    { TBitSet }

    procedure TBitSet.MakeUnique;
    begin
      // this is used to implement copy-on-write so that the type behaves like a value
      SetLength(FSets, Length(FSets));
    end;

    procedure TBitSet.GetSetIndexAndBitIndex(Bit: Int64; out SetIndex: Int64; out BitIndex: Integer);
    begin
      Assert(InRange(Bit, 0, FBitCount-1));
      SetIndex := Bit shr 8;   // shr 8 = div 256
      BitIndex := Bit and 255; // and 255 = mod 256
    end;

    function TBitSet.GetIsEmpty: Boolean;
    var
      i: Int64;
    begin
      for i := 0 to High(FSets) do begin
        if FSets[i]<>[] then begin
          Result := False;
          Exit;
        end;
      end;
      Result := True;
    end;

    procedure TBitSet.SetBitCount(Value: Int64);
    var
      Bit, BitIndex: Integer;
      SetIndex: Int64;
    begin
      if (Value<>FBitCount) or not Assigned(FSets) then begin
        Assert(Value>=0);
        FBitCount := Value;
        SetLength(FSets, SetCount(Value));
        if Value>0 then begin
          (* Ensure that unused bits are cleared, necessary given the CompareMem call in Equal.
             This also means that state does not persist when we decrease and then increase
             BitCount. For instance, consider this code:
               var
                 bs: TBitSet;
               ...
               bs.BitCount := 2;
               bs.Include(1);
               bs.BitCount := 1;
               bs.BitCount := 2;
               Assert(not (1 in bs)); *)
          GetSetIndexAndBitIndex(Value - 1, SetIndex, BitIndex);
          for Bit := BitIndex + 1 to 255 do begin
            System.Exclude(FSets[SetIndex], Bit);
          end;
        end;
      end;
    end;

    function TBitSet.GetSize: Int64;
    begin
      Result := Length(FSets)*SizeOf(FSets[0]);
    end;

    class function TBitSet.SetCount(BitCount: Int64): Int64;
    begin
      Result := (BitCount + 255) shr 8; // shr 8 = div 256
    end;

    class operator TBitSet.In(const Bit: Int64; const BitSet: TBitSet): Boolean;
    var
      SetIndex: Int64;
      BitIndex: Integer;
    begin
      BitSet.GetSetIndexAndBitIndex(Bit, SetIndex, BitIndex);
      Result := BitIndex in BitSet.FSets[SetIndex];
    end;

    class operator TBitSet.Equal(const bs1, bs2: TBitSet): Boolean;
    begin
      Result := (bs1.FBitCount=bs2.FBitCount)
        and CompareMem(Pointer(bs1.FSets), Pointer(bs2.FSets), bs1.Size);
    end;

    class operator TBitSet.NotEqual(const bs1, bs2: TBitSet): Boolean;
    begin
      Result := not (bs1=bs2);
    end;

    procedure TBitSet.Clear;
    var
      i: Int64;
    begin
      MakeUnique;
      for i := 0 to High(FSets) do begin
        FSets[i] := [];
      end;
    end;

    procedure TBitSet.IncludeAll;
    var
      i: Int64;
    begin
      for i := 0 to BitCount-1 do begin
        Include(i);
      end;
    end;

    procedure TBitSet.Include(const Bit: Int64);
    var
      SetIndex: Int64;
      BitIndex: Integer;
    begin
      MakeUnique;
      GetSetIndexAndBitIndex(Bit, SetIndex, BitIndex);
      System.Include(FSets[SetIndex], BitIndex);
    end;

    procedure TBitSet.Exclude(const Bit: Int64);
    var
      SetIndex: Int64;
      BitIndex: Integer;
    begin
      MakeUnique;
      GetSetIndexAndBitIndex(Bit, SetIndex, BitIndex);
      System.Exclude(FSets[SetIndex], BitIndex);
    end;

    end.

This is based on code of mine that is limited to integer bit count. I've not tested it extended to Int64, but I'm sure anyone that wanted to use random code like this would test.

That looks like what I'm looking for!!! I'm going to try it shortly. Thanks!


-

If I do "Find Declaration" on BlockWrite, it goes to an integer function:

function _BlockWrite(var F: TFileRec; Buffer: Pointer; RecCnt: Integer; RecsWritten: PInteger): Integer;

-

11 hours ago, Rollo62 said: Like said, just an alternative idea, with pros and cons.

Only @Jud may know if this is workable for him somehow, or not.

I doubt that it would be workable, since they are naturally one-dimensional, and the program just needs to see if a bit is 1 or 0, and it needs to do a large number of those checks.

-

12 hours ago, David Heffernan said: What about the cost of moving data between GPU and main memory? Benefits of GPU are in highly parallel computation. Where is that in this scenario? Just saying GPU doesn't make something fast or efficient.

Interesting idea, but I don't know how to deal with the GPU. This will be running 20 instances in parallel, but the accesses will be pretty much random. The target calls for doing at least 10 trillion accesses.

-

17 hours ago, David Heffernan said: No. You use a hashed collection. Like a dictionary, but one without a value, which is known as a set.

But at 5% full then I don't think it will get you any benefit, because of the overhead of the hashed collection.

Performance is an issue. The plan is to do at least 10 trillion accesses to the bit vector - more if I can manage it.
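The hashed-collection idea in the quote above can be sketched as follows. This is only an illustration, assuming `TDictionary<Int64, Boolean>` from `System.Generics.Collections` as a stand-in for a set (the Boolean value is unused); the program name and the sample indices are made up. Taking the roughly 300 billion bits and 5% density mentioned elsewhere in these posts, a plain bit vector needs about 37.5 GB, while 15 billion stored Int64 keys need at least 120 GB before any hash-table overhead, which is consistent with the quoted doubt that this approach helps at that density.

```pascal
program SparseOnesDemo;
{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.Generics.Collections;

var
  // Only the indices of the 1 bits are stored; the Boolean value is a dummy.
  Ones: TDictionary<Int64, Boolean>;
begin
  Ones := TDictionary<Int64, Boolean>.Create;
  try
    // "Set" two bits by storing their indices (sample values only).
    Ones.AddOrSetValue(5, True);
    Ones.AddOrSetValue(300000000000, True);

    // A bit counts as 1 if its index is present and as 0 otherwise.
    Writeln('bit 5 set: ', Ones.ContainsKey(5));
    Writeln('bit 6 set: ', Ones.ContainsKey(6));
    Writeln('stored entries: ', Ones.Count);
  finally
    Ones.Free;
  end;
end.
```

Each lookup also costs a hash computation and at least one probe, compared with a shift and a mask for a bit vector, which matters when the target is trillions of accesses.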

-

15 hours ago, David Heffernan said: Show complete but minimal code please

Here is a short demo. With Delphi 11.3 I get a compiler error on the line that uses BlockWrite as a function. The documentation says that BlockWrite is a function that returns an integer.

procedure ErrorDemo;

var CountNewData, WriteResult : integer;

OutFile : file;

NewData : array of int64;

begin

AssignFile( OutFile, 'c:\NewData.data');

rewrite( OutFile, 8);

WriteResult := BlockWrite( OutFile, NewData[ 0], CountNewData, WriteResult);

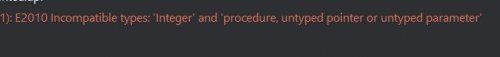

{ compiler error - E2010 incompatible types - integer and procedure }

BlockWrite( OutFile, NewData[ 0], CountNewData, WriteResult); // no error

CloseFile( OutFile);

end; -

39 minutes ago, Uwe Raabe said: Hard to say without testing. It is an intrinsic function, so we cannot see what is done internally.

It is a compiler error - not a runtime error.

-

This is the first time I'm using BlockRead and BlockWrite in Delphi 11.3. I've used them in previous versions. I thought they were functions, returning an integer. The help file says so and the source code says so. But I'm getting an E2010 error when trying to assign the result to an integer, e.g.

RecordsWritten := BlockWrite( OutFile, ...

Just calling it like a procedure works. I'm attaching a screenshot of the error message. Is this a bug or am I wrong?
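For reference, a minimal sketch of the procedure-style call that does compile, with the optional fourth `var` argument receiving the number of records written. The program name, file name, and sample data are hypothetical, and this only illustrates the calling form described above; it does not explain why the help text shows a function signature.

```pascal
program BlockWriteDemo;
{$APPTYPE CONSOLE}

uses
  System.SysUtils;

const
  FileName = 'NewData.data';  // hypothetical name, created in the current directory

var
  OutFile: file;
  NewData: array of Int64;
  I, RecordsWritten: Integer;
begin
  // Fill a small buffer so that NewData[0] is a valid element.
  SetLength(NewData, 4);
  for I := 0 to High(NewData) do
    NewData[I] := I * 10;

  AssignFile(OutFile, FileName);
  Rewrite(OutFile, SizeOf(Int64));  // untyped file with a record size of 8 bytes
  try
    // Called as a statement; RecordsWritten receives the count of complete records written.
    BlockWrite(OutFile, NewData[0], Length(NewData), RecordsWritten);
  finally
    CloseFile(OutFile);
  end;

  if RecordsWritten <> Length(NewData) then
    Writeln('Short write: ', RecordsWritten, ' of ', Length(NewData), ' records')
  else
    Writeln('Wrote ', RecordsWritten, ' records');
end.
```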

-

3 hours ago, Uwe Raabe said: Wow! If my math is correct that needs more than 32GB of memory.

I have no idea what the purpose is, but perhaps there are other approaches to achieve the same.

1. 32GB is nothing today. Every computer here that it might be running on has at least 128GB of RAM.

2. No, this is the best approach.

-

32 minutes ago, David Heffernan said: How many such instances of this type do you need in memory at any time? And what's the expected number of bits that are set at any time? Do you really need to store all bits, both 0 and 1? Can't you just store the 1s and infer the 0s from the fact that they aren't stored as 1s?

I estimate that about 5% of the bits will be 1s. They will be read-only in the actual run, so the data will be shared among 20 threads. I don't understand how storing just the 1s would work. That would result in a series of about 15,000,000 1s, but that would be useless.

-

10 hours ago, Der schöne Günther said: The maximum size TBits can be before bugging out is Integer.MaxValue - 31. That's roughly 256 Megabytes of consecutive boolean storage space. You really need that much?

Yes, I need more. I currently need about 300 billion bits. I can write it myself, but I thought there might be one already available.

-

TBits is still limited to 2^31 bits, even on the 64-bit platform. Is there a replacement for it that will allow > 2^32 bits (i.e. Int64 indexing)?

(I could write one, but a lot of people are more efficient with this kind of thing than I.)
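As a rough idea of what such a replacement might look like, here is a minimal sketch of an Int64-indexed bit vector stored in a dynamic array of UInt64 words. `TBigBits` and its method names are hypothetical, not an RTL type. It assumes a 64-bit target so that the array length can exceed 2^31 elements, and it has no copy-on-write or resizing logic. The demo uses only 1000 bits so that it runs anywhere.

```pascal
program BigBitsDemo;
{$APPTYPE CONSOLE}

uses
  System.SysUtils;

type
  // Hypothetical type: a fixed-size bit vector indexed by Int64.
  TBigBits = record
  private
    FWords: array of UInt64;  // 64 bits per array element
    FBitCount: Int64;
  public
    procedure SetSize(ABitCount: Int64);
    procedure SetBit(Index: Int64);
    procedure ClearBit(Index: Int64);
    function GetBit(Index: Int64): Boolean;
    property BitCount: Int64 read FBitCount;
  end;

// Mask selecting the bit inside its 64-bit word (Index mod 64).
function BitMask(Index: Int64): UInt64;
begin
  Result := UInt64(1) shl Integer(Index and 63);
end;

procedure TBigBits.SetSize(ABitCount: Int64);
begin
  Assert(ABitCount >= 0);
  FBitCount := ABitCount;
  FWords := nil;                             // drop old contents
  SetLength(FWords, (ABitCount + 63) shr 6); // new elements are zero-filled
end;

procedure TBigBits.SetBit(Index: Int64);
begin
  Assert((Index >= 0) and (Index < FBitCount));
  FWords[Index shr 6] := FWords[Index shr 6] or BitMask(Index);
end;

procedure TBigBits.ClearBit(Index: Int64);
begin
  Assert((Index >= 0) and (Index < FBitCount));
  FWords[Index shr 6] := FWords[Index shr 6] and not BitMask(Index);
end;

function TBigBits.GetBit(Index: Int64): Boolean;
begin
  Assert((Index >= 0) and (Index < FBitCount));
  Result := (FWords[Index shr 6] and BitMask(Index)) <> 0;
end;

var
  Bits: TBigBits;
begin
  Bits.SetSize(1000);   // small size for the demo only
  Bits.SetBit(0);
  Bits.SetBit(999);
  Bits.ClearBit(0);
  Writeln('bit 999 set: ', Bits.GetBit(999));
  Writeln('bit 0 set: ', Bits.GetBit(0));
end.
```

If the bits are only read during the actual run, concurrent `GetBit` calls from many threads need no locking; concurrent `SetBit` calls on the same word would.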

-

On 6/24/2023 at 4:01 AM, dummzeuch said: There are usually some tasks that do not need to run on the performance cores, so setting the affinity mask for the whole program may not be the best strategy, even though it's the easiest way. But I'm sure that sooner or later Windows will start ignoring those masks because everybody sets them.

Of course this is currently the only way to do that for threads generated using parallel for.

Well, I need all of the power I have available.

BUT - I realized a fallacy in my thinking and analysis. I assumed that if I ran 16 threads with the parallel for loop, they would all be on performance cores, and that if I ran 20 threads, the extra 4 would go to the efficient cores. But after more experimentation, parallel for seems to put the threads on any core. I thought of timing how long each thread took to run, and with, say, 20 threads, there wasn't that much difference. And running 20 threads with parallel for (with 8 P-cores and 4 E-cores), the performance was 9-10% better than running 16 threads. So it is using the E-cores too. And running 100 or so threads, it pretty much evens out the difference between the E and P cores (because the ones on the P-cores finish sooner and get assigned another task.)
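The per-task timing mentioned above could be done along these lines. This is a sketch only, assuming `TParallel.For` from `System.Threading` and `TStopwatch` from `System.Diagnostics`; the task count and the dummy CPU-bound loop are placeholders for the real workload. The thread pool decides how many iterations actually run at once, so the times reflect scheduling as well as core speed.

```pascal
program ParallelTimingDemo;
{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.Threading, System.Diagnostics;

const
  TaskCount = 20;  // placeholder: number of equally sized tasks

var
  ElapsedMs: array[0..TaskCount - 1] of Int64;  // one slot per task, so no locking is needed
  Sums: array[0..TaskCount - 1] of Int64;       // keeps the dummy result alive
  I: Integer;
begin
  TParallel.For(0, TaskCount - 1,
    procedure(Index: Integer)
    var
      Watch: TStopwatch;
      J: Integer;
      Sum: Int64;
    begin
      Watch := TStopwatch.StartNew;
      // Dummy CPU-bound work of identical size for every task.
      Sum := 0;
      for J := 1 to 50000000 do
        Sum := Sum + (J xor Index);
      Sums[Index] := Sum;
      ElapsedMs[Index] := Watch.ElapsedMilliseconds;
    end);

  for I := 0 to TaskCount - 1 do
    Writeln(Format('task %2d: %d ms', [I, ElapsedMs[I]]));
end.
```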

-

5 hours ago, DelphiUdIT said: You can try to call this:

    function uCoreId: uint64; register;
    asm
      //from 10 gen it reads the IA32_TSC_AUX MSR which should theoretically
      //indicate the CORE (THREAD in the case of HyperThread processors) in which rdpid runs
      rdpid RAX;
    end;

This function returns the ID of the core (meaning the core thread) on which rdpid runs. It works from Intel 10th generation CPUs onward.

The first Core Thread is numbered 0 (zero).

You will see that the efficient cores will also sometimes be used.

This is because the ThreadDirector allocates threads (meaning processes) based on various factors. The distribution is not predictable.

If you want to avoid using the efficient cores you have to use the affinity mask (for the whole program) and select only the performance cores.

P.S.: this is for WIN64 program.

That is what I'm using. I've done some testing with the parallel for, for different numbers of threads, say 1..16, 1..20, 1..100, 1..500, etc. All I used was the time to complete the run, with each task being the same size. If I'm running 20 threads on a CPU with 8 performance cores and 4 efficient cores, it seems to assign the tasks across all cores. When the number of tasks gets into the hundreds, it still assigns tasks to the efficient cores, but the performance cores are reassigned new tasks when they finish while the efficient cores are still running. So when the number of tasks is in the hundreds (or more), the load seems to balance naturally among the cores.
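For completeness, the affinity-mask approach from the quote might look like the sketch below on Windows, using `GetProcessAffinityMask` and `SetProcessAffinityMask` from `Winapi.Windows`. The mask value `$FFFF` is purely an assumption: it supposes that logical processors 0..15 are the hyperthreaded performance cores, which has to be verified on the actual machine.

```pascal
program AffinityDemo;
{$APPTYPE CONSOLE}

uses
  Winapi.Windows, System.SysUtils;

const
  // ASSUMPTION: logical processors 0..15 are the P-core threads on this machine.
  AssumedPCoreMask: DWORD_PTR = $FFFF;

var
  ProcessMask, SystemMask, NewMask: DWORD_PTR;
begin
  // Ask which logical processors exist and which the process may currently use.
  if not GetProcessAffinityMask(GetCurrentProcess, ProcessMask, SystemMask) then
    RaiseLastOSError;

  // Never request processors that the system does not report.
  NewMask := AssumedPCoreMask and SystemMask;
  if NewMask = 0 then
  begin
    Writeln('Assumed mask matches no available processor; affinity left unchanged.');
    Halt(1);
  end;

  // Restrict every thread of this process to the selected logical processors.
  if not SetProcessAffinityMask(GetCurrentProcess, NewMask) then
    RaiseLastOSError;

  Writeln(Format('Process affinity mask set to $%x', [Int64(NewMask)]));
end.
```

Given the measurements reported above, where 20 threads beat 16 by 9-10%, restricting the process this way would probably cost throughput in this particular workload.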

-

20 hours ago, dwrbudr said:Isn't that an OS job to do?

I don't know how it is handled, which is why I was asking.

-

Recent models of Intel processors (12th and 13th generations) have Performance cores and Efficient cores. How does Delphi (11.3 in particular) handle this with the parallel for? Does it assign tasks only to performance cores or all cores?

-

Sorry for the delay, but that fixed it.

-

Thanks. After I had posted the message and two updates, I searched for the words in the error message and found that the problem had been answered:

"That is known (reported issue). There is some problem with migrating or applying Welcome screen layout after migration.

When you launch IDE click Edit Layout on Welcome Screen. Reset Welcome Screen to default layout and then adjust it again the way you like it.

Next time you start IDE it should run normally."

-

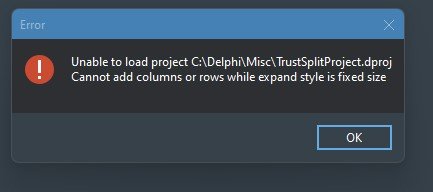

I also get this error when trying to start a new VCL project.

Can someone help?

-

I moved from an old computer to a new one, and I get the following message about not being able to add columns or rows when I try to bring up a VCL project that worked on the old computer. I used the migration tool to copy my settings to the new computer.

How can this be fixed?

Also, I get a bunch of access errors after this.

PS - also, this seems to happen with every VCL project but not with console apps.

BlockRead & BlockWrite - E2010 error

in RTL and Delphi Object Pascal

Posted · Edited by Jud

You may not have installed the help file and the source code for the units.