Kas Ob.

-

Content Count

579 -

Joined

-

Last visited

-

Days Won

10

Posts posted by Kas Ob.

-

-

33 minutes ago, dummzeuch said:If I remember correctly there is no way to access the compiler messages through the NTA because the output goes to a modified VirtualStringTree

I remember i already shared here in the forum a way to hook compiler messages, not OTA nor NTA, but good old fashion hooking.

3 hours ago, GabrielMoraru said:interact with the compilation/debugging system

interacting might be hard to very hard to pin, but getting compiler message(s) is doable, well, tested on few old IDEs, up to XE8, if that will help then i can search for that fun project and try to adjust it for you.

-

Here another and simpler example, try this interface with the above function

TInterfacedObjectFooBar = class(TInterfacedObject, IBar, IFoo) procedure DoSomething; end; TInterfacedObjectFooBarEx = class(TInterfacedObjectFooBar, IBar, IFoo) procedure DoSomething; end;

And its result

Implemented interfaces in TInterfacedObjectFooBar 0. GUID = {11111111-0000-0000-0000-000000000001} VMT addr: 004D3160 offest: 12 1. GUID = {22222222-0000-0000-0000-000000000002} VMT addr: 004D3170 offest: 16 Implemented interfaces in TInterfacedObject 0. GUID = {00000000-0000-0000-C000-000000000046} VMT addr: 00402358 offest: 8 Implemented interfaces in TInterfacedObjectFooBarEx 0. GUID = {11111111-0000-0000-0000-000000000001} VMT addr: 004D32F8 offest: 20 1. GUID = {22222222-0000-0000-0000-000000000002} VMT addr: 004D3308 offest: 24 Implemented interfaces in TInterfacedObjectFooBar 0. GUID = {11111111-0000-0000-0000-000000000001} VMT addr: 004D3160 offest: 12 1. GUID = {22222222-0000-0000-0000-000000000002} VMT addr: 004D3170 offest: 16 Implemented interfaces in TInterfacedObject 0. GUID = {00000000-0000-0000-C000-000000000046} VMT addr: 00402358 offest: 8So make sure you are looking at the right VMT, remember that TObject VMT order is irrelevant with its interfaces VMTs structure and order.

-

8 hours ago, Memnarch said:That is not right.

It is right.

8 hours ago, Memnarch said:My method, when using the IUnknown from System.pas, is still the fourth method in the table. All those interfaces declared in the API deriving from IUnknown expect to have their first method, being the fourth in the table, too. And on the Windows implementing side, it is exactly this. They derive from IUnknown, which has 3 methods and does not derive from anything else. So the windows interfaces start with 4 as their first method slot, just like any interface you write in Delphi.

However, given that IUnknown was just copied from the metadata during generation, in Delphi it derives from IInterface, which is Delphis "IUnknown". That way, it introduces 3 more methods to the table, making DoSomething of IFoo the 7th method. Therefore on the delphi side if you call DoSomething on the interface coming from a windows api class, you're off by 3 and call something entirely else.

OK, hold your horses here for second, and i want you to check if you are checking the VMT for the interfaces or something else like TObject, also are they named (with TGUID attached) ?

I recommend that you go to this blog post and and read this very article and study the output carefully, try to get how interface inheritance and their VMT works.

Here a modified example from the blog

program Project6; {$APPTYPE CONSOLE} uses Classes, SysUtils, TypInfo, ComObj; type IFoo = interface ['{11111111-0000-0000-0000-000000000001}'] procedure DoSomething; end; IBar = interface ['{22222222-0000-0000-0000-000000000002}'] procedure DoSomething; end; TFooBar = class(TObject, IUnknown, IFoo, IBar) function QueryInterface(const IID: TGUID; out Obj): HResult; stdcall; function _AddRef: Integer; stdcall; function _Release: Integer; stdcall; procedure DoSomething; end; TBarFoo = class(TObject, IBar, IFoo, IUnknown) function QueryInterface(const IID: TGUID; out Obj): HResult; stdcall; function _AddRef: Integer; stdcall; function _Release: Integer; stdcall; procedure DoSomething; end; TFooBarOnly = class(TObject, IFoo, IBar) function QueryInterface(const IID: TGUID; out Obj): HResult; stdcall; function _AddRef: Integer; stdcall; function _Release: Integer; stdcall; procedure DoSomething; end; TBarFooOnly = class(TObject, IBar, IFoo) function QueryInterface(const IID: TGUID; out Obj): HResult; stdcall; function _AddRef: Integer; stdcall; function _Release: Integer; stdcall; procedure DoSomething; end; procedure DumpInterfaces(AClass: TClass); var i: integer; InterfaceTable: PInterfaceTable; InterfaceEntry: PInterfaceEntry; begin while Assigned(AClass) do begin InterfaceTable := AClass.GetInterfaceTable; if Assigned(InterfaceTable) then begin writeln('Implemented interfaces in ', AClass.ClassName); for i := 0 to InterfaceTable.EntryCount - 1 do begin InterfaceEntry := @InterfaceTable.Entries[i]; Write(Format('%d. GUID = %s', [i, GUIDToString(InterfaceEntry.IID)])); Writeln(' VMT addr: ', IntToHex(Cardinal(InterfaceEntry.VTable), SizeOf(Pointer) * 2), ' offest: ', InterfaceEntry.IOffset); end; end; AClass := AClass.ClassParent; end; writeln; end; { TFooBar } procedure TFooBar.DoSomething; begin end; function TFooBar.QueryInterface(const IID: TGUID; out Obj): HResult; begin end; function TFooBar._AddRef: Integer; begin end; function TFooBar._Release: Integer; begin end; { TBarFoo } procedure TBarFoo.DoSomething; begin end; function TBarFoo.QueryInterface(const IID: TGUID; out Obj): HResult; begin end; function TBarFoo._AddRef: Integer; begin end; function TBarFoo._Release: Integer; begin end; { TBarFooOnly } procedure TBarFooOnly.DoSomething; begin end; function TBarFooOnly.QueryInterface(const IID: TGUID; out Obj): HResult; begin end; function TBarFooOnly._AddRef: Integer; begin end; function TBarFooOnly._Release: Integer; begin end; { TFooBarOnly } procedure TFooBarOnly.DoSomething; begin end; function TFooBarOnly.QueryInterface(const IID: TGUID; out Obj): HResult; begin end; function TFooBarOnly._AddRef: Integer; begin end; function TFooBarOnly._Release: Integer; begin end; begin DumpInterfaces(TComponent); DumpInterfaces(TComObject); DumpInterfaces(TComObjectFactory); DumpInterfaces(TFooBar); DumpInterfaces(TBarFoo); DumpInterfaces(TFooBarOnly); DumpInterfaces(TBarFooOnly); readln; end.

The output from my XE8

Implemented interfaces in TComponent 0. GUID = {E28B1858-EC86-4559-8FCD-6B4F824151ED} VMT addr: 0048C71C offest: 56 1. GUID = {00000000-0000-0000-C000-000000000046} VMT addr: 0048C72C offest: 60 Implemented interfaces in TComObject 0. GUID = {DF0B3D60-548F-101B-8E65-08002B2BD119} VMT addr: 004CF25C offest: 24 1. GUID = {00000000-0000-0000-C000-000000000046} VMT addr: 004CF26C offest: 28 Implemented interfaces in TComObjectFactory 0. GUID = {B196B28F-BAB4-101A-B69C-00AA00341D07} VMT addr: 004CF93C offest: 72 1. GUID = {00000001-0000-0000-C000-000000000046} VMT addr: 004CF93C offest: 72 2. GUID = {00000000-0000-0000-C000-000000000046} VMT addr: 004CF93C offest: 72 Implemented interfaces in TFooBar 0. GUID = {22222222-0000-0000-0000-000000000002} VMT addr: 004D2880 offest: 4 1. GUID = {11111111-0000-0000-0000-000000000001} VMT addr: 004D2890 offest: 8 2. GUID = {00000000-0000-0000-C000-000000000046} VMT addr: 004D2880 offest: 4 Implemented interfaces in TBarFoo 0. GUID = {00000000-0000-0000-C000-000000000046} VMT addr: 004D2AC4 offest: 4 1. GUID = {11111111-0000-0000-0000-000000000001} VMT addr: 004D2AC4 offest: 4 2. GUID = {22222222-0000-0000-0000-000000000002} VMT addr: 004D2AD4 offest: 8 Implemented interfaces in TFooBarOnly 0. GUID = {22222222-0000-0000-0000-000000000002} VMT addr: 004D2D08 offest: 4 1. GUID = {11111111-0000-0000-0000-000000000001} VMT addr: 004D2D18 offest: 8 Implemented interfaces in TBarFooOnly 0. GUID = {11111111-0000-0000-0000-000000000001} VMT addr: 004D2F34 offest: 4 1. GUID = {22222222-0000-0000-0000-000000000002} VMT addr: 004D2F44 offest: 8Now to simplify your miss understanding, if what you said about off by 3 then the position of the interface (order) in the declaration will matter, and that can't be, right ?

-

Hi,

6 hours ago, Memnarch said:So unless I am missing something crucial, this IUnknown-Interface is adding the same 3 methods it already has thorugh the IInterface-Baseinterface and offsets the entire VMT by 3 entries across the board.

And by adding/removing Windows.Foundation from my uses I can make the copy of the interface work/break at will. So this seems like an oversight and that IUnknown should just inherit from IInterface or be an alias, or am I wrong?VMT is not a problem here, VMT for such interfaces are different beast from VMT for objects/classes, VMT tables with COM interfaces (interfaces with GUID which i like to call "named interfaces" or "IDed interfaces") are separated into their own tables identified by their GUID, even for one object/interface, so VMT will be alright no matter what inheritance is there, also VMT for each interface are agnostic for other GUID declaration.

As for the interfaces you listed IInterface and IUnknown, this might be a problem as they declared with the same GUID (TGUID) hence they will compete to replace one another, they are identical in structure but different in parameters (declaration), so they will work unless the compiler will complain about stuff like Integer vs Cardinal or TGUID vs PGUID..., the problem here is how compiler see them and when.

I might be wrong here, but the fix should be removing Windows.Foundation.IUnknown , in other words, the already known interfaces should not be redeclared/generated.

-

Also i can't help here, but have question;

Are these repositories referring to the same QuickJS ?

-

3 minutes ago, Tommi Prami said:As I understand what they say, for some ypou would need multiple CPUs.

You for sure might have such a hardware.

Thanks for extra info.,..No sure i do understand that, but lets say on single core the test will yield similar result to the article, meaning Sleep(0) is magnitude slower than Sleep(1) and SwitchToThread, then that OS should not be working at all ! and if worked then it will be slower than computer in 70s and 80s, i mean less than 10Mhz CPU.

-

Spent two hours trying to reproduce anything close to that mentioned article https://joeduffyblog.com/2006/08/22/priorityinduced-starvation-why-sleep1-is-better-than-sleep0-and-the-windows-balance-set-manager/

I read that article years ago, many years, yet i couldn't reproduce anything even small hint if that is the case with Sleep(0) vs Sleep(1) vs SwitchToThread,

So i just wrote two tests, one is mimicking the starvation pointed and presented in the article producer/consumer, and the other just how efficient these three method.

Also to be clear, i am not saying the article is misleading or wrong, but it is most likely testing different thing completely (namely the efficiency of ThreadPool in C# in 2006), or the test is happening is single core CPU belongs to past and gone era, know this Sleep(1) by definition can't perform less than OS timer granularity which is by default 1000/64 seconds almost 15-16 ms, and in best case scenario it will be 1 ms, this is guaranteed by the OS, so the article result is irrelevant today.

First test

program ThreadIterationTest; {$APPTYPE CONSOLE} uses System.SysUtils, System.Classes, Winapi.Windows; {$WARN SYMBOL_PLATFORM OFF} type TSwitchMethod = (smSleep0, smSleep1, smSwitchToThread); TCounterThread = class(TThread) private FSwitchMethod: TSwitchMethod; FIterationCount: Int64; FDuration: Integer; procedure Execute; override; public constructor Create(SwitchMethod: TSwitchMethod; Duration: Integer; Priority: TThreadPriority); property IterationCount: Int64 read FIterationCount; property SwitchMethod: TSwitchMethod read FSwitchMethod; end; constructor TCounterThread.Create(SwitchMethod: TSwitchMethod; Duration: Integer; Priority: TThreadPriority); begin inherited Create(True); // Create suspended FSwitchMethod := SwitchMethod; FDuration := Duration; FIterationCount := 0; Self.Priority := Priority; FreeOnTerminate := True; end; procedure TCounterThread.Execute; var StartTick, Duration: Cardinal; begin Duration := FDuration * 1000; StartTick := GetTickCount; while (GetTickCount - StartTick) < Duration do begin Inc(FIterationCount); case FSwitchMethod of {(*} smSleep0: Sleep(0); smSleep1: Sleep(1); smSwitchToThread: SwitchToThread; {*)} end; end; end; function ThPriorityToString(ThProirity: TThreadPriority): string; var P: Integer; begin case ThProirity of {(*} tpIdle: Result:= 'Idle'; tpLowest: Result:= 'Lowest'; tpLower: Result:= 'Lower'; tpNormal: Result:= 'Normal'; tpHigher: Result:= 'Higher'; tpHighest: Result:= 'Highest'; tpTimeCritical: Result:= 'TimeCritical'; {*)} else Result := 'Unknown'; end; Result := Result + '('; case ThProirity of {(*} tpIdle: P:= THREAD_PRIORITY_IDLE; tpLowest: P:= THREAD_PRIORITY_LOWEST; tpLower: P:= THREAD_PRIORITY_BELOW_NORMAL; tpNormal: P:= THREAD_PRIORITY_NORMAL; tpHigher: P:= THREAD_PRIORITY_ABOVE_NORMAL; tpHighest: P:= THREAD_PRIORITY_HIGHEST; tpTimeCritical: P:= THREAD_PRIORITY_TIME_CRITICAL; {*)} else P := 999; end; Result := Result + IntToStr(P) + ')'; end; procedure RunTest(Duration: Integer; Priority1, Priority2, Priority3: TThreadPriority); var Thread1, Thread2, Thread3: TCounterThread; begin Writeln('Starting test with duration: ', Duration, ' seconds'); Writeln('Thread priorities: Sleep(0)=', ThPriorityToString(Priority1), ', Sleep(1)=', ThPriorityToString(Priority2), ', SwitchToThread=', ThPriorityToString(Priority3)); Thread1 := TCounterThread.Create(smSleep0, Duration, Priority1); Thread2 := TCounterThread.Create(smSleep1, Duration, Priority2); Thread3 := TCounterThread.Create(smSwitchToThread, Duration, Priority3); Thread1.Start; Thread2.Start; Thread3.Start; WaitForSingleObject(Thread1.Handle, INFINITE); WaitForSingleObject(Thread2.Handle, INFINITE); WaitForSingleObject(Thread3.Handle, INFINITE); Writeln('Results:'); Writeln('Sleep(0) iterations: ', Thread1.IterationCount); Writeln('Sleep(1) iterations: ', Thread2.IterationCount); Writeln('SwitchToThread iterations: ', Thread3.IterationCount); Writeln; end; begin try Writeln('Test 1: All threads with normal priority'); RunTest(3, tpNormal, tpNormal, tpNormal); Writeln('Test 1.1: All threads with normal priority'); RunTest(1, tpNormal, tpNormal, tpNormal); Writeln('Test 1.5: All threads with normal priority'); RunTest(5, tpNormal, tpNormal, tpNormal); Writeln('Test 2: Different priorities'); RunTest(5, tpHigher, tpNormal, tpLower); Writeln('Test 3: Different priorities'); RunTest(5, tpLowest, tpHighest, tpNormal); Writeln('Test 4: Different priorities'); RunTest(5, tpLowest, tpLowest, tpLowest); Writeln('Done.'); Readln; except on E: Exception do Writeln(E.ClassName, ': ', E.Message); end; end.

Its result on my device

Test 1: All threads with normal priority Starting test with duration: 3 seconds Thread priorities: Sleep(0)=Normal(0), Sleep(1)=Normal(0), SwitchToThread=Normal(0) Results: Sleep(0) iterations: 15700875 Sleep(1) iterations: 175 SwitchToThread iterations: 19869985 Test 1.1: All threads with normal priority Starting test with duration: 1 seconds Thread priorities: Sleep(0)=Normal(0), Sleep(1)=Normal(0), SwitchToThread=Normal(0) Results: Sleep(0) iterations: 5266693 Sleep(1) iterations: 60 SwitchToThread iterations: 6658333 Test 1.5: All threads with normal priority Starting test with duration: 5 seconds Thread priorities: Sleep(0)=Normal(0), Sleep(1)=Normal(0), SwitchToThread=Normal(0) Results: Sleep(0) iterations: 26351894 Sleep(1) iterations: 269 SwitchToThread iterations: 33344803 Test 2: Different priorities Starting test with duration: 5 seconds Thread priorities: Sleep(0)=Higher(1), Sleep(1)=Normal(0), SwitchToThread=Lower(-1) Results: Sleep(0) iterations: 26332342 Sleep(1) iterations: 299 SwitchToThread iterations: 33324362 Test 3: Different priorities Starting test with duration: 5 seconds Thread priorities: Sleep(0)=Lowest(-2), Sleep(1)=Highest(2), SwitchToThread=Normal(0) Results: Sleep(0) iterations: 26220753 Sleep(1) iterations: 299 SwitchToThread iterations: 33216074 Test 4: Different priorities Starting test with duration: 5 seconds Thread priorities: Sleep(0)=Lowest(-2), Sleep(1)=Lowest(-2), SwitchToThread=Lowest(-2) Results: Sleep(0) iterations: 26350390 Sleep(1) iterations: 291 SwitchToThread iterations: 33374685 Done.Sleep(1) is in the expected range of 60-64 per second

Now different test to emulate the article example, not using Delphi RTL thread pool and anonymous thread, because i never trust them on my XE8.

program ThreadStarvationTest; {$APPTYPE CONSOLE} uses System.SysUtils, System.Classes, Winapi.Windows; {$WARN SYMBOL_PLATFORM OFF} type TYieldMethod = (ymSleep0, ymSleep1, ymSwitchToThread); TStarvationThread = class(TThread) private FYieldMethod: TYieldMethod; FIsProducer: Boolean; FDuration: Cardinal; procedure Execute; override; public constructor Create(YieldMethod: TYieldMethod; IsProducer: Boolean; Priority: TThreadPriority); property Duration: Cardinal read FDuration; end; var x: Integer = 0; constructor TStarvationThread.Create(YieldMethod: TYieldMethod; IsProducer: Boolean; Priority: TThreadPriority); begin inherited Create(True); FYieldMethod := YieldMethod; FIsProducer := IsProducer; Self.Priority := Priority; //FreeOnTerminate := True; // don't care, irrelevant FDuration := 0; end; procedure TStarvationThread.Execute; var StartTick: Cardinal; begin // run threads on one core, core 0 SetThreadAffinityMask(GetCurrentThread, 1); if FIsProducer then begin //Sleep(50); // Sleep(500); // Sleep(1500); x := 1; // Producer sets x end else begin StartTick := GetTickCount; while x = 0 do begin case FYieldMethod of ymSleep0: Sleep(0); ymSleep1: Sleep(1); ymSwitchToThread: SwitchToThread; end; end; FDuration := GetTickCount - StartTick; end; end; function ThPriorityToString(ThPriority: TThreadPriority): string; var P: Integer; begin case ThPriority of {(*} tpIdle: Result := 'Idle'; tpLowest: Result := 'Lowest'; tpLower: Result := 'Lower'; tpNormal: Result := 'Normal'; tpHigher: Result := 'Higher'; tpHighest: Result := 'Highest'; tpTimeCritical: Result := 'TimeCritical'; {*)} else Result := 'Unknown'; end; Result := Result + '('; case ThPriority of {(*} tpIdle: P := THREAD_PRIORITY_IDLE; tpLowest: P := THREAD_PRIORITY_LOWEST; tpLower: P := THREAD_PRIORITY_BELOW_NORMAL; tpNormal: P := THREAD_PRIORITY_NORMAL; tpHigher: P := THREAD_PRIORITY_ABOVE_NORMAL; tpHighest: P := THREAD_PRIORITY_HIGHEST; tpTimeCritical: P := THREAD_PRIORITY_TIME_CRITICAL; {*)} else P := 999; end; Result := Result + IntToStr(P) + ')'; end; function YieldMethodToStr(YieldMethod:TYieldMethod):string; begin case YieldMethod of {(*} ymSleep0: Result := 'Sleep(0)'; ymSleep1: Result := 'Sleep(1)'; ymSwitchToThread: Result := 'SwitchToThread'; {*)} end; end; procedure RunStarvationTest(YieldMethod: TYieldMethod; ConsumerPriority, ProducerPriority: TThreadPriority); var Consumer, Producer: TStarvationThread; begin Writeln('Starting starvation test with ', YieldMethodToStr(YieldMethod), ', Consumer=', ThPriorityToString(ConsumerPriority), ', Producer=', ThPriorityToString(ProducerPriority)); x := 0; Consumer := TStarvationThread.Create(YieldMethod, False, ConsumerPriority); Producer := TStarvationThread.Create(YieldMethod, True, ProducerPriority); Consumer.Start; Producer.Start; Consumer.WaitFor; Producer.WaitFor; Writeln('Result: ', YieldMethodToStr(YieldMethod), ' time: ', Consumer.Duration, ' ms'); Writeln; end; begin try // Test Sleep(0) with equal priorities RunStarvationTest(ymSleep0, tpNormal, tpNormal); // Test Sleep(0) with different priorities RunStarvationTest(ymSleep0, tpNormal, tpLower); // Test Sleep(0) with different priorities RunStarvationTest(ymSleep0, tpLower, tpNormal); // Test Sleep(1) with equal priorities RunStarvationTest(ymSleep1, tpNormal, tpNormal); // Test Sleep(1) with different priorities RunStarvationTest(ymSleep1, tpNormal, tpLower); // Test Sleep(1) with different priorities RunStarvationTest(ymSleep1, tpLower, tpNormal); // Test SwitchToThread with equal priorities RunStarvationTest(ymSwitchToThread, tpNormal, tpNormal); // Test SwitchToThread with different priorities RunStarvationTest(ymSwitchToThread, tpNormal, tpLower); // Test SwitchToThread with different priorities RunStarvationTest(ymSwitchToThread, tpLower, tpNormal); Writeln('Done.'); Readln; except on E: Exception do Writeln(E.ClassName, ': ', E.Message); end; end.

Its result with and without delaying the producer by uncommenting the Sleep(50).. no starvation observed at all and the result is consistent with the delay introduced being Sleep(50), Sleep(500) or Sleep(1500)

Starting starvation test with Sleep(0), Consumer=Normal(0), Producer=Normal(0) Result: Sleep(0) time: 0 ms Starting starvation test with Sleep(0), Consumer=Normal(0), Producer=Lower(-1) Result: Sleep(0) time: 0 ms Starting starvation test with Sleep(0), Consumer=Lower(-1), Producer=Normal(0) Result: Sleep(0) time: 0 ms Starting starvation test with Sleep(1), Consumer=Normal(0), Producer=Normal(0) Result: Sleep(1) time: 0 ms Starting starvation test with Sleep(1), Consumer=Normal(0), Producer=Lower(-1) Result: Sleep(1) time: 15 ms Starting starvation test with Sleep(1), Consumer=Lower(-1), Producer=Normal(0) Result: Sleep(1) time: 0 ms Starting starvation test with SwitchToThread, Consumer=Normal(0), Producer=Normal(0) Result: SwitchToThread time: 0 ms Starting starvation test with SwitchToThread, Consumer=Normal(0), Producer=Lower(-1) Result: SwitchToThread time: 0 ms Starting starvation test with SwitchToThread, Consumer=Lower(-1), Producer=Normal(0) Result: SwitchToThread time: 0 ms Done.4 hours ago, Tommi Prami said:No silver bullets...

True, there is no silver bullet, but Sleep(1) is better for completely different reason and it is impossible to be less than 1ms delay, unless with my vivid imagination your PC has no other threads running, like the OS has nothing else to do, so it will be faced with either

1) put the CPU/core to sleep, i mean really sleep signal and reduce the power for the core.

2) ignore the delay continue, even in this case, the article result can't be reproduce because we have two threads not one, hence the producer will execute releasing the consumer.

ps: these tests are for specific measurement, and i know they are not very helpful in real life, but they are accurate in the delays and shows how SwitchToThread is faster then Sleep(0), due the reduced context switch with threads from different processes, as for mixing the priorities, well this is way different subject and longer, but even so as tests shows it is irrelevant with Windows 10 and modern CPU, my CPU is Sandy Bridge so around 15 years old.

I would love to see result form modern and different CPUs, just for general information, also from different Windows versions, that would be nice, the discussion of how much relevant the test in real life doesn't concern me, as i am trying to replicate that article strange result.

-

6 hours ago, alogrep said:Anybody has any suggestion?

Sure, if Task Manager is causing freeze then

1) the system is broken and faulty and missing components,

2) it could be that or your devices is infected with malicious software, or

3) have you installed 17 Antivirus ? then uninstalled some or all of them ? this symptoms could be coming from rouge left over filter drivers,

Fix your Windows, Delphi and Embarcadero (in this case) has nothing to do with your problem.

-

Dear Angus try the RAW approach for fun, and see how those can be f*** brutal.

-

34 minutes ago, Angus Robertson said:I'd mess with the connection of hackers, after detection, but this requires resources I don't want to waste,

Right, but doesn't really cost your server that much resource ?

To put it in different way, without too much explanation of the last one i point to in (D) , see, raw packets are always useless with TCP as any switch/router will drop them as they don't belong to any steam, namely established TCP stream, but and here big bug the connection is there and established, the stream is legit, and your packet will reach its destination, can cause resource depletion on their part as you closed ( terminate abruptly) the connection after sending that packet or similar ones, even you keep yours open and repeat this behavior it becomes more like contest of whos bone is tougher, your server with usual resources, their part with havoc and unpredictable behavior to manage.

-

25 minutes ago, Renate Schaaf said:1 hour ago, Kas Ob. said:2) the duration at 1000 and i am not talking about the timestamp but the relevancy of nominator and video frames is 1000, i tried to tweak things and it didn't change, even used the recommended 10m instead of 1m you are using, still didn't change, so this also might be like above a setting or a constrained bit/frame/packet limitation specific to this very codec, one test video is 60gps with 200 duration, the output is 1000 at 30fps, while it should be 400.

You lost me here. What 10m, and what's gps?

Honestly i lost my self reading that, fps not gps, (stupid auto correct and clumsy fingers), and 10m=10000000 vs 1m =1000000, as dominator for the rate at setup.

-

1

1

-

-

@Angus Robertson I know a few tricks, funny ones and one might say genius while laughing at them, used some and still using in production, but they are not for public or posting in a forum,

so i am writing in private to you, you can do them as they are very simple, they are just like step back a little and look at the big picture.

-

13 hours ago, Renate Schaaf said:I think I solved the audio-syncing ... kind of.

In 10 tests i did, it is synced and difference is at the beginning is 4 ms and in the middle 4ms and at the end still 4ms, that is very accurate considering the acceptable desyncing between audio and video is constrained and small;

https://video.stackexchange.com/questions/25064/by-how-much-can-video-and-audio-be-out-of-sync

What is still perplexing me is;

1) why the frames are grouped, so i added something like this "OutputDebugString(PChar('Audio: '+IntToStr(AudioSampleDuration)));" before SafeRelease, same for video, the debug output is clearly showing an interleaved frames one by one ! beautiful interleaving, yet the result video frames are grouped, so it might be something has to do with WMF and its codec or missing some some settings somewhere, in other words you code is doing it right.

2) the duration at 1000 and i am not talking about the timestamp but the relevancy of nominator and video frames is 1000, i tried to tweak things and it didn't change, even used the recommended 10m instead of 1m you are using, still didn't change, so this also might be like above a setting or a constrained bit/frame/packet limitation specific to this very codec, one test video is 60gps with 200 duration, the output is 1000 at 30fps, while it should be 400.

14 hours ago, Renate Schaaf said:This causes a gap in the audio-stream and a phase-shift in the timing. I have a notorious video where you can actually hear these gaps after re-encoding. If I transform the audio to .wav first, the gaps are gone. One could try to safekeep the thrown-away bytes and pad them to the beginning of the next sample, fixing up the time-stamps... Is that what you were suggesting, @Kas Ob.? Well, I don't think i could do it anyway :).

Yes in some way, see if there is gap then the audio is distorted and the final video is bad or low quality, so yes decoding the audio into PCM from some exotic audio format, then use more standard audio codec from WMF will be the best thing to keep the quality.

Anyway, here a nice answer on SO leading to very beautiful SDK, you might find it very useful

https://stackoverflow.com/questions/41326231/network-media-sink-in-microsoft-media-foundation

Now, why i keep looking at this drifting in audio and video you might ask,

the answer is long time ago i wanted to know how those media players could read from slow HDD huge chunks of data and decode them then render them, everything is irrelevant here except one behavior you can watch, they like WMP and VLC do strange thing, they read the header of the video, then load huge buffers form the beginning then seek to the end of that file then again load huge chunk, from the end they try to see how much the streams drifted, only after that they play, those players saw it all, so they do tricks of resyncing at there own, when the video/audio stream are desynced and it is possible then adjust and cover it (fix it)

Why is this is relevant here if all modern and used players doing this and fix things, because this will fail when you stream that video there is no way to seek to the end, so the player will play what he get, being WebRTC, RTMP, RTSP...

Think video conference or WebCam or even security cams being received by server that will encoded and save the videos while allowing the user to monitor one or more cam online, audio and video syncing is important here, and players tricks will not help.

Anyway, nice and thank you, you did really nice job.

-

1

1

-

-

2 minutes ago, Vincent Parrett said:Interesting, similar to my old library (except that I handle stderr) - I will have to study it further as I have been considering using job objects since seeing a talk on them at DelphiSummit (I have looked at them in the past but never implemented them).

This version doesn't, i have others, this one i use to capture debug from different tools, parse the output then run command command, it is bidirectional, i use it for building applications instead of batch files, so it should be able to handle InnoSetup and WinLicense ..etc

-

Mine showed difference in timing with when used 16kb.

-

1 minute ago, Vincent Parrett said:@Kas Ob. not sure what hardware he is using but on my 5900X ssd system I am seeing 16-17s for both his library and mine.

I just sent you my unit.

-

@pyscripter i don't have your hardware or anything even close to it, so when you show a command like "dir C:\Windows /s" takes less than 20 seconds, well this amazing,

Can you confirm if both libraries on the same reading buffer length, your i think yours at 16kb by default, while mine is left fixed by a constant at 4kb, could that have such huge difference ? in theory it might as "dir C:\Windows /s" do takes minutes on my machine, meaning the output is huge and again the flush operations could causing this difference.

-

Here a suggestion and highly important;

Make sure all call backs from the system 100% safe against exception, you can't raise an exception or allow the RTL to raise one, make sure to encapsulate the code with try..except and no re-raise.

-

14 minutes ago, pyscripter said:Although, it does not look like it, the alertable wait does the same.

I very well familiar with the alertable wait and APC, i simply have different point view of them, they are fast and nice but also dangerous namely where there is a chance for an exception to raise,

See, Delphi RTL can raise exception in so many places starting form the Memory Manager to most functionality, one exception raise and things can go very wrong, and most dangerously if any parameter passed or utilized where stack allocated.

Here a very nice article about something very similar, and remember this happen with Unity and that code was shipped with millions if not billions within applications and games running by end users;

-

32 minutes ago, pyscripter said:But, I do not see any reason your code is faster or otherwise better than the one used in pascal-process.

I didn't say mine faster, all what i pointed to is centralizing the 3 important handles in one loop, one thread, short and direct code, and that is it, although it is very strange it is performing slower, as there is no locking at all.

Anyway, glad you had the look and thank you !

-

Well the forum is not allowing me to send more text in private, but here the one last important thing to use when " FProcess.SyncEvents := True;"

Hope you are OK with suggestion of such style !

-

49 minutes ago, pyscripter said:You are welcome to create PRs, open issues, make suggestions etc.

In case i don't remember my Github password and don't use it ?!

Well, if you are OK with suggestion here then please take what you see fit, if not just ignore.

Thoughts on the implementation;

1) Overlapped is well, we all know, but they do exist to provide specific usage, they allow converting simple IO operation from right-now synchronous or asynchronous to something fire now and poll later, if you need to block and wait on one operation then you lost the need for using overlapped in the first place, as example you can issue Read over socket or file, either synchronous or asynchronous, but with overlapped you can issue 10 or 100 read file then poll the status later, or even block on 64 of them with one thread and get notified when such slow operation finished, also one great advantage is you can unify multiple and different operation with one thread, now to the point, if you are using overlapped IO operation then block with WaitForSingleObject or WaitForSingleObjectEx then you are missing the advantage of overlapped operation, you could use block read and it will behave the same,

suggestion;

Don't use ReadCompletionRoutine, not here, it is overkill.

Issue ReadFile once not any more the whole unit could have one read inside a loop, read then use WaitForMultipleObjects or WaitForMultipleObjectsEx if you wish, because here we can monitor not just the read operation but an event to signal thread exit and the handle if the child process, all in one loop simple, short, efficient and straight to the point.

2) Terminating the child and its spawns, TerminateProcessTree will do most of the time unless one of the child had tweaked its security and privilege's, now it will stay there, there is better solution than terminating the child tree or sending signals, it is https://learn.microsoft.com/en-us/windows/win32/procthread/job-objects

Using Job objects is way easy than it look, and because i have many versions of using Pipes named or unnamed, i picked one and DM you, will not paste it here, it is vetted and used but not in your thread and your code, it is for you look and cherry pick what you like form it.

ps;

I use this command to measure time for console command

PipeIPC.SendData('cmd /v:on /c "set start=!time! && dir j:\android /s && set end=!time! && echo Start time: !start! && echo End time: !end!"'#13#10);

the unit i sent is fully bidirectional, and this command will show the time that a command like "dir j:\android /s" takes, one problem here is for this to work we need to spawn another console with /v:on as i couldn't make "setlocal enabledelayedexpansion" work, anyway , that command will spawn another console and execute the dir command, and what is interesting is that the time reported is different from the real console ran from Windows Explorer, your TProcess and my unit execute that command

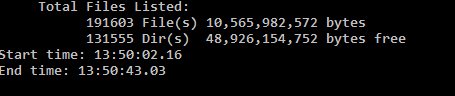

From standalone console the result of that dir on my fastest drive j:

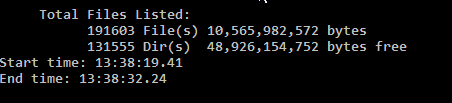

and using my unit which was almost the same as yours, (your last changes refuse to compile as AttachConsole is not declared in older Delphinos)

And that is due the flush the console that executing the command had to flush, Delphi console application generally are slower due the excessive flush, but in this case and because the child is sending so many data, Delphi is flushing once per 4k, and that is really nice effect.

-

@pyscripter Hi,

Are you up for suggestions ? even if they are not so small touching the core approach with overlapped operation (on Windows) ?

-

Debugging and tracking the OnChecked event, the behavior has nothing to do with Delphi and its VCL, it is triggered by Windows message, so you have to use a workaround about that, something like what Remy suggested.

-

1

1

-

![Delphi-PRAXiS [en]](https://en.delphipraxis.net/uploads/monthly_2018_12/logo.png.be76d93fcd709295cb24de51900e5888.png)

TSslCertTools for generate CSR

in ICS - Internet Component Suite

Posted

I highly recommend using OID instead of NID, they are documented, there is so many internet resources and DB populate them, and most important you can find the needed entry by its OID from any certificate or CSR.

In this page there is few lines on how to convert OID in its text formatted syntax into OBJ

https://docs.openssl.org/1.0.2/man3/OBJ_nid2obj/#examples

then use OBJ instead of NID, as there is the same equivalent APIs for each of them

Also important note here, "Set" might not be acting as "Add" and i can't find details about this, but if there is Add then it should be used, and not depending on Set

From translated code from C++, this code might work, i say might as i didn't test it, just translated it

Now as suggestions for Angus, it might be useful to add generic functions to this, this will be future proof, allowing any non essential entries or exotic objects to be added or enumerated, in other words allow customization in its purest way.

It could use NID, Text and OID, implement once and can be used whenever request like this pop then the solution is easy, find the OID (or text) for the entry then add it or read it using the custom entry access

givenName = 2.5.4.42 surname = 2.5.4.4https://oid-base.com/cgi-bin/display?oid=2.5.4.4&submit=Display&action=display

https://oid-base.com/cgi-bin/display?oid=2.5.4.42&submit=Display&action=display

Using NID is also nice but only if it is already Known and declared in OpenSSL Pascal headers, so it will be limited.