Renate Schaaf

-

Content Count

139 -

Joined

-

Last visited

-

Days Won

6

Posts posted by Renate Schaaf

-

-

I think I solved the audio-syncing ... kind of.

First observation: Audio and video are perfectly synced if the audio comes from a .wav-file. You can check this using the optimal frame-rates 46.875 or 31.25. So for optimal synching, compressed audio should be converted to .wav first. I have added a routine in uTransformer.pas which does this. In the demo there are some checkboxes to try this out.

Second observation: For compressed input the phase-shift in audio happens exactly at the boundaries of the IMFSamples read in. So this is what I think happens: The encoder doesn't like the buffer-size of these samples and throws away some bytes at the end.

This causes a gap in the audio-stream and a phase-shift in the timing. I have a notorious video where you can actually hear these gaps after re-encoding. If I transform the audio to .wav first, the gaps are gone. One could try to safekeep the thrown-away bytes and pad them to the beginning of the next sample, fixing up the time-stamps... Is that what you were suggesting, @Kas Ob.? Well, I don't think i could do it anyway :).

So right now, first transforming audio-input to .wav is the best I can come up with. For what I use this for it's fine, because I mix all the audio into one big .wav before encoding.

Renate

-

1

1

-

-

There is a new version at https://github.com/rmesch/Bitmaps2Video-for-Media-Foundation.

New stuff: Some rewrite of audio, making sure that gaps at the beginning of a stream are filled with silence. 2 optimized frame-rates for audio-synching, see below. Most importantly:

One can now run @Kas Ob.'s frame analysis from within the demo, if one enables the hidden tab "Analysis". I just made the lines a bit shorter, as the rest was just repeating the same values for all I tested, as far as I could see. The file ffprobe.exe needs to be in the same directory as DemoWMF.exe. ffprobe is part of ffmpeg-git-essentials.7z on https://www.gyan.dev/ffmpeg/builds/.

I spent a good amount of time trying to figure out what I can and what I cannot control about audio-synching, tracing into the relevant code and running the analysis. Results of audio-rethynching follow (beware, it's long):

The math is for audio-sample-rate of 48000 and the time units are all s.Audio-blockalign is always 4 Bytes for what I do.

There are at least 2 different meanings of "sample":

PCMSample: as in samples per second. ByteSize: Channels*BitsPerSample/8 = 2*16/8 = 4 Bytes. Time: 1/48000 s

IMFSample: Chunk of audio returned by IMFSourceReader.ReadSample. It contains a buffer holding a certain amount of uncompressed PCMsamples, and info like timestamp, duration, flags ...

The size of these samples varies a lot with the type of input. Some observed values:

.mp3-file 1:

Buffersize = 96 768 Bytes Duration = 0.504 (96768 bytes = 96768/4 PCMSamples = 96768/4/48000 s OK)

.mp3-file 2:

Buffersize = 35 108 Bytes Duration = 0.1828532 (35108/4/48000 = 0.182854166.. not OK)

.wmv-file:

Buffersize = 17 832 Bytes Duration = 0.092875 (17832/4/48000 = 0.092875 OK)Except for the first sample read, the values don't differ from sample to sample. Those are the samples I can write to the sinkwriter for encoding. Breaking them up seems like a bad idea. I have to trust MF to handle the writing correctly. The buffers seem to always be block-aligned. I've added some redundant variables in TBitmapEncoderWMF.WriteAudio so these values can be examined in the debugger.

A related quantity are audio-frames. Similarly to the video-stream the audio-stream of a compressed video consists of audio-frames. 1 audio-frame contains the compressed equivalent of 1024 PCMSamples. So:

AudioFrameDuration = 1024/48000 AudioFrameRate = 48000/1024

I can only control the writing of the video by feeding the IMFSamples of video and audio to the sinkwriter in good order. The samples I write to the sinkwriter are collected in a "Leaky-Bucket"-buffer. The encoder pulls out what it needs to write the next chunk of video. It hopefully waits until there are enough samples to write something meaningful. Problems arise if the bucket overflows. There need to be enough video- and audio-samples to correctly write both streams.

So here is the workflow, roughly (can be checked by stepping into TBitmapEncoderWMF.WriteOneFrame):

Check if the audio-time written so far is less than the timestamp of the next video-frame.

Yes: Pull audio-samples out of the sourcereader and write them to the sinkwriter until audio-time >= video-timestamp.

Looking at the durations above, one sample might already achieve this.

Write the next video-frame

RepeatIn the case of mp3-file 1 the reading and writing of 1 audio-sample would be followed by the writing of several video-samples.

The encoder now breaks the bucket-buffer up into frames, compresses them and writes them to file. It does that following its own rules, which I have no control over. Frame-analysis can show the result:

A group of video-frames is followed by a group of audio-frames, which should cover the same time-interval as the video-frames. In the output I have seen so far, the audio-frame-period is always 15 audio-frames. For video-framerate 30, the video-frame-period is 9 or 10 frames. Why doesn't it make the audio- and video-periods smaller? No idea. Guess that's the amount of info the players can handle nowadays, and these periods are a compromise between optimal phase-locking of audio- video- periods and the buffer-size the player can handle. Theoretically, at framerate 30, 16 video-frames should phase-lock with 25 audio-frames.

Here is one of those video-audio-groups. Video-framerate is 30.

video stream_index=0 key_frame=0 pts=39000 pts_time=1.300000 duration_time=0.033333

video stream_index=0 key_frame=0 pts=40000 pts_time=1.333333 duration_time=0.033333

video stream_index=0 key_frame=0 pts=41000 pts_time=1.366667 duration_time=0.033333

video stream_index=0 key_frame=0 pts=42000 pts_time=1.400000 duration_time=0.033333

video stream_index=0 key_frame=0 pts=43000 pts_time=1.433333 duration_time=0.033333

video stream_index=0 key_frame=0 pts=44000 pts_time=1.466667 duration_time=0.033333

video stream_index=0 key_frame=0 pts=45000 pts_time=1.500000 duration_time=0.033333

video stream_index=0 key_frame=0 pts=46000 pts_time=1.533333 duration_time=0.033333

video stream_index=0 key_frame=0 pts=47000 pts_time=1.566667 duration_time=0.033333

video stream_index=0 key_frame=0 pts=48000 pts_time=1.600000 duration_time=0.033333audio stream_index=1 key_frame=1 pts=62992 pts_time=1.312333 duration_time=0.021333

audio stream_index=1 key_frame=1 pts=64016 pts_time=1.333667 duration_time=0.021333

audio stream_index=1 key_frame=1 pts=65040 pts_time=1.355000 duration_time=0.021333

audio stream_index=1 key_frame=1 pts=66064 pts_time=1.376333 duration_time=0.021333

audio stream_index=1 key_frame=1 pts=67088 pts_time=1.397667 duration_time=0.021333

audio stream_index=1 key_frame=1 pts=68112 pts_time=1.419000 duration_time=0.021333

audio stream_index=1 key_frame=1 pts=69136 pts_time=1.440333 duration_time=0.021333

audio stream_index=1 key_frame=1 pts=70160 pts_time=1.461667 duration_time=0.021333

audio stream_index=1 key_frame=1 pts=71184 pts_time=1.483000 duration_time=0.021333

audio stream_index=1 key_frame=1 pts=72208 pts_time=1.504333 duration_time=0.021333

audio stream_index=1 key_frame=1 pts=73232 pts_time=1.525667 duration_time=0.021333

audio stream_index=1 key_frame=1 pts=74256 pts_time=1.547000 duration_time=0.021333

audio stream_index=1 key_frame=1 pts=75280 pts_time=1.568333 duration_time=0.021333

audio stream_index=1 key_frame=1 pts=76304 pts_time=1.589667 duration_time=0.021333

audio stream_index=1 key_frame=1 pts=77328 pts_time=1.611000 duration_time=0.021333pts stands for "presentation time stamp" and pts_time is of interest.

Video-time-intervall: from 1.300000 to 1.600000+0.033333=1.633333

Audio-time-intervall: from 1.312333 to 1.611000+0.021333=1.632333Audio is a bit ahead at the beginning and a tiny bit behind at the end. pts should be multiples of 1024, but they aren't hmm. The difference is still 1024, but they are phase-shifted. Phase-shift is 62992 mod 1024 = 528 (or -496).

The interval from a bit further ahead:

Video: From 8.066667 to 8.366667+0.033333=8.400000

Audio: From 8.053667 to 8.352333+0.021333=8.373666 pts-phase-shift: still 528 (-496)Audio is lagging behind.

To really see what is happening I will have to implement better statistics than just looking at things 🙂

One further test: I tried to phase-lock audio and video optimally:

VideoFrameRate: f. AudioFrameRate: 48000/1024, so f = 48000/1024 = 46,875. I've added this frame-rate to the demo.

Result: Perfect sync for the first audio-video group. In the middle of the second group the pts-phase-shift is again 528, and audio lags behind. For the rest of the groups the lag doesn't get bigger, it is always corrected to some degree. But the file should have identical audio and video timestamps in the first place!

There is another new frame-rate, which is the result of trying to phase-lock 2 video-frames to 3 audio-frames. 2/f = 3*1024/4800 results in f = 2*48000/3/1024 = 31.25

I will try to find out what causes the phase-shift in audio by parsing the ffprobe-output a bit more (sigh). Maybe generate a log-file for the samples written, too. (Sigh). No, so far it's still fun.

For those, who made it up to here: Thanks for your patience.

Renate

-

2

2

-

-

First of all, thanks. I'll be back as soon as I understand better what the analysis is showing, and then I might be able to do the "little code changes" you mention :). Just keep in mind, that the sinkwriter isn't giving you any control over dts, pts., or how video and audio are interleaved.

Renate

1 hour ago, Kas Ob. said:Anyway, that is all and good luck, it is your code and it is your call.

-

1

1

-

-

On 6/25/2025 at 1:02 PM, Anders Melander said:I would use:

raise Exception.CreateFmt('Fail in call no. %d of %s with result %x', [Count, ProcName, hr]);

for readability.

Hi, Anders,

CreateFmt uses internally

constructor Exception.CreateFmt(const Msg: string; const Args: array of const); begin FMessage := Format(Msg, Args); end;and help says that this version of Format isn't threadsafe, since it uses the locale for the decimal separator. Now I'm not using decimal-separators here, and I guess once the exception is raised in a thread thread-safety doesn't really matter anymore?

Another thing: Is %x.8 doing the same as IntToHex(hr,8)?

Renate

-

10 hours ago, Kas Ob. said:And to reiterate about how important this is, if you take long video, a fully correct and synced one, and split/extract the audio and video streams into files then used the same/similar code to generate mkv again the result will not be the same as the original and they will be desynced, (there is a chance that the result synced but it will be pure luck based on the parameter).

With a little change you can perform that test from within the demo, I think. Just put a little change into TBitmapEncodeWMF.AddVideo:

procedure TBitmapEncoderWMF.AddVideo( const VideoFile: string; TransitionTime: integer = 0; crop: boolean = false; stretch: boolean = false); var VT: TVideoTransformer; bm: TBitmap; TimeStamp, Duration, VideoStart: int64; begin if not fInitialized then exit; VT := TVideoTransformer.Create( VideoFile, fVideoHeight, fFrameRate); try bm := TBitmap.Create; try if not VT.NextValidSampleToBitmap(bm, TimeStamp, Duration) then exit; if TransitionTime > 0 then CrossFadeTo( bm, TransitionTime, crop, stretch); VideoStart := fWriteStart; // fill gap at beginning of video stream if TimeStamp > 0 then AddStillImage( bm, Trunc(TimeStamp / 10000), crop, stretch); while (not VT.EndOfFile) and fInitialized do begin BitmapToRGBA( bm, fBmRGBA, crop, stretch); bmRGBAToSampleBuffer(fBmRGBA); // !!!!! Change is here for extra hard sync-check: // WriteOneFrame( // VideoStart + TimeStamp, // Duration); // Write the decoded video stream in exactly the same way as AddFrame would. // I.e. with the same timestamps, not taking any timestamps from the // video-input WriteOneFrame( fWriteStart, fSampleDuration); if not VT.NextValidSampleToBitmap(bm, TimeStamp, Duration) then Break; end; // FrameCount*FrameTime > Video-end? (shouldn't differ by much) // if fWriteStart > VideoStart + TimeStamp + Duration then // Freeze((fWriteStart - VideoStart - TimeStamp - Duration) div 10000); finally bm.Free; end; finally VT.Free; end; end;Then transcode a movie on the Demo-Tab "Use TBitmapEncoderWMF as a transcoder". It uses the procedure TranscodeVideoFile, treating the video- and audiostream of an input-video as totally independent inputs. AddVideo decodes the video-stream into a stream of bitmaps, and the input-video is used again as audiofile. I encoded 40 minutes of "Fellowship of the Ring" this way, and did not see any desynching. You'll probably say that's no proof, and you'd be right, but it might be an indication that the problem isn't as severe.

Or the video player is just very good at making something usable out of the input.

-

On 6/25/2025 at 10:47 AM, Kas Ob. said:2) https://github.com/rmesch/Bitmaps2Video-for-Media-Foundation/blob/main/Source/uBitmaps2VideoWMF.pas#L1685 doesn't check, fail or warn about audio failure

I don't think that's quite true, if it fails the rest of WriteOneFrame isn't executed and in Line 1713 an exception is raised with errorcode hr. I could translate it into an EAudioFormatException, though, at the spot you indicate.

On 6/25/2025 at 10:47 AM, Kas Ob. said:The commented "if fAudioTime >= fAudioDuration then" is right and should be used

It was meant as an extra safety check, since the code already checks for EndOfStream, and that hasn't failed so far. But I've put it back in.

-

1

1

-

-

1 hour ago, Kas Ob. said:message popped up with "Calculated image time: 7049 ms" this is strange as the audio file is reported 9 seconds by my MPC-HC player

presentation time = image time + effect time (2000).

-

Thanks everybody. Now I have a lot to think about, a great chance to expand my horizon at the age of 74:). I'll fix the code. But then I need a bit of time to think. The info is great.

1 minute ago, Kas Ob. said:And how it didn't raise AV on Renate debugger, that is strange thing.

Because my poor debugger didn't run the code, because I didn't tell it to do so. I pasted that compatibility code in without checking, probably missed another piece. Mistake I won't do again. So I need to disable LogicalCompare for more compiler versions, or write a header for StrCmpLogicalW.

-

1

1

-

-

5 hours ago, Kas Ob. said:So even the video duration should be multiple of audio sample duration, consider this if you need your encoding %100 synced or the best it can be, in other word correct the video duration too, while audio sample rate is high number you have more flexible fps per second, the difference could be between 40 and 40.00002, yet if your video is 40.00002 i never saw i player show it as 40.00002 but always 40, this difference will prevent desyncing.

Hope that was clear and thank you again for the work on this and for the offering,

If I understand this right, I should match video-timestamp and duration to the closest blockalign-boundary of audio? If the difference in frame rate is really that negligable that should be doable, if I can get the math right :). Talk about not contributing, you just forced a new way of seeing things down my throat, not a small achievement.

-

Hi Anders,

Thanks for that. I hate the format-strings, because I can never remember the code for the place-holders. I had already thought before, that I should get used to them, though. Now I also see, that I forgot to use IntToHex(hr,8) 🙂

-

Hi Kas,

Good to see you again, and sorry for the long time of inactivity on my part. Thank you for the detailed input, which I need to digest first. Since you already invested so much thought, wouldn't you like to be a contributor? When I incorporate the changes you mention, I wouldn't even know how to list you as contributor. The issues you mention definitely need to be looked into. For the audio-part I was just glad it worked, and haven't put much thought into it lately. The wrong audio-duration was returned by some .vobs, which aren't really supported in the first place. The missing SafeRelease(pAudioSample) has caused memory leaks for me in a totally different context too, when I tried to write some code which simply plays an audio file through the default-device.

Renate

-

1

1

-

-

I've just uploaded an update to my project

https://github.com/rmesch/Bitmaps2Video-for-Media-Foundation

What it does:

Contains a VCL-class which encodes a series of bitmaps and video-clips together with an audio-file to video.The result is an .mp4-file with H264 or H265 compression together with AAC-audio.

It uses windows mediafoundation, which is usually contained in windows. Hardware-encoding is supported, if your graphics-card can do it.

Requires:

Headers for mediafoundation from FactoryXCode: https://github.com/FactoryXCode/MfPack

Windows 10 or higher

Encoder (MF-Transform) for H264/H265, usually come with the graphics-driver

Delphi XE7 or higher, if I haven't messed it up again, I've only got the CE and Delphi2006

(Win32 and Win64 should be working, but Win64 recently crashes for me with "The session was disconnected".)The demo-project shows some uses:

Record a series of canvas-drawings to video

Make a slideshow from image-files (.bmp,.jpg,.png,.gif) with music (.wav, .mp3, .wmv, ...) and 2 kinds of transitions

Insert a videoclip into a slideshow (anything that windows can decode should work)

Transcode a video-file including the first audio-stream.Improvements:

I think I now better understand how to feed frames to the encoder. With the right settings it makes stutter-free videos with good audio-video-synchronization. It's now usable for me in my "big" project, and I no longer need to rely on ffmpeg - dlls.More info in changes.txt.

Just try it, if you're interested, I'd be glad.

Renate

-

2

2

-

1

1

-

-

I have update the repo on GitHub

https://github.com/rmesch/Parallel-Bitmap-Resampler

Changes made to the "modern" VCL- and FMX-version in the folder BitmapScaling:

New resampling filters: Mitchell, Robidoux, RobidouxSharp, RobidouxSoft.

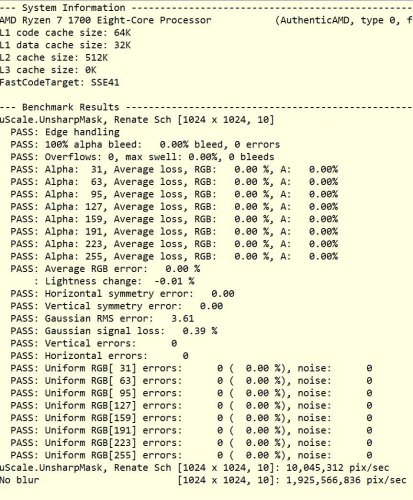

Simplified and corrected MakeGaussContributors in uScaleCommon.pas.@Anders Melander: It will pass the uniform color tests now. But it will fail the Gauss-RMS, since I changed to RadiusToSigma back.

Tried to make Gamma-correction a little more precise.On 10/18/2023 at 7:27 PM, Kas Ob. said:please don't waste your time on compatibility now

I tried nonetheless. You already spent so much time digging through that ancient attachment, give the repo a shot. I also added the option in DemoScale.dpr to use a test-bitmap similar to yours. I can't see any of the color-artefacts you describe, though.

-

30 minutes ago, prodeldpen said:I suppose there are effects of "round"ing that lead to this uncertainty.

Right. You want to add 1 frame of your animation at a time, but you use bme.addStillImage, which is meant for adding the same image for multiple frames. So it will only work (roughly) correctly if the ShowTime is much larger than the frame time of the movie.

Try to use bme.AddFrame instead.

36 minutes ago, prodeldpen said:customize the size of my movie to anything like 123 x 456

That just won't work, it's a codec limitation. You have to use at least even numbers, for some codecs the sizes might even have to be multiples of 4. I would stick to multiples of 4 to be on the safe side.

Another thing you might consider is to shorten the chain from animation to movie. To show the animation and make screenshots seems a bit roundabout to me, there must be a shorter way.

45 minutes ago, prodeldpen said:And eventually, is there an easy way to write meta data (author, etc.) to the file.

There must be, but I haven't yet bothered to look at it 🙂, maybe I will.

-

1

1

-

-

OK, Maple computed the following simplified filters, to implement them was just a matter of extending the TFilter-Enum. I'll update my repo some time tomorrow, the new filters need to be implemented in the demos. Right now I feel more like surviving a few more days on The Long Dark.

// The following filters are based on the Mitchell-Netravali filters with // restricting the parameters B and C to the "good" line B + 2*C = 1. // We have eliminated B this way and scaled the filter to [-1,1]. // See https://en.wikipedia.org/wiki/Mitchell%E2%80%93Netravali_filters const C_M = 1 / 3; // Mitchell filter used by ImageMagick function Mitchell(x: double): double; inline; begin x := abs(x); if x < 0.5 then Result := (8 + 32 * C_M) * x * x * x - (8 + 24 * C_M) * x * x + 4 / 3 + 4 / 3 * C_M else if x < 1 then Result := -(8 / 3 + 32 / 3 * C_M) * x * x * x + (8 + 24 * C_M) * x * x - (8 + 16 * C_M) * x + 8 / 3 + 8 / 3 * C_M else Result := 0; end; const C_R = 0.3109; // Robidoux filter function Robidoux(x: double): double; inline; begin x := abs(x); if x < 0.5 then Result := (8 + 32 * C_R) * x * x * x - (8 + 24 * C_R) * x * x + 4 / 3 + 4 / 3 * C_R else if x < 1 then Result := -(8 / 3 + 32 / 3 * C_R) * x * x * x + (8 + 24 * C_R) * x * x - (8 + 16 * C_R) * x + 8 / 3 + 8 / 3 * C_R else Result := 0; end;.... and so on. Just one function with different constants.

-

1

1

-

-

1 hour ago, Kas Ob. said:I browsed your code in "View file" in WinRar , i saw MakeContributors and AntiNLanczos and i confident you can just duplicate AntiNLanczos for all the above in the table with B and C or replace them with the calculated value like mine for faster calculation, while MakeContributors most likely doesn't need any change or a may be a little, i am sure you can solve this as it is way easier than how it sound and look.

I know! Just missed the B and C-values for the Robidoux in the table-image you post. And then I just have to rescale the functions to have support in [-1,1], make sure it's integral is 1. Bang. It plugs right in.

Wish I could edit my original post and delete the attachment, it's ancient now, and include a link to my GitHub-repo. The AntiNLanczos is a spline to approximate the antiderivative of Lanczos, all that stuff isn't needed anymore.

-

1

1

-

-

26 minutes ago, Kas Ob. said:but Min and Max are not inlined functions

They are in System.Math:

function Min(const A, B: Integer): Integer; overload; inline;I had coded it with ifs in a previous version, but I changed that after I noticed the inlining, looks a bit less stupid.

1 hour ago, Kas Ob. said:here are the raw formula

Oh, W is the weight you compute, and param is the x of the kernel. So you *did* post the kernel code, I was just too dense to see it. I think Maple and me can take it from there.

1 hour ago, Kas Ob. said:things for me fall in place when i looked at how imagemagick implemented

I tried to find something in their source code, but gave up. Looks like you had a bit more stamina :).

-

1

1

-

-

On 10/13/2023 at 12:15 PM, Kas Ob. said:And here are the parameters for Robidoux

What's the function these parameters need to be plugged into? All I can gather is that it might be some kind of cubic spline, and I don't feel like reading all of this guy's papers :). Would you mind posting the formula for the kernel?

-

MadExcept can check for frozen main thread, with or without debugger. Just surprised nobody mentioned it.

-

3

3

-

-

On 10/14/2023 at 3:42 PM, chmichael said:Anyone knows how to copy the Bits from a ID3D11Texture2D which it has different RowPitch than Bitmap.Width * 4 ?

It doesn't only depend to the pitch, but also on the pixel-format of the source. If that is BGR or BGRA, the following pseudo-code based on what you post

should be usable. If the color-order is different, like RGB or RGBA, you need to copy the single color-bytes. Best done by defining a record for the pixel.

// Pointers to Pixels in Source/Target to be copied var pPixS, pPixT: pByte; // Bytes per Pixel for the Source-Texture // would be 3 for BGR, 4 for BGRA // if the channels have a different order, like RGB, // then the single color bytes need to be copied. var BytesPerPixel: integer; // Common Width and Height of Source and Target var Width, Height: integer; for I := 0 to Height - 1 do begin pPixS := @FTexture.pData[FTexture.RowPitch * I]; pPixT := FBitmap.Scanline[I]; for j := 0 to Width - 1 do begin Move(pPixS^, pPixT^, BytesPerPixel); inc(pPixS, BytesPerPixel); inc(pPixT, 4); end; end;-

1

1

-

-

3 hours ago, Anders Melander said:I'm guessing your CPU is about twice as fast as mine

It's probably not. Your blur is slightly slower than mine for small radii. For large radii, yours is much faster. I'll mail you my benchmark-unit, then you can see for yourself.

-

1 hour ago, Kas Ob. said:To my past working on resampling and resizing up and down, i found that Mitchell and Robidoux filter is superior to Lanczos(3) in speed and quality specially in halo reduction and color defects.

Thanks very much for the input, I hadn't looked at those filters more closely before, should be easy to test them out.

18 hours ago, Anders Melander said:I'll include the test bench too.

Thank you!

Here is a first result for radius 10. It only passed the Gauss-RMS-test after I changed the sigma-to-radius-ratio to the same as yours. Need to give that a closer look.

For other radii my routine failed some of the uniform color tests, (and edge detection as a consequence,) so it's back to the drawing board for that.

-

1 hour ago, Alexander Sviridenkov said:delphihtmlcomponents.com/blur.zip

I managed to get it, source or not. For the same amount of "blurriness" my parallel version needs about 1.5 times the time of yours. Source would still be nice, I'm sure we'd learn something.

Renate

-

22 minutes ago, Anders Melander said:Premultiply, blur, unpremultiply.

I already did.

24 minutes ago, Anders Melander said:If you start by posting to your own repository I can do the work required to get it integrated into Graphics32.

I meanwhile learned how to do pull requests 🙂

Looking forward to the mailman!

Renate

![Delphi-PRAXiS [en]](https://en.delphipraxis.net/uploads/monthly_2018_12/logo.png.be76d93fcd709295cb24de51900e5888.png)

Bitmaps to Video for Mediafoundation

in I made this

Posted

I've been wondering about the same, maybe the setup isn't right. But I find it so hard to even figure out what settings you can specify.

You lost me here. What 10m, and what's gps?

Indeed, that looks interesting. Thanks, your links have already helped me a lot to understand better.

Renate