Posts posted by Dalija Prasnikar

-

-

51 minutes ago, corneliusdavid said: I don't put data into the code repository--nor are there API keys. Those are always kept totally separate and private. THAT is what I consider top secret.

I am not talking about what you put in your code repository and where and how you host it. I am talking about full AI integration with the IDE, where you may open security-sensitive code in the IDE which then might be sent to the AI and end up in training data without your knowledge. And you don't even have to open it. It may just be a part of your project, where the AI goes through all your project data to be able to give you relevant explanations and completions.

-

On 7/26/2024 at 3:06 AM, corneliusdavid said: Nothing would get automatically uploaded unless you hook it up and start using it. Just like Delphi has integration for git but you don't have to use git inside the IDE unless you tell it to and give it your account information.

Am I missing something?

Once something is integrated, there is always a possibility of bugs. You have a setting that says you are not allowing some feature, but the bug creeps in and the feature ends up enabled behind your back.

Another problem is that with AI, companies have an incentive to make "bugs" and use anything they can get their hands on for training. Your data is the product. So while you may be fine with some parts of your code being used for training, there will be parts you will want to keep secret for security reasons (if nothing else, various API keys and similar). But if you have AI integrated in the IDE, you can never be sure which parts of your code will be used for training and which will not.

For some people and companies the security concern is real, and even a slight possibility that some parts of their code or other information can leak through AI can be a huge risk.

-

You still need libcurl.dll if you want to use TCurlHTTPClient. It is a wrapper class for curl, but curl itself is in the DLL. TCurlHTTPClient is a THTTPClient descendant, so you can easily switch between different HTTP implementations without changing other code.

-

43 minutes ago, Tommi Prami said: I bet this was the case way back at least, that exception in constructor was leading to memory leaks. Might be still wrong though.

Raising an exception in a constructor never leads to memory leaks by itself. Leaks happen only if the code in the destructor is bad and is not able to clean up partially initialized object instances. Such situations may look as if raising the exception in the constructor is at fault, while it is actually the destructor that needs to be fixed.

-

32 minutes ago, dummzeuch said: I wasn't talking about calling free for an object, but what you were describing next:

Sorry, I misunderstood then.

-

8 minutes ago, Kas Ob. said: Yes, if an exception is raised in the constructor then the class itself is nil and will not leak memory, as pointed out by @Dalija Prasnikar, but if it has already allocated/created stuff then this stuff will leak, so instead of depending on extra work to ensure none of the "stuff" has leaked, just use best practice.

Sorry, but @Der schöne Günther is correct. If an exception is raised in the constructor, then the destructor will be called to perform cleanup on already allocated stuff. Unless the destructor is broken (badly written), there will be no memory leaks. That is why the destructor needs to be able to work properly on partially constructed object instances, without raising any additional exceptions. Exceptions raised within the destructor will cause irreparable memory leaks.

Best practice is that you can do whatever you want in the constructor, in any order you wish (provided that you don't access things that are not yet created), and the destructor must never raise an exception. Again, you can also call the inherited destructor in any order if you need to do that for some reason.
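To make the above concrete, here is a minimal sketch of a constructor that raises after allocating only some of its fields. The class TWorker and its fields are hypothetical names made up for this illustration. The destructor relies only on Free being safe on nil references, so it copes with the partially constructed instance.

```delphi
program PartialConstruction;

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.Classes;

type
  // Hypothetical class, used only to illustrate the point
  TWorker = class
  private
    FNames: TStringList;
    FBuffer: TMemoryStream;
  public
    constructor Create(AFail: Boolean);
    destructor Destroy; override;
  end;

constructor TWorker.Create(AFail: Boolean);
begin
  inherited Create;
  FNames := TStringList.Create;
  if AFail then
    raise Exception.Create('Construction failed');
  // Not reached when AFail is True, so FBuffer stays nil
  FBuffer := TMemoryStream.Create;
end;

destructor TWorker.Destroy;
begin
  // Runs automatically when the constructor raises.
  // Free is safe on nil, so the partially built instance is handled.
  FBuffer.Free;
  FNames.Free;
  inherited;
end;

var
  Worker: TWorker;
begin
  ReportMemoryLeaksOnShutdown := True;
  Worker := nil;
  try
    Worker := TWorker.Create(True);
  except
    on E: Exception do
      Writeln('Caught: ', E.Message);
  end;
  // The assignment never happened, so the reference is still nil
  Assert(Worker = nil);
end.
```

Fields of a new instance start out zeroed, which is why FBuffer is nil when the constructor fails early.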

-

Just now, dummzeuch said: But there are always more complex examples where this won't work.

There are none. Free can always be called on nil instance. Running other code in the destructor where it is assumed that instance is not nil would require checking whether instance is nil before calling that code. In such code Free is often put within the Assigned block, not because it needs to be there but to avoid additional potentially unnecessary call when the instance is nil.

-

7 hours ago, alogrep said:

    Constructor TTCPEchoDaemon.Create;
    begin
      inherited create(False);
      FreeOnTerminate := true;
      sock := TTCPBlockSocket.create;
    end;

    Destructor TTCPEchoDaemon.Destroy;
    begin
      inherited destroy;
      Sock.free;
    end;

There are some issues in your code.

Your constructor can be simplified: the inherited thread constructor will handle raising an exception if the thread cannot be created. Also, it does not make sense to construct a suspended thread and then start it in the constructor. A non-suspended thread is automatically started in the AfterConstruction method, not before, so the whole constructor chain completes before the thread runs.

Additionally, your code constructs sock after you have called Resume, where it would be possible for the thread to access an instance which is not created yet (this is a very slight possibility, but it is still possible).

You should also destroy all objects in the destructor after you call inherited Destroy. That guarantees that the thread is no longer running at that point and prevents access to the already destroyed sock object.

Next, since you are creating a self-destroying thread: such threads don't have proper cleanup during application shutdown and will just be killed by the OS if they are still running at that time. If that happens there will be memory leaks. Explicitly releasing the thread would be better for controlled shutdown and thread cleanup.
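The points above can be put together in a rough sketch. It is not a rewrite of the original daemon: TEchoThread is a hypothetical class, and a TStringList stands in for the socket object so that the example compiles without any third-party units. The thread is not self-destroying, and the owner releases it explicitly.

```delphi
program ThreadOwnership;

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.Classes;

type
  // Hypothetical thread class; FLog stands in for the socket
  TEchoThread = class(TThread)
  private
    FLog: TStringList;
  protected
    procedure Execute; override;
  public
    constructor Create;
    destructor Destroy; override;
  end;

constructor TEchoThread.Create;
begin
  // Non-suspended: the thread is started in AfterConstruction,
  // after this constructor has finished
  inherited Create(False);
  FLog := TStringList.Create;
end;

destructor TEchoThread.Destroy;
begin
  // Terminates the thread and waits for it, so Execute
  // is no longer running when FLog is released below
  inherited Destroy;
  FLog.Free;
end;

procedure TEchoThread.Execute;
begin
  while not Terminated do
  begin
    FLog.Add('tick');
    Sleep(10);
  end;
end;

var
  Thread: TEchoThread;
begin
  Thread := TEchoThread.Create;
  Sleep(100);
  // Explicit release instead of FreeOnTerminate
  Thread.Free;
end.
```

Because FreeOnTerminate is left at False, the owner decides when the thread goes away, which gives a controlled shutdown.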

-

7 minutes ago, dummzeuch said:

    constructor TBla.Create;
    begin
      inherited;
      FSomeHelperObject := TSomeClass.Create;
    end;

    destructor TBla.Destroy;
    begin
      FSomeHelperObject.Free; // <== this might cause an AV if FSomeHelperObject hasn't been assigned
      inherited;
    end;

The above code is fine. Free can be called on nil object reference. Testing whether it is assigned before calling Free is redundant.
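A tiny sketch of that behavior, using a TStringList purely as an example object. Free checks the reference itself before calling the destructor, so the explicit Assigned test adds nothing.

```delphi
program FreeOnNil;

{$APPTYPE CONSOLE}

uses
  System.Classes;

var
  List: TStringList;
begin
  List := nil;
  // Safe: Free does nothing when the reference is nil
  List.Free;

  List := TStringList.Create;
  // Works, but the Assigned check is redundant
  if Assigned(List) then
    List.Free;
end.
```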

-

57 minutes ago, JonRobertson said: Word of caution, TLightweightMREW is not merely a lightweight equivalent of TMultiReadExclusiveWriteSynchronizer as it has different behavior.

Most notably, the write lock is not reentrant on TLightweightMREW. Acquiring the write lock twice from the same thread will cause a deadlock on Windows and will raise an exception on other platforms.

As for TMultiReadExclusiveWriteSynchronizer, it is implemented as an MREW only on Windows. On other platforms it is an exclusive lock.
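One way to live with the non-reentrant write lock is to never call a locking method from code that already holds the lock. The sketch below assumes a Delphi version that ships TLightweightMREW in System.SyncObjs. TCounter and its methods are hypothetical names for this illustration.

```delphi
program MrewSketch;

{$APPTYPE CONSOLE}

uses
  System.SyncObjs;

type
  // Hypothetical class guarding one integer with TLightweightMREW
  TCounter = class
  private
    FLock: TLightweightMREW;
    FValue: Integer;
  public
    procedure Add(Amount: Integer);
    procedure AddTwice(Amount: Integer);
    function Value: Integer;
  end;

procedure TCounter.Add(Amount: Integer);
begin
  FLock.BeginWrite;
  try
    Inc(FValue, Amount);
  finally
    FLock.EndWrite;
  end;
end;

procedure TCounter.AddTwice(Amount: Integer);
begin
  FLock.BeginWrite;
  try
    // Do not call Add here: it would take the write lock
    // a second time from the same thread
    Inc(FValue, Amount);
    Inc(FValue, Amount);
  finally
    FLock.EndWrite;
  end;
end;

function TCounter.Value: Integer;
begin
  FLock.BeginRead;
  try
    Result := FValue;
  finally
    FLock.EndRead;
  end;
end;

var
  Counter: TCounter;
begin
  Counter := TCounter.Create;
  try
    Counter.Add(1);
    Counter.AddTwice(2);
    Assert(Counter.Value = 5);
  finally
    Counter.Free;
  end;
end.
```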

-

27 minutes ago, chkaufmann said: The TBSItemProvider objects are used by any thread. And what David writes will work. I was thinking of something like this as well. The question for me is, how to do the cleanup: Either I have to call a function at the end of each thread or is there a global place to do this?

You would need to call a method at the beginning of the code that runs in a thread. That method would add the thread ID to the dictionary and create a cache instance for that key. At the end of the thread code you would call another method responsible for removing that key and its cache from the dictionary. This add/remove logic should be protected by some locking mechanism contained within the TBSItemProvider instance, and wrapped in a try...finally block so that cleanup is done even if the code in between raises an exception.

If you share other data within that instance between threads then any such access should also be protected by a lock, unless all threads are only reading the data.
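A rough sketch of that add/remove pattern follows. Nothing here is taken from the real TBSItemProvider: TItemProvider, TWorkerThread, the method names and the use of a TStringList as the cache are all assumptions made for the sake of a compilable example.

```delphi
program PerThreadCache;

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.Classes, System.SyncObjs,
  System.Generics.Collections;

type
  // Hypothetical stand-in for the provider class
  TItemProvider = class
  private
    FLock: TCriticalSection;
    FCaches: TObjectDictionary<TThreadID, TStringList>;
  public
    constructor Create;
    destructor Destroy; override;
    procedure AddCurrentThread;
    procedure RemoveCurrentThread;
    function CacheCount: Integer;
  end;

  TWorkerThread = class(TThread)
  private
    FProvider: TItemProvider;
  protected
    procedure Execute; override;
  public
    constructor Create(AProvider: TItemProvider);
  end;

constructor TItemProvider.Create;
begin
  inherited Create;
  FLock := TCriticalSection.Create;
  // The dictionary owns the caches and frees them on removal
  FCaches := TObjectDictionary<TThreadID, TStringList>.Create([doOwnsValues]);
end;

destructor TItemProvider.Destroy;
begin
  FCaches.Free;
  FLock.Free;
  inherited;
end;

procedure TItemProvider.AddCurrentThread;
begin
  FLock.Acquire;
  try
    FCaches.Add(TThread.CurrentThread.ThreadID, TStringList.Create);
  finally
    FLock.Release;
  end;
end;

procedure TItemProvider.RemoveCurrentThread;
begin
  FLock.Acquire;
  try
    FCaches.Remove(TThread.CurrentThread.ThreadID);
  finally
    FLock.Release;
  end;
end;

function TItemProvider.CacheCount: Integer;
begin
  FLock.Acquire;
  try
    Result := FCaches.Count;
  finally
    FLock.Release;
  end;
end;

constructor TWorkerThread.Create(AProvider: TItemProvider);
begin
  inherited Create(False);
  FProvider := AProvider;
end;

procedure TWorkerThread.Execute;
begin
  FProvider.AddCurrentThread;
  try
    Sleep(10); // placeholder for the real work
  finally
    // Cleanup runs even if the work raises an exception
    FProvider.RemoveCurrentThread;
  end;
end;

var
  Provider: TItemProvider;
  Threads: array[0..2] of TWorkerThread;
  I: Integer;
begin
  Provider := TItemProvider.Create;
  try
    for I := Low(Threads) to High(Threads) do
      Threads[I] := TWorkerThread.Create(Provider);
    for I := Low(Threads) to High(Threads) do
    begin
      Threads[I].WaitFor;
      Threads[I].Free;
    end;
    Assert(Provider.CacheCount = 0);
  finally
    Provider.Free;
  end;
end.
```

The lock lives inside the provider, so callers only need to pair the two calls with try...finally.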

-

3 hours ago, chkaufmann said: So this is the reason these two private variables should be "per thread".

Those are fields in a class, and threadvar is only supported for global variables.
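For comparison, this is roughly what a threadvar looks like when it is declared at the global level. The names CurrentItemID and TSetterThread are invented for this sketch. Each thread sees its own copy of the variable.

```delphi
program ThreadVarSketch;

{$APPTYPE CONSOLE}

uses
  System.Classes;

threadvar
  // Global declaration: every thread gets its own copy
  CurrentItemID: Integer;

type
  // Hypothetical thread that changes only its own copy
  TSetterThread = class(TThread)
  protected
    procedure Execute; override;
  end;

procedure TSetterThread.Execute;
begin
  CurrentItemID := 42;
end;

var
  Thread: TSetterThread;
begin
  CurrentItemID := 1;
  Thread := TSetterThread.Create(False);
  try
    Thread.WaitFor;
  finally
    Thread.Free;
  end;
  // The main thread's copy is untouched by the other thread
  Assert(CurrentItemID = 1);
end.
```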

There is not enough context around what you are trying to do, so it is hard to say what is the most appropriate solution. From how it looks now, I would say that what @David Heffernan proposed looks most suitable. You would have to create and remove items in the dictionary when thread is created and destroyed.

Another question is whether you are sharing other data within the TBSItemProvider instance between threads, which sounds like what you are doing, and what kind of protection you have around that data. If it is not read-only, then sharing that data without protection is not thread-safe.

-

50 minutes ago, Anders Melander said: I have migrated countless JIRA instances from server to cloud and it has never been "a significant effort". The only problem I can think of is if they had modified their server so much that it couldn't be migrated automatically.

I don't know the details of their setup or the migration process, but it definitely took much longer than initially expected. If I remember correctly this coincided with the server outage, so it is possible that this also had an impact.

52 minutes ago, Anders Melander said: But the problem with Atlassian is that the suckage only ever increases.

This is right on the mark.

-

22 minutes ago, dummzeuch said: Maybe Embarcadero really care about the old bug reports so they stayed with JIRA in order to make it easier to import the old reports into the new system?

They most likely stayed with JIRA because their internal system also uses JIRA. Even moving that internal JIRA to the cloud was a significant effort, so moving to something completely different would probably be more troublesome. Not to mention other possible differences in workflow.

Yes, JSM sucks big time, but this is because Atlassian made a subpar product.

-

15 minutes ago, Uwe Raabe said: Also the often praised Delphi 7 runs pretty unstable in a modern environment

I had zero problems with Delphi 7 in a modern environment. I still have it installed on Win 10. Works like it always did. I even used it in production until a few years ago: I have my own installer, and to keep it small I used Delphi 7.

-

It is hard to say which version is the most suitable and most usable because that will always depend on your code. If you are not using particular features then bugs in those features will not have any impact on your work although they may be showstoppers for others.

But historically, Delphi 7, 2007, and XE7 were pretty solid releases.

For newer ones, it is hard to say as there are plenty of changes and improvements in various areas, but also some issues. So it really depends on what you are doing.

-

NativeInt is 64-bit on Win64, so the Integer overload is not appropriate (too small) there. If you want to force the Integer overload, you will have to typecast: Integer(NewLine.Count - 1).
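A small sketch of forcing the Integer overload with a typecast. The overloaded Describe functions are hypothetical and only report which overload was chosen. The Count variable stands in for a NativeInt value such as the count mentioned above.

```delphi
program NativeIntCast;

{$APPTYPE CONSOLE}

// Hypothetical overloads that report which one was selected
function Describe(Value: Integer): string; overload;
begin
  Result := 'Integer';
end;

function Describe(Value: Int64): string; overload;
begin
  Result := 'Int64';
end;

var
  Count: NativeInt;
begin
  Count := 10;
  // The explicit typecast makes the argument an Integer,
  // so the Integer overload is selected on every platform
  Assert(Describe(Integer(Count - 1)) = 'Integer');
  Writeln(Describe(Integer(Count - 1)));
end.
```

The typecast truncates on Win64, so it is only appropriate when the value is known to fit into 32 bits.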

-

The only correct answer is to write Oranges

-

Stack Overflow Developers Survey for 2024 is live https://stackoverflow.com/dev-survey/start

Let's put Delphi on the map

-

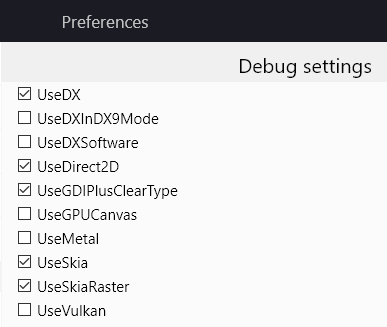

30 minutes ago, Hans♫ said: Enabling Vulkan requires to uncheck SkiaRaster, according to the description.

We are seeing a performance improvement and it runs pretty smooth when unchecking the SkiaRaster. If there is an additional performance improvement by enabling Vulkan, it is not clearly visible, so I'll need to measure the frame rate to find out.

However, unchecking SkiaRaster also disables anti-alias when drawing a path, so it is not currently an option.

Maybe that could be solved somehow by accessing the Skia Canvas?

I don't know. The question is what you get when you try enabling Vulkan. Do you have an aliasing problem with Vulkan, too? Each of those checks uses a completely different rendering technology, so the Skia Raster canvas is a different one from Skia Vulkan.

-

-

On 5/2/2024 at 5:13 PM, Hans♫ said: This is with GlobalUseSkiaRasterWhenAvailable=true. Setting GlobalUseSkiaRasterWhenAvailable=false, then the framerate doubles, but then vector graphics is no longer antialiased.

What are your exact settings when the drawing is slow on Windows? All GlobalXXX values you are using?

-

19 hours ago, Hans♫ said: Both Intel and Silicon work "fine" when enabling Metal. However, we have not measured the framerate on animations or the painting speed, so here "fine" means that it's high enough to not attract attention.

If all Macs work fine, then this is not due to tile based rendering (or at least not significantly), probably unified CPU/GPU memory is more relevant here. Another possibility would be that there is some difference in FMX platform specific code. But I cannot comment on that as I never looked at it.

-

3 hours ago, Rafal.B said: As you can see, the topic is not new, and EMB has not done anything about it (for now) 😞

The question is whether they can do something about it at all.

For a start, Skia in Delphi operates on several layers of indirection (including some poor design choices), which also involve memory-intensive operations on each paint (including reference counting). When you add FireMonkey on top, that is an additional layer. All of that does make Skia slower than it could be. But that problem exists on all platforms. ARM, and especially Apple Silicon, have better performance when it comes to reference counting, but that is probably still not enough to explain the difference.

Some of the difference can also be explained by juggling memory between CPU and GPU on Windows, as those other platforms use unified memory for CPU/GPU. However, my son Rene (who spends his days fiddling with GPU rendering stuff) said that, judging from the way the CPU and GPU behave when running the Skia benchmark test, all this still might not be enough to explain the observed difference, and that tile-based rendering on those other platforms could play a significant role.

Another question is how Skia painting actually works underneath all those layers and whether there is some additional batching of operations or not.

@Hans♫ Do the Macs you tested it on have Apple Silicon? If they do then they also support tile based rendering.

As far as solutions are concerned, it is always possible to achieve some speedups by reusing and caching objects that are unnecessarily allocated on the fly. Those could be in the FMX code itself, or in how FMX controls are used and how many of them are on the screen. I used to create custom controls that would cache and reuse some FMX graphic objects when painting, instead of creating complex layouts with many individual FMX controls.

-


Dynamic array used as a queue. Memory fragmentation?

in RTL and Delphi Object Pascal


If you are frequently adding/removing items from the array and the maximum occupied memory is not an issue, I would use TList<T>, which supports Capacity, instead of a dynamic array. Set the Capacity to the maximum number of elements you are expecting (if there are more, the underlying dynamic array will grow automatically). That will prevent frequent allocations/deallocations and improve performance. You may also use TQueue<T>, which might be a better fit for your needs.

The whole array needs to be allocated in one contiguous block, so reallocating that array will not by itself cause memory fragmentation.
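A short sketch of both suggestions, with an element count of 1000 chosen arbitrarily for the example. Setting Capacity up front reserves the backing storage once, and TQueue<T> gives first-in, first-out access without manual index handling.

```delphi
program QueueSketch;

{$APPTYPE CONSOLE}

uses
  System.Generics.Collections;

var
  List: TList<Integer>;
  Queue: TQueue<Integer>;
  I: Integer;
begin
  List := TList<Integer>.Create;
  try
    // Reserve room once instead of growing step by step
    List.Capacity := 1000;
    for I := 1 to 1000 do
      List.Add(I);
    Assert(List.Count = 1000);
  finally
    List.Free;
  end;

  Queue := TQueue<Integer>.Create;
  try
    Queue.Enqueue(1);
    Queue.Enqueue(2);
    // Items come out in the order they were added
    Assert(Queue.Dequeue = 1);
    Assert(Queue.Count = 1);
  finally
    Queue.Free;
  end;
end.
```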